I am trying to convert an ONNX model to HEF, but I am encountering an error when attempting to optimize my model. Below is the error message I am receiving:

(hailodfc) (base) ykl@ykl-virtual-machine:~/hailodfc$ /home/ykl/hailodfc/bin/python /home/ykl/hailodfc/onnx2har.py

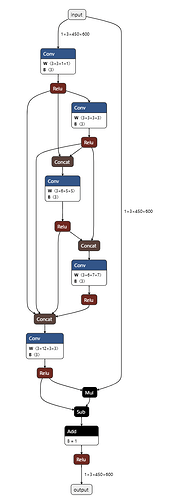

[info] Translation started on ONNX model Aod-Net

[info] Restored ONNX model Aod-Net (completion time: 00:00:00.01)

[info] Extracted ONNXRuntime meta-data for Hailo model (completion time: 00:00:00.03)

[info] Start nodes mapped from original model: 'input': 'Aod-Net/input_layer1'.

[info] End nodes mapped from original model: 'Relu_17'.

[info] Translation completed on ONNX model Aod-Net (completion time: 00:00:00.39)

[info] Saved HAR to: /home/ykl/hailodfc/Aod-Net_hailo_model.har

[info] Loading model script commands to Aod-Net from string

[info] Starting Model Optimization

[warning] Reducing compression ratio to 0 because the number of parameters in the network is not large enough (0M and need at least 20M). Can be enforced using model_optimization_config(compression_params, auto_4bit_weights_ratio=0.400)

[info] Model received quantization params from the hn

[info] MatmulDecompose skipped

[info] Starting Mixed Precision

[info] Model Optimization Algorithm Mixed Precision is done (completion time is 00:00:00.09)

[info] Remove layer Aod-Net/conv2 because it has no effect on the network output

[info] Remove layer Aod-Net/concat2 because it has no effect on the network output

[info] Remove layer Aod-Net/conv4 because it has no effect on the network output

Traceback (most recent call last):

File "/home/ykl/hailodfc/onnx2har.py", line 39, in <module>

runner.optimize(calib_dataset)

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_sdk_common/states/states.py", line 16, in wrapped_func

return func(self, *args, **kwargs)

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_sdk_client/runner/client_runner.py", line 2128, in optimize

self._optimize(calib_data, data_type=data_type, work_dir=work_dir)

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_sdk_common/states/states.py", line 16, in wrapped_func

return func(self, *args, **kwargs)

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_sdk_client/runner/client_runner.py", line 1970, in _optimize

self._sdk_backend.full_quantization(calib_data, data_type=data_type, work_dir=work_dir)

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_sdk_client/sdk_backend/sdk_backend.py", line 1125, in full_quantization

self._full_acceleras_run(self.calibration_data, data_type)

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_sdk_client/sdk_backend/sdk_backend.py", line 1319, in _full_acceleras_run

optimization_flow.run()

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_model_optimization/tools/orchestator.py", line 306, in wrapper

return func(self, *args, **kwargs)

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_model_optimization/flows/optimization_flow.py", line 335, in run

step_func()

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_model_optimization/tools/orchestator.py", line 250, in wrapped

result = method(*args, **kwargs)

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_model_optimization/tools/subprocess_wrapper.py", line 124, in parent_wrapper

func(self, *args, **kwargs)

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_model_optimization/flows/optimization_flow.py", line 351, in step1

self.pre_quantization_structural()

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_model_optimization/tools/orchestator.py", line 250, in wrapped

result = method(*args, **kwargs)

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_model_optimization/flows/optimization_flow.py", line 384, in pre_quantization_structural

self._remove_dead_layers()

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_model_optimization/tools/orchestator.py", line 250, in wrapped

result = method(*args, **kwargs)

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_model_optimization/flows/optimization_flow.py", line 512, in _remove_dead_layers

algo.run()

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_model_optimization/algorithms/optimization_algorithm.py", line 54, in run

return super().run()

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_model_optimization/algorithms/algorithm_base.py", line 150, in run

self._run_int()

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_model_optimization/algorithms/dead_layers_removal.py", line 79, in _run_int

new_input, new_output = self._infer_model_random_data(ref_input)

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_model_optimization/algorithms/dead_layers_removal.py", line 115, in _infer_model_random_data

output = self._model(random_input)

File "/home/ykl/hailodfc/lib/python3.10/site-packages/keras/utils/traceback_utils.py", line 70, in error_handler

raise e.with_traceback(filtered_tb) from None

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_model_optimization/acceleras/utils/distributed_utils.py", line 122, in wrapper

res = func(self, *args, **kwargs)

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_model_optimization/acceleras/model/hailo_model/hailo_model.py", line 1153, in build

self.compute_output_shape(input_shape)

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_model_optimization/acceleras/model/hailo_model/hailo_model.py", line 1091, in compute_output_shape

return self.compute_and_verify_output_shape(input_shape, verify_layer_inputs_shape=False)

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_model_optimization/acceleras/model/hailo_model/hailo_model.py", line 1125, in compute_and_verify_output_shape

layer_output_shape = layer.compute_output_shape(layer_input_shapes)

File "/home/ykl/hailodfc/lib/python3.10/site-packages/hailo_model_optimization/acceleras/hailo_layers/base_hailo_layer.py", line 1572, in compute_output_shape

raise ValueError(

ValueError: Inputs and input nodes not the same length in layer Aod-Net/concat1 - inputs: 4, nodes: 2

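The error appears to stem from a mismatch between the number of inputs and the number of input nodes in the layer Aod-Net/concat1: the layer receives 4 inputs, but only 2 input nodes are defined for it. According to the traceback, it is raised during dead-layer removal (_remove_dead_layers), right after Aod-Net/conv2, Aod-Net/concat2, and Aod-Net/conv4 were removed for having no effect on the network output.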
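One check that can be run on the ONNX side is to list every Concat node together with how many inputs it has and whether any tensor is fed in more than once. The snippet below is only a minimal diagnostic sketch, not a confirmed fix: the helper name concat_fan_in and the demo nodes are hypothetical stand-ins (not the real Aod-Net graph), and it relies only on the name, op_type and input fields that ONNX nodes expose. Whether duplicated inputs are actually related to the error above is an assumption that has not been verified.

```python
from collections import Counter
from types import SimpleNamespace


def concat_fan_in(nodes):
    """Return {concat_name: (num_inputs, duplicated_input_names)} for Concat nodes.

    `nodes` is any iterable of objects exposing `name`, `op_type` and `input`,
    which are the fields of an ONNX NodeProto.
    """
    report = {}
    for node in nodes:
        if node.op_type != "Concat":
            continue
        counts = Counter(node.input)
        # Tensor names that appear more than once among this node's inputs.
        duplicates = sorted(name for name, n in counts.items() if n > 1)
        report[node.name] = (len(node.input), duplicates)
    return report


# Hypothetical stand-in nodes, for illustration only (not the real Aod-Net).
demo_nodes = [
    SimpleNamespace(name="Conv_0", op_type="Conv", input=["input", "w0", "b0"]),
    SimpleNamespace(name="Concat_4", op_type="Concat", input=["x1", "x2"]),
    SimpleNamespace(name="Concat_9", op_type="Concat", input=["x1", "x2", "x1", "x2"]),
]

for name, (n_inputs, dups) in concat_fan_in(demo_nodes).items():
    print(f"{name}: {n_inputs} inputs, duplicated: {dups}")
```

With the onnx package installed, the same helper should accept the real graph, for example concat_fan_in(onnx.load("Aod-Net.onnx").graph.node), where the file name is an assumption. Comparing that report against the "inputs: 4, nodes: 2" message may show whether the mismatch already exists in the exported model or only appears after translation.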

I am unsure how to resolve this issue. Has anyone encountered this problem before, or could someone provide a solution?

Your help will be greatly appreciated!