Hi everyone,

I am trying to convert a yolov11s-pose model (a .pt file) into a .hef file so that it can run on a Raspberry Pi. I am very new to Hailo, and this is my first time going through the conversion process. I am following the usual routine: .pt → .onnx → Hailo parser → Hailo optimize → Hailo compiler → .hef file.
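A minimal sketch of the three DFC steps in this routine as a small Python helper. It only builds the command lines, using the subcommands and flags that appear in this post (`hailo parser onnx`, `hailo optimize ... --use-random-calib-set --hw-arch`, `hailo compiler`). The function name `dfc_commands` and its parameters are hypothetical, and the .har file names are passed in explicitly rather than guessed.

```python
import shlex


def dfc_commands(onnx_path, parsed_har, optimized_har, hw_arch="hailo8l"):
    """Build the parser -> optimize -> compiler command lines as argument lists.

    Only flags that appear in this post are used. The .har names are
    parameters because the DFC's output naming is not assumed here.
    """
    return [
        # Step 1: parse the ONNX model into a .har file.
        ["hailo", "parser", "onnx", onnx_path],
        # Step 2: optimize (quantize) using a random calibration set.
        ["hailo", "optimize", parsed_har, "--use-random-calib-set", "--hw-arch", hw_arch],
        # Step 3: compile the optimized .har (the .hef is the expected result).
        ["hailo", "compiler", optimized_har],
    ]


if __name__ == "__main__":
    commands = dfc_commands(
        "yolov11s-pose-modified.onnx",
        "yolov11s-pose-modified.har",
        "yolov11s-pose_optimized.har",
    )
    for cmd in commands:
        # Print each command; pass `cmd` to subprocess.run(cmd, check=True)
        # inside the DFC virtualenv to actually execute it.
        print(shlex.join(cmd))
```

Printing the commands first makes it easy to check the file names before running anything. The parser step may still prompt interactively (for example about end nodes), which this sketch does not handle.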

On my first attempt, I used the YOLO11s-pose.pt released by Ultralytics and converted it to .onnx in Colab, following this guide: Guide to using the DFC to convert a modified YoloV11 on Google Colab. Then I ran the following commands:

`hailo parser onnx yolov11s-pose-modified.onnx` (with the recommended end node names)

`hailo optimize yolov11s-pose-modified.har --use-random-calib-set`

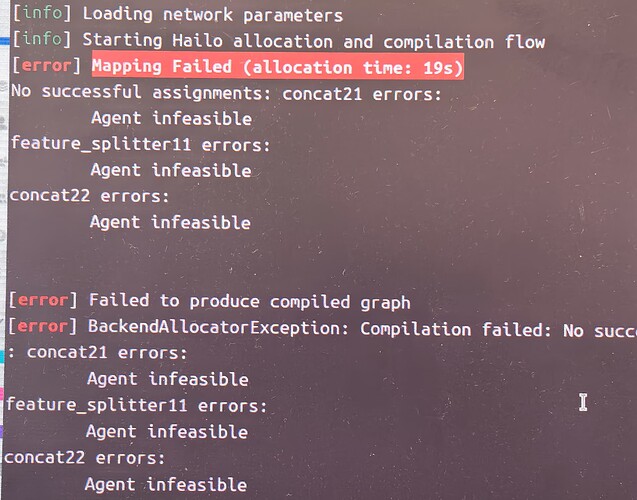

and `hailo compiler yolov11s-pose_optimized.har`, which fails with a "Mapping Failed" error on concat21.

(The complete error message is at the bottom of this post.)

I searched for previous posts about this error and found this one: Concat17 error during custom yolov8m.pt compilation using DFC, which suggests splitting large concat operations into smaller ones.
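To illustrate what "splitting a large concat into smaller ones" means, here is a minimal sketch in plain Python. The helper `chunked_concat` is hypothetical and is not taken from Ultralytics or the Hailo tools. It replaces one N-input concat with a series of concats that each take at most `max_inputs` inputs while preserving input order. Whether this particular grouping resolves the DFC mapping error is not verified here.

```python
def chunked_concat(parts, concat, max_inputs=2):
    """Concatenate `parts` in order using concat calls of at most `max_inputs` inputs.

    `concat` is any function that joins a list of pieces into one piece.
    With PyTorch it could be `lambda chunk: torch.cat(chunk, dim=1)`.
    """
    if max_inputs < 2:
        raise ValueError("max_inputs must be at least 2")
    parts = list(parts)
    if not parts:
        raise ValueError("need at least one part")
    # Repeatedly merge neighbouring groups until one small concat is enough.
    while len(parts) > max_inputs:
        merged = []
        for i in range(0, len(parts), max_inputs):
            group = parts[i:i + max_inputs]
            # A leftover single piece is carried forward unchanged.
            merged.append(concat(group) if len(group) > 1 else group[0])
        parts = merged
    return concat(parts) if len(parts) > 1 else parts[0]


def list_concat(chunk):
    """Stand-in for a tensor concat: joins a list of Python lists."""
    return [x for piece in chunk for x in piece]


if __name__ == "__main__":
    pieces = [[1], [2, 3], [4], [5, 6], [7]]
    print(chunked_concat(pieces, list_concat, max_inputs=2))
```

The result is the same as a single large concat, but the exported graph contains several small concat nodes instead of one wide one.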

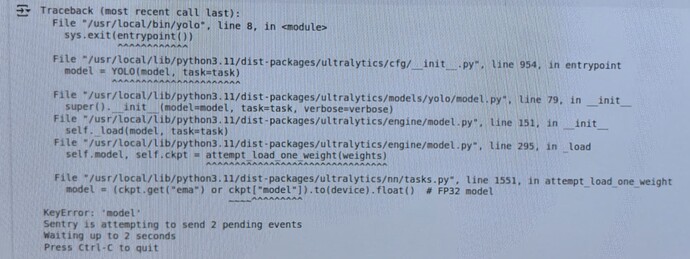

So I dug into the source code (the ultralytics package) and split the large concat operations that I could find. After that, the CLI command I had used to convert .pt to .onnx no longer worked and produced this error:

I then followed the code in the guide to convert my new .pt file to .onnx in Python, and this time it worked.

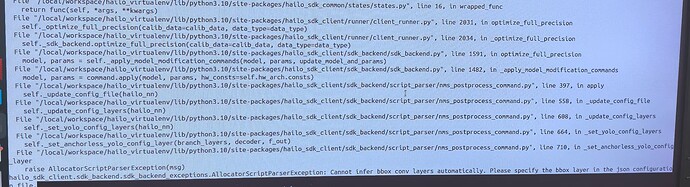

Then, when I ran `hailo parser onnx yolov11s-pose-modified.onnx` to get the .har file, the recommended end nodes did not work. I selected a set of end nodes using Netron (6 nodes in total), and parsing succeeded. However, when I tried to optimize with the command `hailo optimize yolov11s-pose-modified.har --use-random-calib-set --hw-arch hailo8l`, I got a "Cannot infer bbox conv layers automatically" error (the full traceback is at the bottom of this post).

I am a little confused about whether I am on the right track to solve the very first error (the concat21 one), and also about how to solve the ‘Cannot infer’ error above. I suppose a .script file might help, but I am not sure what the script file should contain to solve my problem.

Any help is appreciated, thanks in advance!

The complete error messages for the errors mentioned above are as follows:

[Here is the complete error message of the Concat21 error]

[info] Current Time: 10:48:45, 06/13/25

[info] CPU: Architecture: x86_64, Model: Intel(R) Xeon(R) Silver 4210R CPU @ 2.40GHz, Number Of Cores: 40, Utilization: 0.0%

[info] Memory: Total: 125GB, Available: 123GB

[info] System info: OS: Linux, Kernel: 5.15.0-139-generic

[info] Hailo DFC Version: 3.30.0

[info] HailoRT Version: 4.20.0

[info] PCIe: No Hailo PCIe device was found

[info] Running hailo compiler yolov11s-pose_optimized.har

[info] Compiling network

[info] To achieve optimal performance, set the compiler_optimization_level to "max" by adding performance_param(compiler_optimization_level=max) to the model script. Note that this may increase compilation time.

[info] Loading network parameters

[info] Starting Hailo allocation and compilation flow

[error] Mapping Failed (allocation time: 19s)

No successful assignments: concat21 errors:

Agent infeasible

feature_splitter11 errors:

Agent infeasible

concat22 errors:

Agent infeasible

[error] Failed to produce compiled graph

[error] BackendAllocatorException: Compilation failed: No successful assignments: concat21 errors:

Agent infeasible

feature_splitter11 errors:

Agent infeasible

concat22 errors:

Agent infeasible

[Here is the complete error message of the ‘Cannot infer’ error]

[info] Current Time: 11:16:50, 06/13/25

[info] CPU: Architecture: x86_64, Model: Intel(R) Xeon(R) Silver 4210R CPU @ 2.40GHz, Number Of Cores: 40, Utilization: 0.2%

[info] Memory: Total: 125GB, Available: 123GB

[info] System info: OS: Linux, Kernel: 5.15.0-139-generic

[info] Hailo DFC Version: 3.30.0

[info] HailoRT Version: 4.20.0

[info] PCIe: No Hailo PCIe device was found

[info] Running hailo optimize yolov11s-pose-modified.har --use-random-calib-set --hw-arch hailo8l

[warning] hw_arch from HAR is hailo8 but client runner was initialized with hailo8l. Using hailo8l

[info] For NMS architecture yolov8 the default engine is cpu. For other engine please use the 'engine' flag in the nms_postprocess model script command. If the NMS has been added during parsing, please parse the model again without confirming the addition of the NMS, and add the command manually with the desired engine.

[info] The layer yolov11s-pose-modified/conv51 was detected as reg_layer.

Traceback (most recent call last):

File "/local/workspace/hailo_virtualenv/bin/hailo", line 8, in <module>

sys.exit(main())

File "/local/workspace/hailo_virtualenv/lib/python3.10/site-packages/hailo_sdk_client/tools/cmd_utils/main.py", line 111, in main

ret_val = client_command_runner.run()

File "/local/workspace/hailo_virtualenv/lib/python3.10/site-packages/hailo_platform/tools/hailocli/main.py", line 64, in run

ret_val = self._run(argv)

File "/local/workspace/hailo_virtualenv/lib/python3.10/site-packages/hailo_platform/tools/hailocli/main.py", line 111, in _run

return args.func(args)

File "/local/workspace/hailo_virtualenv/lib/python3.10/site-packages/hailo_sdk_client/tools/optimize_cli.py", line 113, in run

self._runner.optimize_full_precision(calib_data=dataset)

File "/local/workspace/hailo_virtualenv/lib/python3.10/site-packages/hailo_sdk_common/states/states.py", line 16, in wrapped_func

return func(self, *args, **kwargs)

File "/local/workspace/hailo_virtualenv/lib/python3.10/site-packages/hailo_sdk_client/runner/client_runner.py", line 2031, in optimize_full_precision

self._optimize_full_precision(calib_data=calib_data, data_type=data_type)

File "/local/workspace/hailo_virtualenv/lib/python3.10/site-packages/hailo_sdk_client/runner/client_runner.py", line 2034, in _optimize_full_precision

self._sdk_backend.optimize_full_precision(calib_data=calib_data, data_type=data_type)

File "/local/workspace/hailo_virtualenv/lib/python3.10/site-packages/hailo_sdk_client/sdk_backend/sdk_backend.py", line 1591, in optimize_full_precision

model, params = self._apply_model_modification_commands(model, params, update_model_and_params)

File "/local/workspace/hailo_virtualenv/lib/python3.10/site-packages/hailo_sdk_client/sdk_backend/sdk_backend.py", line 1482, in _apply_model_modification_commands

model, params = command.apply(model, params, hw_consts=self.hw_arch.consts)

File "/local/workspace/hailo_virtualenv/lib/python3.10/site-packages/hailo_sdk_client/sdk_backend/script_parser/nms_postprocess_command.py", line 397, in apply

self._update_config_file(hailo_nn)

File "/local/workspace/hailo_virtualenv/lib/python3.10/site-packages/hailo_sdk_client/sdk_backend/script_parser/nms_postprocess_command.py", line 558, in _update_config_file

self._update_config_layers(hailo_nn)

File "/local/workspace/hailo_virtualenv/lib/python3.10/site-packages/hailo_sdk_client/sdk_backend/script_parser/nms_postprocess_command.py", line 608, in _update_config_layers

self._set_yolo_config_layers(hailo_nn)

File "/local/workspace/hailo_virtualenv/lib/python3.10/site-packages/hailo_sdk_client/sdk_backend/script_parser/nms_postprocess_command.py", line 664, in _set_yolo_config_layers

self._set_anchorless_yolo_config_layer(branch_layers, decoder, f_out)

File "/local/workspace/hailo_virtualenv/lib/python3.10/site-packages/hailo_sdk_client/sdk_backend/script_parser/nms_postprocess_command.py", line 710, in _set_anchorless_yolo_config_layer

raise AllocatorScriptParserException(msg)

hailo_sdk_client.sdk_backend.sdk_backend_exceptions.AllocatorScriptParserException: Cannot infer bbox conv layers automatically. Please specify the bbox layer in the json configuration file.