NOTE: This article is currently being verified with the latest DFC and will be updated later.

With the Dataflow Compiler (DFC), two different models can be deployed on a single device with a shared input, provided that all of the following conditions are met:

- Both models take input data of the same size.

- Both models use the same preprocessing (such as normalization).

- The HEF generated from the joined model fits within a single context.
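
The first two conditions can be checked before any Hailo tooling is involved. The following is a minimal sketch in plain Python, assuming the input shape and normalization constants of each model are already known. `ModelInputSpec` and `sharing_problems` are hypothetical helpers written for this illustration and are not part of the Hailo SDK, and the 224x224x3 input shape is an illustrative value. The third condition (single context) is not covered here.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class ModelInputSpec:
    """Hypothetical description of one model's input (not a Hailo SDK type)."""
    name: str
    input_shape: Tuple[int, int, int]  # (height, width, channels)
    mean: Tuple[float, ...]            # per-channel normalization mean
    std: Tuple[float, ...]             # per-channel normalization std


def sharing_problems(a: ModelInputSpec, b: ModelInputSpec) -> List[str]:
    """Return the reasons why a and b cannot share an input (empty if none)."""
    problems = []
    if a.input_shape != b.input_shape:
        problems.append(f"input size differs: {a.input_shape} vs {b.input_shape}")
    if a.mean != b.mean or a.std != b.std:
        problems.append("normalization parameters differ")
    return problems


# Illustrative values only; the shape is an assumption for this sketch.
spec_1 = ModelInputSpec("resnet_v1_18", (224, 224, 3),
                        (123.675, 116.28, 103.53), (58.395, 57.12, 57.375))
spec_2 = ModelInputSpec("resnet_v1_18", (224, 224, 3),
                        (123.675, 116.28, 103.53), (58.395, 57.12, 57.375))

problems = sharing_problems(spec_1, spec_2)
print("inputs can be shared" if not problems else problems)
```

Returning a list of reasons rather than a single boolean makes it easier to see which condition failed when two models turn out to be incompatible.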

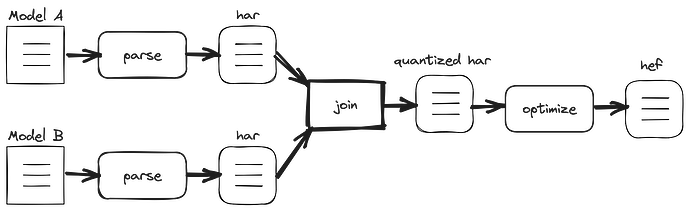

The overall procedure is roughly as follows:

- Perform parsing for each model.

- Join the models using JoinAction.AUTO_JOIN_INPUTS.

- Perform quantization on the joined model.

- Compile the quantized joined model.

The following pseudocode joins two models, using resnet_v1_18 from the Hailo Model Zoo for both.

from hailo_sdk_client import ClientRunner

from hailo_sdk_client.exposed_definitions import JoinAction

model_name_1 = 'resnet_v1_18'

model_name_2 = 'resnet_v1_18'

hailo_model_har_name_1 = '{}_hailo_model.har'.format(model_name_1)

hailo_model_har_name_2 = '{}_hailo_model.har'.format(model_name_2)

# Create runner

runner1 = ClientRunner(hw_arch='hailo8', har_path=hailo_model_har_name_1)

runner2 = ClientRunner(hw_arch='hailo8', har_path=hailo_model_har_name_2)

# Join runner

runner1.join(runner2, scope1_name={'resnet_v1_18':'resnet_v1_18_1'}, scope2_name={'resnet_v1_18':'resnet_v1_18_2'}, join_action=JoinAction.AUTO_JOIN_INPUTS)

# Apply model script

alls_joined = '''

resnet_v1_18_1/normalization1 = normalization([123.675, 116.28, 103.53], [58.395, 57.12, 57.375], resnet_v1_18_1/input_layer1)

resnet_v1_18_2/normalization1 = normalization([123.675, 116.28, 103.53], [58.395, 57.12, 57.375], resnet_v1_18_2/input_layer1)

quantization_param({*output_layer*}, precision_mode=a8_w8)

'''

_ = runner1.load_model_script(alls_joined)

# Call Optimize to perform the optimization process

# calib_dataset is the calibration dataset, which must be prepared beforehand (not shown here)

runner1.optimize(calib_dataset)

# Save the result state to a Quantized HAR file

quantized_model_har_path = '{}_quantized_model.joined.har'.format(model_name_1)

runner1.save_har(quantized_model_har_path)
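
The listing above stops after quantization, while the procedure ends with compiling the quantized joined model. A minimal sketch of that last step follows, assuming the runner's `compile()` method returns the HEF as bytes, as in the DFC tutorials. `compile_to_hef` and the output file name are hypothetical names chosen for this illustration, and the behavior should be confirmed against the DFC version in use.

```python
from pathlib import Path


def compile_to_hef(runner, hef_path):
    """Compile a quantized runner and write the resulting HEF to hef_path.

    Assumption: `runner` is a ClientRunner holding the quantized joined
    model, and runner.compile() returns the HEF content as bytes.
    """
    hef = runner.compile()
    path = Path(hef_path)
    path.write_bytes(hef)
    return path


# Usage with the runner from the listing above (file name is hypothetical):
# compile_to_hef(runner1, 'resnet_v1_18_joined.hef')
```

The compilation result is also where the single-context condition is settled, since the number of contexts is determined by the compiler.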