For example, here is one option how I was able to run with one code all 3 detections. But it looks like a very weird implementation. Can you suggest or show some more correct examples?

Code id just a working example without any cleaning or documentation for testing purposes

import cv2

import numpy as np

from picamera2.devices import Hailo

def letterbox_image(img, size):

iw, ih = img.shape[1], img.shape[0]

w, h = size

scale = min(w / iw, h / ih)

nw, nh = int(iw * scale), int(ih * scale)

image_resized = cv2.resize(img, (nw, nh), interpolation=cv2.INTER_LINEAR)

image_padded = np.full((h, w, 3), 128, dtype=np.uint8)

top = (h - nh) // 2

left = (w - nw) // 2

image_padded[top:top + nh, left:left + nw, :] = image_resized

return image_padded, scale, left, top

def extract_detections(hailo_output, original_w, original_h, padded_w, padded_h, scale, pad_left, pad_top, class_names, threshold=0.5):

results = []

for class_id, detections in enumerate(hailo_output):

for detection in detections:

score = detection[4]

if score >= threshold:

y0, x0, y1, x1 = detection[:4]

x0_pixel = int((x0 * padded_w - pad_left) / scale)

y0_pixel = int((y0 * padded_h - pad_top) / scale)

x1_pixel = int((x1 * padded_w - pad_left) / scale)

y1_pixel = int((y1 * padded_h - pad_top) / scale)

bbox = (x0_pixel, y0_pixel, x1_pixel, y1_pixel)

results.append([class_names[class_id], bbox, score])

return results

def draw_detections(img, detections):

for class_name, bbox, score in detections:

x0, y0, x1, y1 = bbox

label = f"{class_name}"

cv2.rectangle(img, (x0, y0), (x1, y1), (0, 255, 0), 2)

cv2.putText(img, label, (x0, y0 - 10), cv2.FONT_HERSHEY_SIMPLEX, 1, (0, 0, 255), 2)

return img

if __name__ == "__main__":

with open("coco.txt", 'r', encoding="utf-8") as f:

class_names_yolo = f.read().splitlines()

hef_one = "/usr/share/hailo-models/yolov8s_h8l.hef"

license_plate_model = "./license_plate_model.hef"

hef_model_ocr = "./license_plate_ocr_new.hef"

class_names = list('0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ')

with Hailo(hef_one) as hailo:

img = cv2.imread("./cars/car_17.jpg")

original_w, original_h = img.shape[1], img.shape[0]

input_size = (640, 640)

padded_img, scale, pad_left, pad_top = letterbox_image(img, input_size)

frame = cv2.resize(padded_img, (hailo.get_input_shape()[1], hailo.get_input_shape()[0]))

results = hailo.run(frame)

car_detections = extract_detections(results[0], original_w, original_h, input_size[0], input_size[1], scale, pad_left, pad_top, class_names_yolo, threshold=0.5)

if car_detections:

car_x0, car_y0, car_x1, car_y1 = car_detections[0][1]

cropped_car_img = img[car_y0:car_y1, car_x0:car_x1]

cv2.imwrite("detected_car.jpg", cropped_car_img)

plate_detections = []

if car_detections:

with Hailo(license_plate_model) as hailo:

img_car = cv2.imread("./detected_car.jpg")

car_crop_w, car_crop_h = img_car.shape[1], img_car.shape[0]

input_size = (640, 640)

padded_img, scale, pad_left, pad_top = letterbox_image(img_car, input_size)

frame = cv2.resize(padded_img, (hailo.get_input_shape()[1], hailo.get_input_shape()[0]))

results = hailo.run(frame)

plate_detections = extract_detections(results[0], car_crop_w, car_crop_h, input_size[0], input_size[1], scale, pad_left, pad_top, ['license plate'], threshold=0.5)

if plate_detections:

plate_x0, plate_y0, plate_x1, plate_y1 = plate_detections[0][1]

cropped_plate_img = cropped_car_img[plate_y0:plate_y1, plate_x0:plate_x1]

cv2.imwrite("detected_plate.jpg", cropped_plate_img)

ocr_detections = []

if plate_detections:

with Hailo(hef_model_ocr) as hailo:

img_plate = cv2.imread("./detected_plate.jpg")

plate_crop_w, plate_crop_h = img_plate.shape[1], img_plate.shape[0]

input_size = (640, 640)

padded_img, scale, pad_left, pad_top = letterbox_image(img_plate, input_size)

frame = cv2.resize(padded_img, (hailo.get_input_shape()[1], hailo.get_input_shape()[0]))

results = hailo.run(frame)

ocr_detections = extract_detections(results[0], plate_crop_w, plate_crop_h, input_size[0], input_size[1], scale, pad_left, pad_top, class_names, threshold=0.5)

if ocr_detections:

detections_sorted = sorted(ocr_detections, key=lambda x: x[1][0])

license_plate_text = ''.join([det[0] for det in detections_sorted])

print(f"License Plate: {license_plate_text}")

final_img = img.copy()

final_img = draw_detections(final_img, car_detections)

if plate_detections:

plate_x0, plate_y0, plate_x1, plate_y1 = plate_detections[0][1]

adjusted_plate_detections = [[det[0],

(car_x0 + plate_x0 + det[1][0], car_y0 + plate_y0 + det[1][1],

car_x0 + plate_x0 + det[1][2], car_y0 + plate_y0 + det[1][3]),

det[2]] for det in ocr_detections]

final_img = draw_detections(final_img, adjusted_plate_detections)

cv2.imwrite("output_with_all_detections.jpg", final_img)

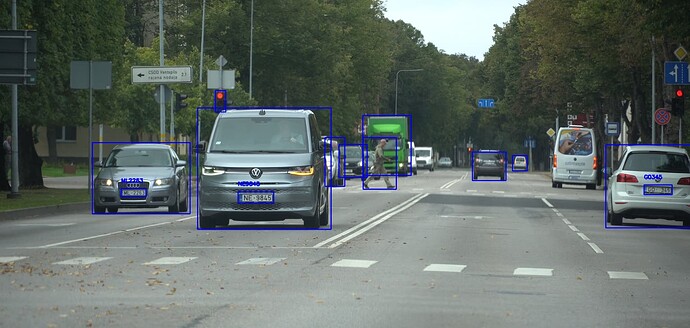

And some results how it works, if someone is interested

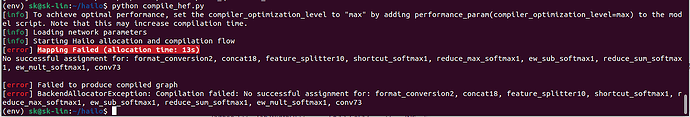

But sometimes I get this error, maybe someone knows something about it too?

terminate called after throwing an instance of 'std::system_error'

what(): Resource deadlock avoided

Aborted