User Guide: Running Multi-Stream Inference with DeGirum PySDK

DeGirum PySDK simplifies the integration of AI models into applications, enabling powerful inference workflows with minimal code. PySDK allows users to deploy models across cloud and edge devices with ease. For additional examples and hardware setup instructions, visit our Hailo Examples Repository.

Overview

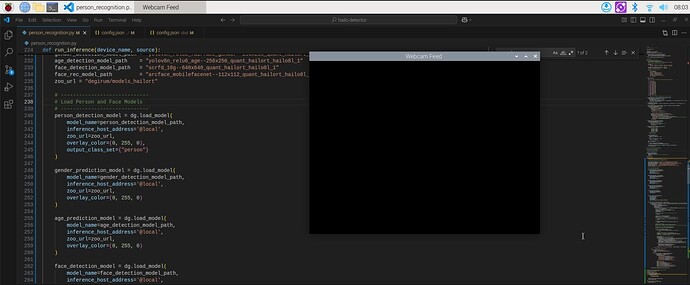

This guide demonstrates how to run AI inference on multiple video streams simultaneously using DeGirum PySDK. The script employs multithreading to process video streams independently, supporting both video files and webcam feeds. Each stream uses a different AI model, showcasing the flexibility and scalability of PySDK.

Example: Multi-Stream Inference

In this example:

- Traffic Camera Stream: Detects objects in a video file using the YOLOv8 object detection model.

- Webcam Stream: Detects faces in a live webcam feed using a face detection model.

Both streams are processed concurrently, and results are displayed in separate windows.

Code Reference

```python
import threading

import degirum as dg
import degirum_tools

# Choose the inference host address
inference_host_address = "@cloud"
# inference_host_address = "@local"

# Choose the model zoo URL
zoo_url = "degirum/models_hailort"
# zoo_url = "../models"

# Set the token (needed for cloud inference)
token = degirum_tools.get_token()
# token = ''  # leave empty for local inference

# Define the configurations for the video file and the webcam
configurations = [
    {
        "model_name": "yolov8n_relu6_coco--640x640_quant_hailort_hailo8_1",
        "source": "../assets/Traffic.mp4",  # Video file
        "display_name": "Traffic Camera",
    },
    {
        "model_name": "yolov8n_relu6_face--640x640_quant_hailort_hailo8_1",
        "source": 1,  # Webcam index (0 is usually the default camera; adjust for your system)
        "display_name": "Webcam Feed",
    },
]


# Function to run inference on a video stream (video file or webcam)
def run_inference(model_name, source, inference_host_address, zoo_url, token, display_name):
    # Load the AI model
    model = dg.load_model(
        model_name=model_name,
        inference_host_address=inference_host_address,
        zoo_url=zoo_url,
        token=token,
    )

    # Run inference frame by frame and show each result in this stream's window
    with degirum_tools.Display(display_name) as output_display:
        for inference_result in degirum_tools.predict_stream(model, source):
            output_display.show(inference_result)

    print(f"Stream '{display_name}' has finished.")


# Create and start one thread per stream
threads = []
for config in configurations:
    thread = threading.Thread(
        target=run_inference,
        args=(
            config["model_name"],
            config["source"],
            inference_host_address,
            zoo_url,
            token,
            config["display_name"],
        ),
    )
    threads.append(thread)
    thread.start()

# Wait for all threads to finish
for thread in threads:
    thread.join()

print("All streams have been processed.")
```
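The script above starts one `threading.Thread` per configuration and then joins them. One caveat of that pattern is that an exception raised inside a thread's target (for example, a wrong model name or a webcam that cannot be opened) is reported by the thread but is not re-raised by `join()`. As a result, the main thread still prints "All streams have been processed." A minimal alternative sketch swaps the manual threads for the standard library's `concurrent.futures.ThreadPoolExecutor`, which surfaces such errors through `Future.result()`. To keep it runnable without PySDK or hardware, `fake_run_inference` is a hypothetical stand-in for `run_inference`. In the real script you would submit `run_inference` with its full argument list instead.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical stand-in for run_inference(): it "processes" a stream and
# fails when it has no source, so the sketch runs without PySDK.
def fake_run_inference(model_name, source, display_name):
    if source is None:
        raise RuntimeError(f"cannot open source for '{display_name}'")
    frames = 3  # pretend each stream yields three frames
    return f"{display_name}: {frames} frames with {model_name}"


configurations = [
    {"model_name": "model_a", "source": "video.mp4", "display_name": "Traffic Camera"},
    {"model_name": "model_b", "source": None, "display_name": "Webcam Feed"},
]


def run_all(configs):
    """Run one worker per stream and collect a result or an error per stream."""
    results, errors = {}, {}
    # One worker per stream: each stream occupies its worker for its whole lifetime
    with ThreadPoolExecutor(max_workers=len(configs)) as executor:
        futures = {
            executor.submit(
                fake_run_inference, c["model_name"], c["source"], c["display_name"]
            ): c["display_name"]
            for c in configs
        }
        for future, name in futures.items():
            try:
                # result() blocks until the worker finishes and re-raises its exception
                results[name] = future.result()
            except Exception as exc:
                errors[name] = exc
    return results, errors


results, errors = run_all(configurations)
for summary in results.values():
    print(summary)
for name, exc in errors.items():
    print(f"Stream '{name}' failed: {exc}")
```

`max_workers` is set to the number of streams on purpose. A stream keeps its worker busy until the source runs out, so a smaller pool would make later streams wait instead of running concurrently.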

How It Works

- Model Configuration:

  - Define a list of configurations, one per stream, specifying the model name, video source, and display name.

- Multithreading:

  - Each configuration is processed in its own thread, allowing multiple streams to run concurrently. The main thread then waits for all of them with `thread.join()`.

- Inference Execution:

  - The `run_inference` function loads the specified model using `dg.load_model` and processes the video source using `degirum_tools.predict_stream`.

- Result Display:

  - Each stream’s output is displayed in a dedicated window using `degirum_tools.Display`.
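In the script, each thread exits only when its source runs out of frames. The video file stream ends on its own, but a webcam feed has no natural end. One common way to coordinate shutdown is a shared `threading.Event` that every stream loop checks between frames. This is a general Python technique, not a PySDK feature. In this sketch, `fake_predict_stream` is a hypothetical generator standing in for `degirum_tools.predict_stream(model, source)`. An integer source yields frames indefinitely, like a webcam, and any other source yields a fixed five frames, like a video file.

```python
import itertools
import threading
import time


# Hypothetical stand-in for degirum_tools.predict_stream(model, source):
# an int (webcam index) never runs out; anything else yields 5 frames.
def fake_predict_stream(source):
    frames = itertools.count() if isinstance(source, int) else range(5)
    for frame_index in frames:
        time.sleep(0.01)  # simulate per-frame inference latency
        yield frame_index


def run_stream(source, display_name, stop_event, frame_counts):
    processed = 0
    for _result in fake_predict_stream(source):
        if stop_event.is_set():  # another thread asked all streams to stop
            break
        processed += 1  # in the real script: output_display.show(inference_result)
    frame_counts[display_name] = processed  # each thread writes only its own key
    print(f"Stream '{display_name}' has finished.")


stop_event = threading.Event()
frame_counts = {}
threads = [
    threading.Thread(target=run_stream, args=("Traffic.mp4", "Traffic Camera", stop_event, frame_counts)),
    threading.Thread(target=run_stream, args=(1, "Webcam Feed", stop_event, frame_counts)),
]
for thread in threads:
    thread.start()

threads[0].join()  # wait for the finite video file stream to end
stop_event.set()   # then tell the endless webcam stream to stop
for thread in threads:
    thread.join()

print(frame_counts)
```

The video file stream always reports 5 frames. The webcam count depends on timing, because that thread stops at the first frame it receives after the event is set.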

Applications

- Monitoring multiple cameras in real time for security and surveillance.

- Analyzing different video sources for smart infrastructure or retail applications.

- Demonstrating the parallel processing capabilities of DeGirum PySDK on various hardware setups.
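For multi-camera monitoring, the `configurations` list does not have to be written by hand. The following minimal sketch assumes every camera should run the same model. It generates one entry per source, using the keys the script's threads read (`model_name`, `source`, `display_name`). The helper `make_configurations` and the camera URLs are hypothetical placeholders. Whether a given URL scheme (for example RTSP) can be opened depends on the video backend behind `degirum_tools.predict_stream` on your system.

```python
def make_configurations(sources, model_name, label="Camera"):
    """Build one stream configuration per source, all using the same model."""
    configurations = []
    for index, source in enumerate(sources, start=1):
        configurations.append(
            {
                "model_name": model_name,
                "source": source,  # file path, URL, or integer webcam index
                # keep names unique so each stream gets its own display window
                "display_name": f"{label} {index}",
            }
        )
    return configurations


# Hypothetical camera sources, for illustration only
camera_sources = [
    "rtsp://192.0.2.10/stream1",
    "rtsp://192.0.2.11/stream1",
    0,
]
configurations = make_configurations(
    camera_sources, "yolov8n_relu6_coco--640x640_quant_hailort_hailo8_1"
)
for config in configurations:
    print(config["display_name"], "->", config["source"])
```

The resulting list can replace the hand-written `configurations` in the script unchanged, since the thread-creation loop only reads these three keys.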

Additional Resources

For more detailed examples and instructions on deploying models with Hailo hardware, visit the Hailo Examples Repository. This repository includes tailored scripts for optimizing AI workloads on edge devices.