LPR Systems: Background

A license plate recognition (LPR) system involves three essential stages, each critical for delivering accurate and reliable results. This guide focuses on the detection and recognition pipeline using YOLOv8 models, optimized for real-time applications.

License Plate Detection

The first step in an LPR system is detecting the presence and location of license plates in an image or video. The detection model outputs bounding boxes around license plates, along with confidence scores, indicating the likelihood of the detection being correct.

License Plate Cropping

After detecting license plates, the system isolates and prepares these regions for character recognition. The bounding boxes provided by the detection model are used to extract the plate regions from the original image. Plates are cropped precisely to focus only on the relevant area, eliminating distractions from the background.

License Plate Reading Using OCR

The cropped license plate images are passed to an OCR model, which extracts the alphanumeric characters present on the plates. We will use a YOLOv8 model adapted for OCR tasks in this guide. The detection results are sorted based on their left-to-right spatial arrangement, ensuring the output matches the plate’s reading order.

Stage 1: License Plate Detection

In the first stage, we run a license plate detection model on an input image to obtain the location of license plates along with confidence scores. We will use the below image as an example:

The code below illustrates how to load a license plate detection model, run inference on an image, and obtain the bounding boxes.

import degirum as dg, degirum_tools

import os

# choose inference host address

inference_host_address = "@cloud"

# inference_host_address = "@local"

# choose zoo_url

zoo_url = "degirum/models_hailort"

# zoo_url = "../models"

# set token

token = degirum_tools.get_token()

# token = '' # leave empty for local inference

# license plate detection model

lp_det_model_name = "yolov8n_relu6_lp--640x640_quant_hailort_hailo8l_1"

# image source

image_source = "../assets/Car.jpg"

# Load license plate detection model

lp_det_model = dg.load_model(

model_name=lp_det_model_name,

inference_host_address=inference_host_address,

zoo_url=zoo_url,

token=degirum_tools.get_token(),

overlay_color=[(255,255,0),(0,255,0)]

)

# run the model inference

detected_license_plates = lp_det_model(image_source)

print(detected_license_plates)

The results should look as below:

- bbox: [598.2862295231349, 590.4866805815361, 692.2712281276363, 636.6286038680815]

category_id: 0

label: Vehicle registration plate

score: 0.7370317578315735

We can visualize the detected license plates by overlaying the results onto the original image. DeGirum PySDK provides a convenient method inference_results.image_overlay where inference_results are the results returned by the model. Additionally, inference_results.image contains the original image and can be used to crop bounding boxes. We define a utility function to help visualize the results in a jupyter notebook environment.

import matplotlib.pyplot as plt

def display_images(images, title="Images", figsize=(15, 5)):

"""

Display a list of images in a single row using Matplotlib.

Parameters:

- images (list): List of images (NumPy arrays) to display.

- title (str): Title for the plot.

- figsize (tuple): Size of the figure.

"""

num_images = len(images)

fig, axes = plt.subplots(1, num_images, figsize=figsize)

if num_images == 1:

axes = [axes] # Make it iterable for a single image

for ax, image in zip(axes, images):

image_rgb = image[:, :, ::-1] # Convert BGR to RGB

ax.imshow(image_rgb)

ax.axis('off')

fig.suptitle(title, fontsize=16)

plt.tight_layout()

plt.show()

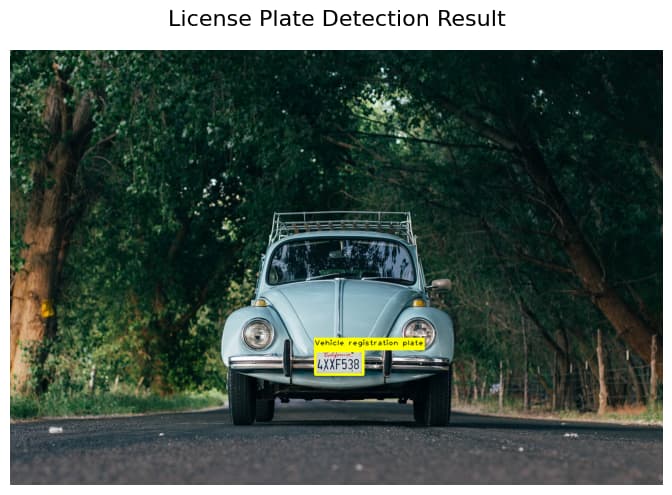

Using the above function, we can visualize the results of the face detection model.

display_images([detected_license_plates.image_overlay], title="License Plate Detection Result")

The output looks as below:

Stage 2: License Plate Cropping

The code below uses the detected bounding boxes to crop the image that can be used as an input to a license plate recognition recognition model.

def crop_images(image, results):

"""

Crops regions of interest (ROIs) from an image based on inference results.

Args:

image (numpy.ndarray): The input image as a NumPy array.

results (list of dict): A list of inference results, each containing:

- bbox (list of float): Bounding box in [x_min, y_min, x_max, y_max] format.

- category_id (int): Class ID (ignored in this function).

- label (str): Label of the detected object (ignored in this function).

- score (float): Confidence score (ignored in this function).

Returns:

list of numpy.ndarray: A list of cropped image regions.

"""

cropped_images = []

for result in results:

bbox = result.get('bbox')

if not bbox or len(bbox) != 4:

continue

# Convert bbox to integer pixel coordinates

x_min, y_min, x_max, y_max = map(int, bbox)

# Ensure the bounding box is within image bounds

x_min = max(0, x_min)

y_min = max(0, y_min)

x_max = min(image.shape[1], x_max)

y_max = min(image.shape[0], y_max)

# Crop the region of interest

cropped = image[y_min:y_max, x_min:x_max]

cropped_images.append(cropped)

return cropped_images

We can now visualize the cropped license plate(s) using the code below:

if detected_license_plates.results:

# List of cropped license plates

cropped_license_plates = crop_images(detected_license_plates.image, detected_license_plates.results)

# Display cropped license plates

display_images(cropped_license_plates, title="Cropped License Plates", figsize=(3, 2))

The resulting image looks as below:

Stage 3: License Plate Reading

The cropped license plate images can then be passed through an OCR model to read the license plate. A variety of models can be used for this purpose. In this guide, we will use a YOLOv8 model trained to recognize 10 digits and 26 letters in the English alphabet. The code below shows how to run the OCR model.

# license plate recognition model name

lp_rec_model_name = "yolov8n_relu6_lp_ocr--256x128_quant_hailort_hailo8l_1"

# loading the license plate recognition model

lp_rec_model = dg.load_model(

model_name=lp_rec_model_name,

inference_host_address=inference_host_address,

zoo_url=zoo_url,

token=degirum_tools.get_token(),

output_use_regular_nms=False,

output_confidence_threshold=0.1

)

# running the license plate recognition model on cropped license plate images

if detected_license_plates.results:

cropped_license_plates = crop_images(detected_license_plates.image, detected_license_plates.results)

for index, cropped_license_plate in enumerate(cropped_license_plates):

ocr_results = lp_rec_model.predict(cropped_license_plate)

print('License plate ', index, 'results')

print(ocr_results)

The results look as below:

License plate 0 results

- bbox: [51.80315942382812, 15.277219360351562, 62.83174365234375, 36.660669189453124]

category_id: 5

label: '5'

score: 0.6693987846374512

- bbox: [64.25210595703125, 15.429034240722656, 74.55282666015624, 36.775262084960936]

category_id: 3

label: '3'

score: 0.6693987846374512

- bbox: [3.9050979003906248, 15.847463256835937, 15.446136840820312, 37.02252612304687]

category_id: 4

label: '4'

score: 0.6693987846374512

- bbox: [16.167773071289062, 15.288739013671874, 27.34120227050781, 37.05546789550781]

category_id: 33

label: X

score: 0.6693987846374512

- bbox: [28.1813564453125, 15.265247680664062, 39.299054443359374, 37.21562841796875]

category_id: 33

label: X

score: 0.6693987846374512

- bbox: [39.95886975097656, 15.41164385986328, 51.054132080078126, 36.76698803710937]

category_id: 15

label: F

score: 0.6693987846374512

- bbox: [76.14064086914063, 15.345019104003907, 86.40907958984374, 36.35070825195312]

category_id: 8

label: '8'

score: 0.6693987846374512

For reading license plates, we expect the final result to be string of alphanumeric characters representing the license plate read from left to right. We can define a function that rearranges the detections as below:

def rearrange_detections(detections):

# Sort characters by leftmost x-coordinate

detections_sorted = sorted(detections, key=lambda det: det["bbox"][0])

# Concatenate labels to form the license plate string

return "".join([det["label"] for det in detections_sorted])

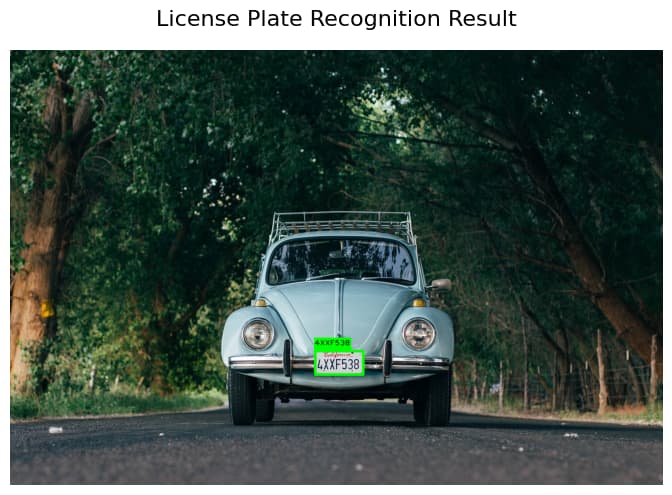

We can use the above function to rearrange the results of the OCR model, and then replace the label in license plate detection results with the OCR label so that we can visualize the final result easily. This is illustrated in the code below:

if detected_license_plates.results:

cropped_license_plates = crop_images(detected_license_plates.image, detected_license_plates.results)

for index, cropped_license_plate in enumerate(cropped_license_plates):

ocr_results = lp_rec_model.predict(cropped_license_plate)

ocr_label = rearrange_detections(ocr_results.results)

detected_license_plates.results[index]["label"] = ocr_label

display_images([detected_license_plates.image_overlay], title="License Plate Recognition Result")

The output looks as below:

Putting It All Together

We can combine all the code (including the utility functions) as below:

import degirum as dg, degirum_tools

import matplotlib.pyplot as plt

# utility function to display images

def display_images(images, title="Images", figsize=(15, 5)):

"""

Display a list of images in a single row using Matplotlib.

Parameters:

- images (list): List of images (NumPy arrays) to display.

- title (str): Title for the plot.

- figsize (tuple): Size of the figure.

"""

num_images = len(images)

fig, axes = plt.subplots(1, num_images, figsize=figsize)

if num_images == 1:

axes = [axes] # Make it iterable for a single image

for ax, image in zip(axes, images):

image_rgb = image[:, :, ::-1] # Convert BGR to RGB

ax.imshow(image_rgb)

ax.axis('off')

fig.suptitle(title, fontsize=16)

plt.tight_layout()

plt.show()

# utility function to crop images based on inference results

def crop_images(image, results):

"""

Crops regions of interest (ROIs) from an image based on inference results.

Args:

image (numpy.ndarray): The input image as a NumPy array.

results (list of dict): A list of inference results, each containing:

- bbox (list of float): Bounding box in [x_min, y_min, x_max, y_max] format.

- category_id (int): Class ID (ignored in this function).

- label (str): Label of the detected object (ignored in this function).

- score (float): Confidence score (ignored in this function).

Returns:

list of numpy.ndarray: A list of cropped image regions.

"""

cropped_images = []

for result in results:

bbox = result.get('bbox')

if not bbox or len(bbox) != 4:

continue

# Convert bbox to integer pixel coordinates

x_min, y_min, x_max, y_max = map(int, bbox)

# Ensure the bounding box is within image bounds

x_min = max(0, x_min)

y_min = max(0, y_min)

x_max = min(image.shape[1], x_max)

y_max = min(image.shape[0], y_max)

# Crop the region of interest

cropped = image[y_min:y_max, x_min:x_max]

cropped_images.append(cropped)

return cropped_images

# utility function to rearrange detections

def rearrange_detections(detections):

# Sort characters by leftmost x-coordinate

detections_sorted = sorted(detections, key=lambda det: det["bbox"][0])

# Concatenate labels to form the license plate string

return "".join([det["label"] for det in detections_sorted])

# choose inference host address

inference_host_address = "@cloud"

# inference_host_address = "@local"

# choose zoo_url

zoo_url = "degirum/models_hailort"

# zoo_url = "../models"

# set token

token = degirum_tools.get_token()

# token = '' # leave empty for local inference

# image source

image_source = "../assets/Car.jpg"

# model names

lp_det_model_name = "yolov8n_relu6_lp--640x640_quant_hailort_hailo8l_1"

lp_rec_model_name = "yolov8n_relu6_lp_ocr--256x128_quant_hailort_hailo8l_1"

# Load face detection and gender detection models

lp_det_model = dg.load_model(

model_name=lp_det_model_name,

inference_host_address=inference_host_address,

zoo_url=zoo_url,

token=degirum_tools.get_token(),

overlay_color=[(255,255,0),(0,255,0)]

)

lp_rec_model = dg.load_model(

model_name=lp_rec_model_name,

inference_host_address=inference_host_address,

zoo_url=zoo_url,

token=degirum_tools.get_token(),

output_use_regular_nms=False,

output_confidence_threshold=0.1

)

# Run license plate detection model

detected_license_plates = lp_det_model(image_source)

# run OCR model on cropped license plates

if detected_license_plates.results:

cropped_license_plates = crop_images(detected_license_plates.image, detected_license_plates.results)

for index, cropped_license_plate in enumerate(cropped_license_plates):

ocr_results = lp_rec_model.predict(cropped_license_plate)

ocr_label = rearrange_detections(ocr_results.results)

detected_license_plates.results[index]["label"] = ocr_label

# Display the final result

display_images([detected_license_plates.image_overlay], title="License Plate Recognition Result")

Closing Remarks

The YOLOv8n model used in this guide for license plate detection demonstrates good accuracy and robustness under diverse lighting conditions and camera angles. However, the YOLOv8n-based OCR model is still a work in progress. This is largely due to the variability in license plate formats worldwide, including differences in fonts, sizes, aspect ratios, and line configurations. With sufficient and diverse training data, we believe this approach can evolve into a highly practical solution. If you’re interested in contributing to this effort, particularly in data collection or model development, we’d love to hear from you.

For OCR, alternative models like PaddleOCR offer higher accuracy and greater robustness. These models excel at recognizing text across a wide range of scenarios but currently face challenges in being compiled for edge AI accelerators. We have successfully deployed PaddleOCR models using OpenVINO on Intel CPUs, GPUs, and NPUs, but further work is needed to make them compatible with additional hardware platforms.

Another option is the pre-trained LPRNet model for Hailo devices, which is pre-compiled for edge inference. While LPRNet performs well for numerical recognition, its scope is limited to recognizing only numeric license plates, restricting its applicability in regions with alphanumeric or multi-line plates.

Each approach has its strengths and limitations, and we continue to explore ways to enhance performance and compatibility. We welcome collaborations to expand these efforts and bring practical, high-performing solutions to real-world applications.