Hi Hailo Team,

I’m trying to run two YOLO-based HEF models at the same time on my Raspberry Pi 5 using the Hailo-8 AI Hat (M.2 interface).

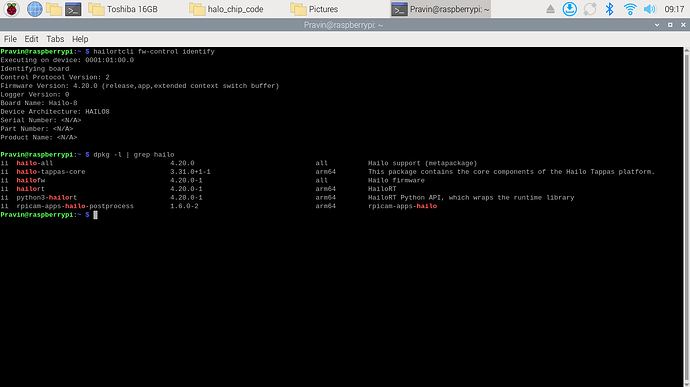

Setup info:

- Raspberry Pi 5 + Hailo-8
- Python 3.11 (in a virtual environment)
- Hailo packages installed: `hailo-all`, `hailort`, `python3-hailort`, `hailofw`, `hailo-tappas-core`
- HEF models: `model1.hef` and `model2.hef`, created using the Hailo Model Zoo (ONNX to HEF)
- Both models run individually using the `hailo-rpi5-examples` repo

What I’m trying to do:

I want to run both models in parallel, either using multiprocessing or threading.

Code Attempts:

- Multiprocessing approach

Based on Hailo's official tutorial, `HRT_3_Infer_Pipeline_Inference_Multiple_Models_Tutorial.ipynb` from the master branch of hailo-ai/hailort on GitHub (`hailort/hailort/libhailort/bindings/python/platform/hailo_tutorials/notebooks/`).

Code:

```python
import numpy as np
from multiprocessing import Process
from hailo_platform import (HEF, VDevice, HailoStreamInterface, InferVStreams, ConfigureParams,
                            InputVStreamParams, OutputVStreamParams, InputVStreams, OutputVStreams,
                            FormatType, HailoSchedulingAlgorithm)


# Define the function to run inference on the model
def infer(network_group, input_vstreams_params, output_vstreams_params, input_data):
    rep_count = 100
    with InferVStreams(network_group, input_vstreams_params, output_vstreams_params) as infer_pipeline:
        for i in range(rep_count):
            infer_results = infer_pipeline.infer(input_data)


def create_vdevice_and_infer(hef):
    # `hef` is the HEF object passed in through Process(args=(hef,)) below
    # Creating the VDevice target with scheduler enabled
    params = VDevice.create_params()
    params.scheduling_algorithm = HailoSchedulingAlgorithm.ROUND_ROBIN
    params.multi_process_service = True
    params.group_id = "SHARED"
    with VDevice(params) as target:
        configure_params = ConfigureParams.create_from_hef(hef=hef, interface=HailoStreamInterface.PCIe)
        model_name = hef.get_network_group_names()[0]
        batch_size = 2
        configure_params[model_name].batch_size = batch_size
        network_groups = target.configure(hef, configure_params)
        network_group = network_groups[0]

        # Create input and output virtual streams params
        input_vstreams_params = InputVStreamParams.make(network_group, format_type=FormatType.FLOAT32)
        output_vstreams_params = OutputVStreamParams.make(network_group, format_type=FormatType.UINT8)

        # Define dataset params
        input_vstream_info = hef.get_input_vstream_infos()[0]
        print(input_vstream_info.shape)
        image_height, image_width, channels = input_vstream_info.shape
        num_of_frames = 10
        low, high = 2, 20

        # Generate random dataset
        dataset = np.random.randint(low, high, (num_of_frames, image_height, image_width, channels)).astype(np.float32)
        input_data = {input_vstream_info.name: dataset}

        infer(network_group, input_vstreams_params, output_vstreams_params, input_data)


# Loading compiled HEFs:
first_hef_path = '/home/Pravin/halo_chip_code/hefs/model1.hef'
second_hef_path = '/home/Pravin/halo_chip_code/hefs/model2.hef'
first_hef = HEF(first_hef_path)
second_hef = HEF(second_hef_path)
hefs = [first_hef, second_hef]
infer_processes = []

# Configure network groups
for hef in hefs:
    # Create infer process
    infer_process = Process(target=create_vdevice_and_infer, args=(hef,))
    infer_processes.append(infer_process)

print('Starting inference on multiple models using scheduler')
infer_failed = False
for infer_process in infer_processes:
    infer_process.start()
for infer_process in infer_processes:
    infer_process.join()
    if infer_process.exitcode:
        infer_failed = True

if infer_failed:
    raise Exception("infer process failed")
print('Done inference')
```

Error:

```text
cb.py
Starting inference on multiple models using scheduler
(640, 640, 3)
(640, 640, 3)
[HailoRT] [error] CHECK_SUCCESS failed with status=HAILO_INVALID_ARGUMENT(2)
[HailoRT] [error] CHECK_SUCCESS failed with status=HAILO_INVALID_ARGUMENT(2)
[HailoRT] [error] CHECK_SUCCESS failed with status=HAILO_INVALID_ARGUMENT(2)
[HailoRT] [error] CHECK_SUCCESS failed with status=HAILO_INVALID_ARGUMENT(2)
[HailoRT] [error] CHECK_SUCCESS failed with status=HAILO_INVALID_ARGUMENT(2)
[HailoRT] [error] CHECK_SUCCESS failed with status=HAILO_INVALID_ARGUMENT(2)
Process Process-1:
Traceback (most recent call last):
  File "/usr/lib/python3.11/multiprocessing/process.py", line 314, in _bootstrap
    self.run()
  File "/usr/lib/python3.11/multiprocessing/process.py", line 108, in run
    self._target(*self._args, **self._kwargs)
  File "/home/Pravin/halo_chip_code/cb.py", line 45, in create_vdevice_and_infer
    infer(network_group, input_vstreams_params, output_vstreams_params, input_data)
  File "/home/Pravin/halo_chip_code/cb.py", line 10, in infer
    with InferVStreams(network_group, input_vstreams_params, output_vstreams_params) as infer_pipeline:
  File "/usr/lib/python3/dist-packages/hailo_platform/pyhailort/pyhailort.py", line 930, in __enter__
    self._infer_pipeline = _pyhailort.InferVStreams(self._configured_net_group._configured_network,
                           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
hailo_platform.pyhailort._pyhailort.HailoRTStatusException: 2
Process Process-2:
Traceback (most recent call last):
  File "/usr/lib/python3.11/multiprocessing/process.py", line 314, in _bootstrap
    self.run()
  File "/usr/lib/python3.11/multiprocessing/process.py", line 108, in run
    self._target(*self._args, **self._kwargs)
  File "/home/Pravin/halo_chip_code/cb.py", line 45, in create_vdevice_and_infer
    infer(network_group, input_vstreams_params, output_vstreams_params, input_data)
  File "/home/Pravin/halo_chip_code/cb.py", line 10, in infer
    with InferVStreams(network_group, input_vstreams_params, output_vstreams_params) as infer_pipeline:
  File "/usr/lib/python3/dist-packages/hailo_platform/pyhailort/pyhailort.py", line 930, in __enter__
    self._infer_pipeline = _pyhailort.InferVStreams(self._configured_net_group._configured_network,
                           ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
hailo_platform.pyhailort._pyhailort.HailoRTStatusException: 2
Traceback (most recent call last):
  File "/home/Pravin/halo_chip_code/cb.py", line 72, in <module>
    raise Exception("infer process failed")
Exception: infer process failed
```
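The script above hands each child process an `HEF` object and relies on the Linux `fork` start method to carry it into the child. A common `multiprocessing` pattern is to pass only the `.hef` path (a plain, picklable string), create every HailoRT object inside the child, and check each child's exit code individually so the log shows which model failed. The stdlib-only sketch below shows that structure; `load_and_infer` is a hypothetical placeholder for the `HEF(...)`, `VDevice(...)` and `InferVStreams(...)` calls, and its file-suffix check exists only so the sketch has something that can fail without Hailo hardware.

```python
import multiprocessing as mp


def load_and_infer(hef_path: str) -> None:
    # Hypothetical placeholder: in the real script, HEF(hef_path), VDevice(params)
    # and InferVStreams(...) would all be created here, inside the child process.
    if not hef_path.endswith(".hef"):
        raise ValueError(f"not a HEF file: {hef_path}")


def run_in_parallel(hef_paths):
    """Start one process per model and return {hef_path: exit code}."""
    # Pin "fork" (the Linux default on Python 3.11). Because the arguments are
    # plain strings, the same code would also work under "spawn".
    ctx = mp.get_context("fork")
    procs = {path: ctx.Process(target=load_and_infer, args=(path,)) for path in hef_paths}
    for proc in procs.values():
        proc.start()
    for proc in procs.values():
        proc.join()
    # A child whose target raises exits with code 1; a clean exit gives 0.
    return {path: proc.exitcode for path, proc in procs.items()}


if __name__ == "__main__":
    codes = run_in_parallel(["model1.hef", "model2.hef"])
    failed = [path for path, code in codes.items() if code != 0]
    if failed:
        raise SystemExit(f"inference failed for: {failed}")
    print("Done inference")
```

With this shape, a failure is reported per model path rather than as a single generic "infer process failed".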

- My own multithreaded version

I created a `VDevice()` and tried to call `create_network_group()` on it, but that failed (error below).

Code:

```python
import cv2
import time
import threading
import numpy as np
from hailo_platform import HEF, VDevice, HailoSchedulingAlgorithm, FormatType

# Configuration
models = [
    ("model1", "/home/Pravin/halo_chip_code/hefs/model1.hef", (640, 640)),
    ("model2", "/home/Pravin/halo_chip_code/hefs/model2.hef", (640, 640)),
]

shared_frames = [None] * len(models)
frame_lock = threading.Lock()
frame_ready = threading.Event()
stop_flag = threading.Event()


def preprocess(frame, input_shape):
    frame_resized = cv2.resize(frame, input_shape)
    frame_transposed = frame_resized.transpose(2, 0, 1)  # HWC to CHW
    input_data = frame_transposed[np.newaxis, ...].astype(np.uint8)
    return input_data


def run_model_thread(model_index, model_id, hef_path, input_shape, vdevice):
    try:
        print(f"[{model_id}] Initializing...")
        hef = HEF(hef_path)
        network_group = vdevice.create_network_group(hef)
        input_name = network_group.get_input_stream_infos()[0].name
        output_name = network_group.get_output_stream_infos()[0].name
        input_vstream = network_group.get_input_vstream(input_name, format_type=FormatType.UINT8)
        output_vstream = network_group.get_output_vstream(output_name)
        print(f"[{model_id}] Ready for inference.")

        while not stop_flag.is_set():
            frame_ready.wait()
            with frame_lock:
                frame = shared_frames[model_index]
                if frame is None:
                    continue
                input_data = frame.copy()
                frame_ready.clear()
            input_vstream.write(input_data)
            output_data = output_vstream.read()
            print(f"[{model_id}] Output shape: {output_data.shape}, max value: {np.max(output_data)}")
    except Exception as e:
        print(f"[{model_id}] Error: {e}")


def video_capture_loop(model_shapes):
    cap = cv2.VideoCapture(0)
    if not cap.isOpened():
        print("❌ Could not open video source")
        return
    try:
        while not stop_flag.is_set():
            ret, frame = cap.read()
            if not ret:
                break
            with frame_lock:
                for i, shape in enumerate(model_shapes):
                    shared_frames[i] = preprocess(frame, shape)
                frame_ready.set()
            cv2.imshow("Live Feed", frame)
            if cv2.waitKey(1) & 0xFF == ord('q'):
                stop_flag.set()
                break
    finally:
        cap.release()
        cv2.destroyAllWindows()
        stop_flag.set()
        frame_ready.set()


# Initialize shared virtual device
# vdevice = VDevice(device_count=1, scheduling_algorithm=HailoSchedulingAlgorithm.ROUND_ROBIN)
vdevice = VDevice()

# Launch model threads
threads = []
for i, (model_id, hef_path, input_shape) in enumerate(models):
    t = threading.Thread(target=run_model_thread, args=(i, model_id, hef_path, input_shape, vdevice))
    t.start()
    threads.append(t)

# Start video input loop
video_capture_loop([shape for _, _, shape in models])

# Wait for threads to finish
for t in threads:
    t.join()
print("✅ All threads complete. Inference stopped.")
```
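In the threaded script, both model threads wait on the same `frame_ready` event and each one calls `frame_ready.clear()` after reading its frame, so a `clear()` from one thread can swallow a notification the other thread has not seen yet. One way to avoid that shared coordination is a per-model "latest frame" mailbox: one `queue.Queue(maxsize=1)` per thread, where the capture loop replaces any stale frame with the newest one. This is a stdlib-only sketch that assumes a single producer thread; `infer_fn` is a hypothetical stand-in for the per-model HailoRT inference call, and `sum` is used as the default only so the sketch runs without hardware.

```python
import queue
import threading

STOP = object()  # sentinel telling a worker thread to exit


def put_latest(inbox: queue.Queue, item) -> None:
    """Put item into a maxsize=1 queue, dropping any frame still waiting there.

    Safe with a single producer: consumers only remove items, so after the
    get_nowait() below there is always room for put_nowait().
    """
    try:
        inbox.get_nowait()  # discard the stale frame, if any
    except queue.Empty:
        pass
    inbox.put_nowait(item)


def worker(name, inbox, infer_fn, results, results_lock):
    while True:
        frame = inbox.get()  # blocks until this model has a frame of its own
        if frame is STOP:
            break
        output = infer_fn(frame)  # hypothetical per-model inference call
        with results_lock:
            results.append((name, output))


def run_pipeline(frames, model_names=("model1", "model2"), infer_fn=sum):
    inboxes = {name: queue.Queue(maxsize=1) for name in model_names}
    results, results_lock = [], threading.Lock()
    threads = [
        threading.Thread(target=worker, args=(name, inboxes[name], infer_fn, results, results_lock))
        for name in model_names
    ]
    for t in threads:
        t.start()
    for frame in frames:  # plays the role of the capture loop
        for inbox in inboxes.values():
            put_latest(inbox, frame)  # every model gets the newest frame
    for inbox in inboxes.values():
        put_latest(inbox, STOP)
    for t in threads:
        t.join()
    return results


if __name__ == "__main__":
    for name, value in run_pipeline([[i, i] for i in range(5)]):
        print(name, value)
```

Each model thread may skip frames when it is slower than the camera, but it never blocks the capture loop and never consumes another model's notification.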

Error:

```text
[model1] Initializing...
[model2] Initializing...
[model1] Error: 'VDevice' object has no attribute 'create_network_group'
[model2] Error: 'VDevice' object has no attribute 'create_network_group'
[ WARN:0@0.685] global ./modules/videoio/src/cap_gstreamer.cpp (2401) handleMessage OpenCV | GStreamer warning: Embedded video playback halted; module v4l2src0 reported: Failed to allocate required memory.
[ WARN:0@0.686] global ./modules/videoio/src/cap_gstreamer.cpp (1356) open OpenCV | GStreamer warning: unable to start pipeline
[ WARN:0@0.686] global ./modules/videoio/src/cap_gstreamer.cpp (862) isPipelinePlaying OpenCV | GStreamer warning: GStreamer: pipeline have not been created
✅ All threads complete. Inference stopped.
```

Screenshots:

- Hailo device and driver info (attached)

- HEF model folder (`model1.hef`, `model2.hef`)

What I need help with:

- How can I correctly run two models in parallel on a single Hailo-8 with a Raspberry Pi 5?
- Is `VDevice` the correct way to run multiple models in parallel?
- Why does `create_network_group()` not work on `VDevice()`?
- Is there a working code example or official guidance for this setup?
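One more detail in the threaded attempt: the first script printed the HEF input shape as `(640, 640, 3)` (height, width, channels), whereas `preprocess()` in the threaded script transposes each frame to channels-first (`HWC to CHW`). If the model expects the layout the HEF reports (an assumption to confirm with `hef.get_input_vstream_infos()[0].shape`), the frame can stay channels-last. A numpy-only sketch; `resize_nearest` and `preprocess_hwc` are hypothetical helpers, with `resize_nearest` standing in for `cv2.resize` so the example runs without OpenCV.

```python
import numpy as np


def resize_nearest(frame: np.ndarray, width: int, height: int) -> np.ndarray:
    """Nearest-neighbour resize of an HxWxC image (stand-in for cv2.resize)."""
    src_h, src_w = frame.shape[:2]
    rows = np.arange(height) * src_h // height  # source row for each output row
    cols = np.arange(width) * src_w // width    # source column for each output column
    return frame[rows[:, None], cols]


def preprocess_hwc(frame: np.ndarray, input_shape: tuple) -> np.ndarray:
    """Resize to the HEF input shape, keep channels last, add a batch axis."""
    height, width, channels = input_shape
    if frame.ndim != 3 or frame.shape[2] != channels:
        raise ValueError(f"expected an HxWx{channels} frame, got {frame.shape}")
    resized = resize_nearest(frame, width, height)
    # Result has shape (1, height, width, channels) and is contiguous uint8
    return np.ascontiguousarray(resized[np.newaxis, ...], dtype=np.uint8)


if __name__ == "__main__":
    camera_frame = np.zeros((480, 640, 3), dtype=np.uint8)  # dummy 640x480 frame
    batch = preprocess_hwc(camera_frame, (640, 640, 3))
    print(batch.shape, batch.dtype)  # (1, 640, 640, 3) uint8
```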

Thanks for your support!

Pravin kumar R

Additional screenshots: