Hi,

I retrained my yolov8m model in the Hailo Suite Docker environment, but when deploying it on my Raspberry Pi 5, I encountered this error:

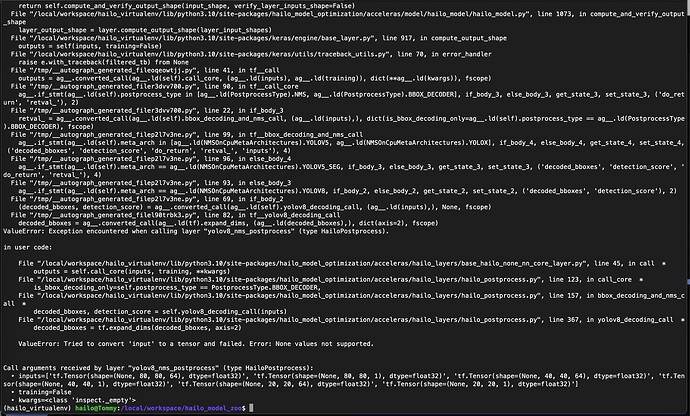

The solution seems to be to install the older DFC version, 3.27. I installed it, but when compiling the retrained model, a new error arose:

```
[info] Translation started on ONNX model yolov8m
[info] Restored ONNX model yolov8m (completion time: 00:00:01.30)
[info] Extracted ONNXRuntime meta-data for Hailo model (completion time: 00:00:02.94)
[info] NMS structure of yolov8 (or equivalent architecture) was detected.
[info] In order to use HailoRT post-processing capabilities, these end node names should be used: /model.22/cv2.0/cv2.0.2/Conv /model.22/cv3.0/cv3.0.2/Conv /model.22/cv2.1/cv2.1.2/Conv /model.22/cv3.1/cv3.1.2/Conv /model.22/cv2.2/cv2.2.2/Conv /model.22/cv3.2/cv3.2.2/Conv.
[info] Start nodes mapped from original model: 'images': 'yolov8m/input_layer1'.
[info] End nodes mapped from original model: '/model.22/cv2.0/cv2.0.2/Conv', '/model.22/cv3.0/cv3.0.2/Conv', '/model.22/cv2.1/cv2.1.2/Conv', '/model.22/cv3.1/cv3.1.2/Conv', '/model.22/cv2.2/cv2.2.2/Conv', '/model.22/cv3.2/cv3.2.2/Conv'.
[info] Translation completed on ONNX model yolov8m (completion time: 00:00:05.00)
[info] Saved HAR to: /local/shared_with_docker/yolov8m.har
<Hailo Model Zoo INFO> Preparing calibration data...
[info] Loading model script commands to yolov8m from /local/workspace/hailo_model_zoo/hailo_model_zoo/cfg/alls/generic/yolov8m.alls
[info] Loading model script commands to yolov8m from string
Traceback (most recent call last):
  File "/local/workspace/hailo_virtualenv/bin/hailomz", line 33, in <module>
    sys.exit(load_entry_point('hailo-model-zoo', 'console_scripts', 'hailomz')())
  File "/local/workspace/hailo_model_zoo/hailo_model_zoo/main.py", line 122, in main
    run(args)
  File "/local/workspace/hailo_model_zoo/hailo_model_zoo/main.py", line 111, in run
    return handlers[args.command](args)
  File "/local/workspace/hailo_model_zoo/hailo_model_zoo/main_driver.py", line 250, in compile
    _ensure_optimized(runner, logger, args, network_info)
  File "/local/workspace/hailo_model_zoo/hailo_model_zoo/main_driver.py", line 91, in _ensure_optimized
    optimize_model(
  File "/local/workspace/hailo_model_zoo/hailo_model_zoo/core/main_utils.py", line 319, in optimize_model
    optimize_full_precision_model(runner, calib_feed_callback, logger, model_script, resize, input_conversion, classes)
  File "/local/workspace/hailo_model_zoo/hailo_model_zoo/core/main_utils.py", line 305, in optimize_full_precision_model
    runner.optimize_full_precision(calib_data=calib_feed_callback)
  File "/local/workspace/hailo_virtualenv/lib/python3.8/site-packages/hailo_sdk_common/states/states.py", line 16, in wrapped_func
    return func(self, *args, **kwargs)
  File "/local/workspace/hailo_virtualenv/lib/python3.8/site-packages/hailo_sdk_client/runner/client_runner.py", line 1597, in optimize_full_precision
    self._optimize_full_precision(calib_data=calib_data, data_type=data_type)
  File "/local/workspace/hailo_virtualenv/lib/python3.8/site-packages/hailo_sdk_client/runner/client_runner.py", line 1600, in _optimize_full_precision
    self._sdk_backend.optimize_full_precision(calib_data=calib_data, data_type=data_type)
  File "/local/workspace/hailo_virtualenv/lib/python3.8/site-packages/hailo_sdk_client/sdk_backend/sdk_backend.py", line 1284, in optimize_full_precision
    model, params = self._apply_model_modification_commands(model, params, update_model_and_params)
  File "/local/workspace/hailo_virtualenv/lib/python3.8/site-packages/hailo_sdk_client/sdk_backend/sdk_backend.py", line 1200, in _apply_model_modification_commands
    model, params = command.apply(model, params, hw_consts=self.hw_arch.consts)
  File "/local/workspace/hailo_virtualenv/lib/python3.8/site-packages/hailo_sdk_client/sdk_backend/script_parser/nms_postprocess_command.py", line 323, in apply
    self._update_config_file(hailo_nn)
  File "/local/workspace/hailo_virtualenv/lib/python3.8/site-packages/hailo_sdk_client/sdk_backend/script_parser/nms_postprocess_command.py", line 456, in _update_config_file
    self._update_config_layers(hailo_nn)
  File "/local/workspace/hailo_virtualenv/lib/python3.8/site-packages/hailo_sdk_client/sdk_backend/script_parser/nms_postprocess_command.py", line 498, in _update_config_layers
    self._set_yolo_config_layers(hailo_nn)
  File "/local/workspace/hailo_virtualenv/lib/python3.8/site-packages/hailo_sdk_client/sdk_backend/script_parser/nms_postprocess_command.py", line 533, in _set_yolo_config_layers
    raise AllocatorScriptParserException("Cannot infer bbox conv layers automatically. "
hailo_sdk_client.sdk_backend.sdk_backend_exceptions.AllocatorScriptParserException: Cannot infer bbox conv layers automatically. Please specify the bbox layer in the json configuration file
```

Hi @JanDev,

Thanks for opening a new topic for your issue.

Could you please share the NMS JSON lines that you used for the optimization?

Do you mean the cfg/base json file?

I used a fresh installation and only installed the older compiler. It works fine with 3.28 but not with 3.27.

No, I mean the JSON used for the NMS configuration, which is passed as a path in the model script (the .alls file). If you are using the default Model Zoo model script, then this is the default NMS JSON.

The error you see happens because the JSON you are using lacks the bbox conv layer names.
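One way to check a JSON for this problem is to load it and list any box decoder entries that do not name their conv layers. The sketch below assumes the YOLOv8 NMS config keeps its decoders in a `bbox_decoders` list whose entries use `reg_layer` (box regression) and `cls_layer` (class scores) keys; those key names are an assumption, so compare them with your own file before relying on the result. It uses `typing.Dict`/`List` rather than built-in generics because the Docker environment in the traceback runs Python 3.8.

```python
import json
from typing import Any, Dict, List

# ASSUMPTION: the YOLOv8 NMS config lists its decoders under "bbox_decoders",
# and each decoder names its box-regression conv in "reg_layer" and its
# class-score conv in "cls_layer". Check these keys against your own JSON.
REQUIRED_KEYS = ("reg_layer", "cls_layer")


def find_missing_layer_names(config: Dict[str, Any]) -> List[str]:
    """Return a list of problems for decoders that lack layer names."""
    decoders = config.get("bbox_decoders")
    if not decoders:
        return ["no 'bbox_decoders' entries found"]
    problems = []
    for i, decoder in enumerate(decoders):
        for key in REQUIRED_KEYS:
            # A missing key, None, or an empty string all count as unspecified.
            if not decoder.get(key):
                problems.append(f"bbox_decoders[{i}] has no '{key}'")
    return problems


def check_nms_json(path: str) -> List[str]:
    """Load an NMS JSON file from disk and report missing layer names."""
    with open(path, encoding="utf-8") as f:
        return find_missing_layer_names(json.load(f))
```

Pass `check_nms_json` the JSON path referenced in the model script (the traceback shows it is processed by `nms_postprocess_command.py`); an empty list means every decoder names both layers.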

What is the command that you are using for the compilation? Did you change any config files (yaml or json)?

The thing is that I didn't change anything, so all JSON and YAML files are the originals. The command I use for the compilation is:

```
hailomz compile --ckpt /local/shared_with_docker/conv.onnx --calib-path /local/shared_with_docker/StateMix --yaml /local/workspace/hailo_model_zoo/hailo_model_zoo/cfg/networks/yolov8m.yaml --classes 8
```

Hi @JanDev,

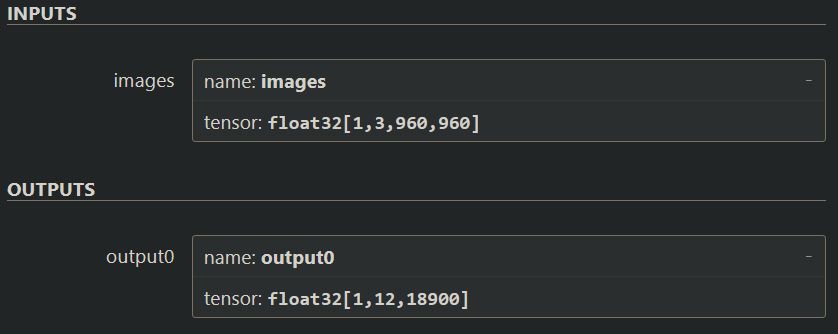

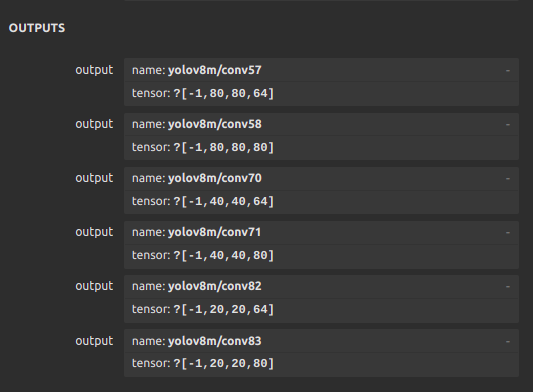

Could you please check that the model’s outputs are as follows?

To check, you can open the parsed model with https://netron.app/.
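As a complement to Netron, the six end node names printed in the DFC log above can be checked programmatically against the node names in the exported ONNX model. This is a minimal sketch: the expected names are taken from that log line, and a model whose detection head was modified may use different names.

```python
from typing import Iterable, List

# The six end nodes reported in the DFC log for YOLOv8: for each of the
# three scales, a "cv2" conv (box regression) and a "cv3" conv (class scores).
EXPECTED_END_NODES = [
    f"/model.22/{branch}.{scale}/{branch}.{scale}.2/Conv"
    for scale in range(3)
    for branch in ("cv2", "cv3")
]


def missing_end_nodes(node_names: Iterable[str]) -> List[str]:
    """Return the expected end nodes that do not appear in node_names."""
    present = set(node_names)
    return [name for name in EXPECTED_END_NODES if name not in present]
```

If the `onnx` package is installed, the node names can be collected with `[n.name for n in onnx.load("/local/shared_with_docker/conv.onnx").graph.node]` and passed to `missing_end_nodes`; an empty result means all six convs exist in the graph.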

And just to confirm, the model was re-trained with our re-training docker, right?

Hi!

Sorry for the delay. I can't upload the HEF model to the website; it doesn't seem to support that format.

And yes, I did use the re-training docker image.

By the way, in case it helps, here is the output of the ONNX model from DFC 3.28:

Were you able to solve it? I'm facing a similar issue when trying to run:

```
hailomz compile --ckpt /local/shared_with_docker/best.onnx --calib-path /local/shared_with_docker/licence-plate/valid/images --yaml hailo_model_zoo/cfg/networks/yolov8s.yaml
```
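One quick sanity check for a command like this is that the `--calib-path` directory really contains image files. The sketch below only lists files with a few common image extensions (an assumed set, adjust it to your dataset); it does not diagnose the compiler error itself, and whether an empty or wrong calibration folder is related to it is not confirmed in this thread.

```python
from pathlib import Path
from typing import List

# ASSUMPTION: a small set of common image extensions; extend as needed.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def list_calibration_images(calib_dir: str) -> List[Path]:
    """Return image files directly inside calib_dir, sorted by path."""
    root = Path(calib_dir)
    if not root.is_dir():
        raise FileNotFoundError(f"calibration path is not a directory: {calib_dir}")
    # Only regular files whose (case-insensitive) suffix looks like an image.
    return sorted(
        p for p in root.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )
```

For example, `len(list_calibration_images("/local/shared_with_docker/licence-plate/valid/images"))` should be well above zero before compiling.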

![Screenshot 2024-12-04 at 00.06.01|690x341](upload://dca0WgKKKtsBMRcGl98wVwwwQLM.png)

But after running the command, I get this error:

```
ValueError: Tried to convert 'input' to a tensor and failed. Error: None values not supported.
```