I was able to produce a hailo_sdk.client.log that exits at a point very similar to yours. Here’s mine:

2025-07-01 13:41:25,301 - INFO - parser.py:158 - Translation started on ONNX model yolov8s

2025-07-01 13:41:25,528 - INFO - parser.py:193 - Restored ONNX model yolov8s (completion time: 00:00:00.23)

2025-07-01 13:41:26,081 - INFO - parser.py:216 - Extracted ONNXRuntime meta-data for Hailo model (completion time: 00:00:00.78)

2025-07-01 13:44:48,227 - INFO - parser.py:158 - Translation started on ONNX model yolov8s

2025-07-01 13:44:48,412 - INFO - parser.py:193 - Restored ONNX model yolov8s (completion time: 00:00:00.18)

2025-07-01 13:44:48,939 - INFO - parser.py:216 - Extracted ONNXRuntime meta-data for Hailo model (completion time: 00:00:00.71)

2025-07-01 13:45:10,429 - INFO - parser.py:158 - Translation started on ONNX model yolov8s

2025-07-01 13:45:10,604 - INFO - parser.py:193 - Restored ONNX model yolov8s (completion time: 00:00:00.17)

2025-07-01 13:45:11,092 - INFO - parser.py:216 - Extracted ONNXRuntime meta-data for Hailo model (completion time: 00:00:00.66)

2025-07-01 13:45:49,196 - INFO - parser.py:158 - Translation started on ONNX model yolov8s

2025-07-01 13:45:49,364 - INFO - parser.py:193 - Restored ONNX model yolov8s (completion time: 00:00:00.17)

2025-07-01 13:45:49,984 - INFO - parser.py:216 - Extracted ONNXRuntime meta-data for Hailo model (completion time: 00:00:00.79)

2025-07-01 13:45:50,804 - INFO - onnx_translator.py:2482 - NMS structure of yolov8 (or equivalent architecture) was detected.

2025-07-01 13:45:50,804 - INFO - onnx_translator.py:2484 - In order to use HailoRT post-processing capabilities, these end node names should be used: /model.22/cv2.0/cv2.0.2/Conv /model.22/cv3.0/cv3.0.2/Conv /model.22/cv2.1/cv2.1.2/Conv /model.22/cv3.1/cv3.1.2/Conv /model.22/cv2.2/cv2.2.2/Conv /model.22/cv3.2/cv3.2.2/Conv.

2025-07-01 13:45:50,881 - INFO - parser.py:373 - Start nodes mapped from original model: 'images': 'yolov8s/input_layer1'.

2025-07-01 13:45:50,881 - INFO - parser.py:374 - End nodes mapped from original model: '/model.22/cv2.0/cv2.0.2/Conv', '/model.22/cv3.0/cv3.0.2/Conv', '/model.22/cv2.1/cv2.1.2/Conv', '/model.22/cv3.1/cv3.1.2/Conv', '/model.22/cv2.2/cv2.2.2/Conv', '/model.22/cv3.2/cv3.2.2/Conv'.

2025-07-01 13:45:50,957 - INFO - parser.py:288 - Translation completed on ONNX model yolov8s (completion time: 00:00:01.76)

2025-07-01 13:45:52,218 - INFO - client_runner.py:1831 - Saved HAR to: /home/wsl/yolov8s.har

2025-07-01 13:45:52,811 - INFO - client_runner.py:476 - Loading model script commands to yolov8s from /home/wsl/hailo_model_zoo-2.13/hailo_model_zoo/cfg/alls/generic/yolov8s.alls

2025-07-01 13:45:52,856 - INFO - client_runner.py:479 - Loading model script commands to yolov8s from string

2025-07-01 13:45:53,919 - IMPORTANT - sdk_backend.py:1009 - Starting Model Optimization

2025-07-01 13:47:03,698 - IMPORTANT - sdk_backend.py:1050 - Model Optimization is done

2025-07-01 13:47:04,759 - INFO - client_runner.py:1831 - Saved HAR to: /home/wsl/yolov8s.har

2025-07-01 13:47:04,762 - INFO - client_runner.py:476 - Loading model script commands to yolov8s from /home/wsl/hailo_model_zoo-2.13/hailo_model_zoo/cfg/alls/generic/yolov8s.alls

2025-07-01 13:47:04,824 - INFO - commands.py:4064 - ParsedPerformanceParam command, setting optimization_level(max=2)

2025-07-01 13:47:04,825 - INFO - client_runner.py:479 - Appending model script commands to yolov8s from string

2025-07-01 13:47:04,835 - INFO - commands.py:4064 - ParsedPerformanceParam command, setting optimization_level(max=2)

2025-07-01 16:43:01,918 - INFO - client_runner.py:1831 - Saved HAR to: /home/wsl/yolov8s.har

2025-07-01 17:02:34,806 - INFO - parser.py:158 - Translation started on ONNX model yolov8s

2025-07-01 17:02:34,965 - INFO - parser.py:193 - Restored ONNX model yolov8s (completion time: 00:00:00.16)

2025-07-01 17:02:35,634 - INFO - parser.py:216 - Extracted ONNXRuntime meta-data for Hailo model (completion time: 00:00:00.83)

2025-07-01 17:02:36,579 - INFO - onnx_translator.py:2482 - NMS structure of yolov8 (or equivalent architecture) was detected.

2025-07-01 17:02:36,579 - INFO - onnx_translator.py:2484 - In order to use HailoRT post-processing capabilities, these end node names should be used: /model.22/cv2.0/cv2.0.2/Conv /model.22/cv3.0/cv3.0.2/Conv /model.22/cv2.1/cv2.1.2/Conv /model.22/cv3.1/cv3.1.2/Conv /model.22/cv2.2/cv2.2.2/Conv /model.22/cv3.2/cv3.2.2/Conv.

2025-07-01 17:02:36,694 - INFO - parser.py:373 - Start nodes mapped from original model: 'images': 'yolov8s/input_layer1'.

2025-07-01 17:02:36,694 - INFO - parser.py:374 - End nodes mapped from original model: '/model.22/cv2.0/cv2.0.2/Conv', '/model.22/cv3.0/cv3.0.2/Conv', '/model.22/cv2.1/cv2.1.2/Conv', '/model.22/cv3.1/cv3.1.2/Conv', '/model.22/cv2.2/cv2.2.2/Conv', '/model.22/cv3.2/cv3.2.2/Conv'.

2025-07-01 17:02:36,967 - INFO - parser.py:288 - Translation completed on ONNX model yolov8s (completion time: 00:00:02.16)

2025-07-01 17:02:38,331 - INFO - client_runner.py:1831 - Saved HAR to: /root/yolov8s.har

2025-07-01 17:02:38,996 - INFO - client_runner.py:476 - Loading model script commands to yolov8s from /home/wsl/hailo_model_zoo-2.13/hailo_model_zoo/cfg/alls/generic/yolov8s.alls

2025-07-01 17:02:39,043 - INFO - client_runner.py:479 - Loading model script commands to yolov8s from string

2025-07-01 17:02:42,257 - IMPORTANT - sdk_backend.py:1009 - Starting Model Optimization

2025-07-01 17:04:00,876 - IMPORTANT - sdk_backend.py:1050 - Model Optimization is done

2025-07-01 17:04:02,056 - INFO - client_runner.py:1831 - Saved HAR to: /root/yolov8s.har

2025-07-01 17:04:02,061 - INFO - client_runner.py:476 - Loading model script commands to yolov8s from /home/wsl/hailo_model_zoo-2.13/hailo_model_zoo/cfg/alls/generic/yolov8s.alls

2025-07-01 17:04:02,109 - INFO - commands.py:4064 - ParsedPerformanceParam command, setting optimization_level(max=2)

2025-07-01 17:04:02,110 - INFO - client_runner.py:479 - Appending model script commands to yolov8s from string

2025-07-01 17:04:02,118 - INFO - commands.py:4064 - ParsedPerformanceParam command, setting optimization_level(max=2)

2025-07-01 17:04:02,190 - ERROR - hailo_tools_runner.py:231 - PermissionError: [Errno 13] Permission denied: '/tmp/reflection.alls'

Notice how the very last line is different:

2025-07-01 17:04:02,190 - ERROR - hailo_tools_runner.py:231 - PermissionError: [Errno 13] Permission denied: '/tmp/reflection.alls'

To reproduce this error, I ran `sudo su`, activated the venv that I had built under my wsl user account, and then ran the `hailomz compile` command. The error persisted until I ran `rm -f /tmp/reflection.alls`. After removing that temp file, I was able to compile the HEF successfully.

As such, try running `rm -f /tmp/reflection.alls` and then rerun your build command. Maybe even wipe out the entire /tmp folder.

I got the permission error because the reflection.alls in my temp directory had been created by my wsl user account inside WSL, so root did not have permission to work with that file.

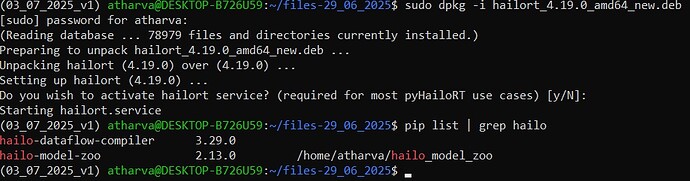
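If you want to check for this situation before deleting anything, here is a minimal Python sketch that reports who owns /tmp/reflection.alls and whether that owner is the user you are currently running as. The helper names `describe_stale_file` and `remove_if_present` are hypothetical and are not part of any Hailo tool; the sketch uses only the Python standard library and assumes a Linux or WSL environment. One plausible reason root is refused here is the kernel's `fs.protected_regular` setting for sticky directories like /tmp, but that is an assumption on my part, not something the log confirms.

```python
import os
import pwd
import stat


def describe_stale_file(path):
    """Return None if path does not exist, else a dict describing its owner."""
    try:
        info = os.stat(path)
    except FileNotFoundError:
        return None
    try:
        owner = pwd.getpwuid(info.st_uid).pw_name
    except KeyError:
        owner = str(info.st_uid)  # uid without a passwd entry
    return {
        "owner": owner,
        "owned_by_current_user": info.st_uid == os.geteuid(),
        "mode": stat.filemode(info.st_mode),
    }


def remove_if_present(path):
    """Rough equivalent of `rm -f path`: delete it, ignore a missing file."""
    try:
        os.remove(path)
        return True
    except FileNotFoundError:
        return False


if __name__ == "__main__":
    target = "/tmp/reflection.alls"  # path taken from the error message
    report = describe_stale_file(target)
    if report is None:
        print(f"{target} does not exist; nothing to clean up")
    elif report["owned_by_current_user"]:
        print(f"{target} is yours ({report['mode']}); probably not the culprit")
    else:
        print(f"{target} belongs to {report['owner']} ({report['mode']}); "
              f"try: rm -f {target}")
```

The script only reports by default. Calling `remove_if_present("/tmp/reflection.alls")` does the same job as the `rm -f` command, and it needs to be run as a user that is allowed to delete the file.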

The environment was copied into /root/ from my home directory without further modifications:

(hailodfc) root@TYANAPRIL232024:~# pip list | grep hailo

hailo-dataflow-compiler 3.29.0

hailo-model-zoo 2.13.0 /home/wsl/hailo_model_zoo-2.13

hailort 4.19.0