I’ve tested this using both hailomz and the dfc and experienced the same issue - complete degradation of the finetuned yolov5s.onnx model. The target hardware is hailo8-L

I more or less followed this guide on retraining yolov5 with a slight variation in that I only used 2 classes, not 3.

My yaml looked like below - I moved background to the end of the list (unlike shown in the guide) because it seemed to be causing some conflicts during training.

train: /workspace/local/datasets/coco-2017/train/images # train images (relative to 'path') 1500 images

val: /workspace/local/datasets/coco-2017/val/images # val images (relative to 'path') 1500 images

# number of classes

nc: 3

# class names

names: ['person', 'car', 'background']

There were some extra steps not mentioned in the guide, such as editing the alls and nms config, but overall the coco ‘finetuned’ model worked, best.onnx was getting around 45-50 mAP50 on the 2-class COCO subset val data.

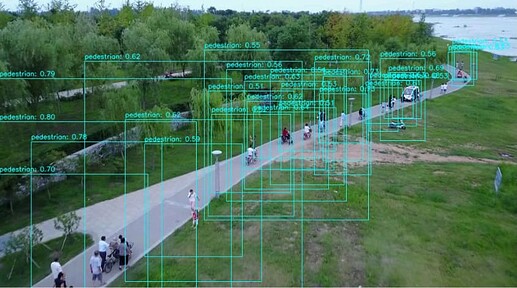

Once int8 optimised I plotted the boxes on some images from visdrone2019 (since that is the end goal) and I got some valid detections (though noticed if I ignored the conf score I’d get huge boxes spanning the entie image, but only at <<0.05 conf)

Since it wasn’t finetuned on that dataset, I wasn’t expecting great results but it seems to work at least - so I simply adapted the dataset yaml to the VisDrone subset. Then finetuned on VisDrone instead of the coco subset:

/workspace/yolov5# python train.py --img 640 --batch 16 --epochs 50 --data ../local/data_files/VisDrone.yaml --weights yolov5s.pt --cfg models/yolov5s.yaml

this is the output - complete degradation it seems.

I’m quite puzzled as to how I can get some reasonable results with the quantized coco-retrained model, yet the VisDrone-retrained model is completely lobotomized even at FP-Optimization.

Has anyone here any experience with VisDrone, or more generally small object detection and YOLOv5 (or any other model) that suffered such degradation which was absent in the same model for a different dataset?

Any suggestions on next steps?

I explored pretty much all of the options suggested in the docs as follows:

-

- Make sure there are at least 1024 images in the calibration dataset and machine with a GPU.

- Make sure there are at least 1024 images in the calibration dataset and machine with a GPU.

-

- Validate the accuracy of the model using SDK_FP_OPTIMIZED emulator to ensure pre and post processing are used correctly.

- FP optimized is also degraded much the same way

- FP optimized is also degraded much the same way

- Validate the accuracy of the model using SDK_FP_OPTIMIZED emulator to ensure pre and post processing are used correctly.

-

- Usage of BatchNormalization

- nothing in the docs on this, but I can see in the model arch there are BatchNorm nodes.

- nothing in the docs on this, but I can see in the model arch there are BatchNorm nodes.

- Usage of BatchNormalization

-

- Run the layer noise analysis tool to identify the source of degradation.

my entire model came in under 10dB, I still tried to ‘fix’ the worse performing layers, and ran again, which made the entire model even worse than before.

my entire model came in under 10dB, I still tried to ‘fix’ the worse performing layers, and ran again, which made the entire model even worse than before.

- Run the layer noise analysis tool to identify the source of degradation.

-

- If you have used compression_level, lower its value

haven’t tried this yet

haven’t tried this yet

- If you have used compression_level, lower its value

-

- Configure higher optimization_level in the model script

- level 4 is just as bad

- level 4 is just as bad

- Configure higher optimization_level in the model script

-

- Configure 16-bit output.

- seems pointless given FP-Optimized results are as bad

- seems pointless given FP-Optimized results are as bad

- Configure 16-bit output.

-

- Configure 16-bit on specific layers that are sensitive for quantization. Note that if the activation function is

- as above

- as above

- Configure 16-bit on specific layers that are sensitive for quantization. Note that if the activation function is

-

- Try to run with activation clipping

- again, this does nothing to improve it

- again, this does nothing to improve it

- Try to run with activation clipping

-

- Use more data and longer optimization process in Finetune

- I’m using

- I’m using post_quantization_optimization(finetune, policy=enabled, learning_rate=0.0001, epochs=8, dataset_size=4000)

- Use more data and longer optimization process in Finetune

-

- Use different loss type in Finetune

- given how bad perofrmance is this is not high in my list of attempted fixes unless someone has experience with good returns here

- given how bad perofrmance is this is not high in my list of attempted fixes unless someone has experience with good returns here

- Use different loss type in Finetune

-

- Use quantization aware training (QAT). For more information see QAT Tutorial

- at this point, thinking this is my only realistic option?

- at this point, thinking this is my only realistic option?

- Use quantization aware training (QAT). For more information see QAT Tutorial