Hi everyone,

I’m using Hailo AI Software Suite Version 2025-04 with a Raspberry Pi 5 and Raspberry Pi AI HAT. I trained a custom YOLOv8n model (only 1 class) using Ultralytics and exported it to ONNX using the following code:

model.export(format="onnx", imgsz=640, dynamic=False, simplify=True, opset=11)

Then I followed the standard Hailo Model Zoo steps to convert the ONNX model into a HEF file:

hailomz parse --hw-arch hailo8l --ckpt /mnt/workspace/customYolov8nbest.onnx yolov8n

hailomz optimize --hw-arch hailo8l --calib-path /mnt/workspace/calibration_images yolov8n

hailomz compile --hw-arch hailo8l yolov8n

The HEF file is successfully generated.

However, when I run the model on my target device (Raspberry Pi 5 + Hailo AI HAT), it does not detect any objects at all. The debug output from my inference code looks like this:

------------------- DEBUG -------------------

Raw output tensor: []

Max coordinate value: 0

Max score (col 4): 0

Shape: (0, 5)

Frame shape: (272, 480, 3)

Preprocessed shape: (3, 640, 640)

Input name: yolov8n/input_layer1

Output name: yolov8n/yolov8_nms_postprocess

Output tensor dtype: float64

Preprocessed dtype: float32

------------------------------------------------

So the output tensor has shape (0, 5), meaning no detections. Even though the image preprocessing works and the pipeline runs without crashing, the model returns empty outputs.
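To make the empty result easier to reason about, here is a minimal sketch of how the NMS output could be turned into pixel boxes. It assumes the output arrives as one array per class with rows of `[y_min, x_min, y_max, x_max, score]` in normalized coordinates, which would be consistent with the `(0, 5)` shape above but should be checked against the HailoRT documentation. The function name, its parameters, and the `score_thr` default are hypothetical and not part of my inference script.

```python
import numpy as np

def detections_to_pixels(per_class, width, height, score_thr=0.25):
    """Flatten per-class NMS output into (class_id, score, x0, y0, x1, y1) tuples.

    Assumption: each entry of `per_class` is an (N, 5) array whose rows are
    [y_min, x_min, y_max, x_max, score], with coordinates normalized to [0, 1]
    relative to the image the network actually saw (width x height).
    """
    results = []
    for class_id, dets in enumerate(per_class):
        # An empty class yields shape (0, 5) and is simply skipped by the loop.
        dets = np.asarray(dets, dtype=np.float32).reshape(-1, 5)
        for y0, x0, y1, x1, score in dets:
            if score < score_thr:
                continue
            results.append((
                class_id,
                float(score),
                int(round(float(x0) * width)),
                int(round(float(y0) * height)),
                int(round(float(x1) * width)),
                int(round(float(y1) * height)),
            ))
    return results

# Example with one class: one confident box and one box below the threshold.
example = [np.array([[0.1, 0.2, 0.5, 0.6, 0.9],
                     [0.0, 0.0, 1.0, 1.0, 0.1]])]
boxes = detections_to_pixels(example, width=640, height=640)
empty = detections_to_pixels([np.empty((0, 5))], width=640, height=640)
```

With an output of shape `(0, 5)`, as in my debug log, this returns an empty list, so the problem lies before this step (model, compilation, or input data) rather than in the box decoding.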

My questions:

- Could the issue be caused by an incorrect postprocessing or NMS layer during the export or compilation?

- Is there a specific configuration required for single-class YOLOv8 models?

- Could the

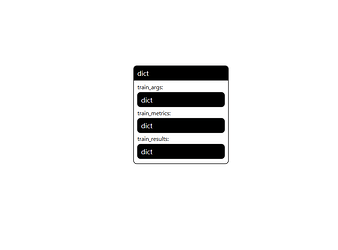

output_shapeor layer names be mismatched duringparse/optimize/compilesteps? - Should I explicitly set quantization parameters or data types (e.g., float32 vs uint8)?

- Has anyone encountered this issue with single-class models compiled for Hailo?
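On the data type question, the sketch below shows an alternative preprocessing path that I could compare against my current `(3, 640, 640)` float32 input. It assumes the compiled network expects a 640x640 image in height-width-channel order as uint8, which is an assumption I have not confirmed; the real input shape and format can be read from the HEF itself (for example with `hailortcli parse-hef`). The function name and the gray padding value of 114 are illustrative, and the nearest-neighbour resize is only there to keep the example NumPy-only.

```python
import numpy as np

def letterbox_hwc_uint8(frame, size=640, pad_value=114):
    """Resize `frame` (H, W, 3, uint8) with preserved aspect ratio and pad to size x size.

    Returns an array of shape (size, size, 3) and dtype uint8.
    Assumption: the network input is HWC uint8; verify against the HEF.
    """
    h, w = frame.shape[:2]
    scale = min(size / h, size / w)
    new_h, new_w = int(round(h * scale)), int(round(w * scale))

    # Nearest-neighbour resize via index maps (stand-in for cv2.resize).
    ys = np.minimum((np.arange(new_h) / scale).astype(int), h - 1)
    xs = np.minimum((np.arange(new_w) / scale).astype(int), w - 1)
    resized = frame[ys][:, xs]

    # Centre the resized image on a padded square canvas.
    canvas = np.full((size, size, 3), pad_value, dtype=np.uint8)
    top = (size - new_h) // 2
    left = (size - new_w) // 2
    canvas[top:top + new_h, left:left + new_w] = resized
    return canvas

# Same frame size as in the debug output: (272, 480, 3).
frame = np.zeros((272, 480, 3), dtype=np.uint8)
net_input = letterbox_hwc_uint8(frame)
```

If boxes come back with this input but not with the float32 channel-first one, the input format rather than the single-class setup would be the likely cause.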

Any help would be appreciated. I can also share the ONNX model or Python file if needed.

Thanks!