Hey, I’m trying to convert a TensorFlow model I trained to .hef format for inference on a Raspberry Pi. The model is based on ssd_mobilenet_v2, but I made some modifications to the pipeline.config and trained it on a dataset of a few hundred images. Inference works great on the TensorFlow model: it takes 640x640 input images and outputs bounding boxes, confidence scores, etc. I’m on Windows using WSL, working inside a Docker container. I downloaded the software suite image and everything installed successfully. Here’s the pipeline.config I used to train the original TensorFlow model:

    model {
      ssd {
        num_classes: 1
        image_resizer {
          keep_aspect_ratio_resizer {
            min_dimension: 640
            max_dimension: 640
            pad_to_max_dimension: true
          }
        }
        feature_extractor {
          type: "ssd_mobilenet_v2_fpn_keras"
          depth_multiplier: 1.0
          min_depth: 16
          conv_hyperparams {
            regularizer {
              l2_regularizer {
                weight: 3.9999998989515007e-05
              }
            }
            initializer {
              random_normal_initializer {
                mean: 0.0
                stddev: 0.009999999776482582
              }
            }
            activation: RELU_6
            batch_norm {
              decay: 0.996999979019165
              scale: true
              epsilon: 0.0010000000474974513
            }
          }
          use_depthwise: true
          override_base_feature_extractor_hyperparams: true
          fpn {
            min_level: 3
            max_level: 7
            additional_layer_depth: 128
          }
        }
        box_coder {
          faster_rcnn_box_coder {
            y_scale: 10.0
            x_scale: 10.0
            height_scale: 5.0
            width_scale: 5.0
          }
        }
        matcher {
          argmax_matcher {
            matched_threshold: 0.5
            unmatched_threshold: 0.5
            ignore_thresholds: false
            negatives_lower_than_unmatched: true
            force_match_for_each_row: true
            use_matmul_gather: true
          }
        }
        similarity_calculator {
          iou_similarity {
          }
        }
        box_predictor {
          weight_shared_convolutional_box_predictor {
            conv_hyperparams {
              regularizer {
                l2_regularizer {
                  weight: 3.9999998989515007e-05
                }
              }
              initializer {
                random_normal_initializer {
                  mean: 0.0
                  stddev: 0.009999999776482582
                }
              }
              activation: RELU_6
              batch_norm {
                decay: 0.996999979019165
                scale: true
                epsilon: 0.0010000000474974513
              }
            }
            depth: 128
            num_layers_before_predictor: 4
            kernel_size: 3
            class_prediction_bias_init: -4.599999904632568
            share_prediction_tower: true
            use_depthwise: true
          }
        }
        anchor_generator {
          multiscale_anchor_generator {
            min_level: 3
            max_level: 7
            anchor_scale: 4.0
            aspect_ratios: 1.0
            aspect_ratios: 2.0
            aspect_ratios: 0.5
            scales_per_octave: 2
          }
        }
        post_processing {
          batch_non_max_suppression {
            score_threshold: 9.99999993922529e-09
            iou_threshold: 0.6000000238418579
            max_detections_per_class: 100
            max_total_detections: 100
            use_static_shapes: false
          }
          score_converter: SIGMOID
        }
        normalize_loss_by_num_matches: true
        loss {
          localization_loss {
            weighted_smooth_l1 {
            }
          }
          classification_loss {
            weighted_sigmoid_focal {
              gamma: 2.0
              alpha: 0.25
            }
          }
          classification_weight: 1.0
          localization_weight: 1.0
        }
        encode_background_as_zeros: true
        normalize_loc_loss_by_codesize: true
        inplace_batchnorm_update: true
        freeze_batchnorm: false
      }
    }
    train_config {
      batch_size: 2
      data_augmentation_options {
        random_horizontal_flip {
        }
      }
      data_augmentation_options {
        random_crop_image {
          min_object_covered: 0.0
          min_aspect_ratio: 0.75
          max_aspect_ratio: 3.0
          min_area: 0.75
          max_area: 1.0
          overlap_thresh: 0.0
        }
      }
      data_augmentation_options {
        random_rgb_to_gray {
        }
      }
      data_augmentation_options {
        random_adjust_brightness {
        }
      }
      data_augmentation_options {
        random_adjust_contrast {
        }
      }
      sync_replicas: true
      optimizer {
        momentum_optimizer {
          learning_rate {
            cosine_decay_learning_rate {
              learning_rate_base: 0.001
              total_steps: 40000
              warmup_learning_rate: 0.0009
              warmup_steps: 2000
            }
          }
          momentum_optimizer_value: 0.8999999761581421
        }
        use_moving_average: false
      }
      fine_tune_checkpoint: "checkpoint"
      num_steps: 40000
      startup_delay_steps: 0.0
      replicas_to_aggregate: 8
      max_number_of_boxes: 200
      unpad_groundtruth_tensors: false
      fine_tune_checkpoint_type: "detection"
      fine_tune_checkpoint_version: V2
    }
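For cross-checking the raw head outputs (before the TF post-processing/NMS) against this config, the `multiscale_anchor_generator` settings fix how many anchors the model predicts. This is a minimal sketch, assuming a square 640x640 input and the TF Object Detection API convention that pyramid level *l* has stride 2^l with base anchor size `anchor_scale * stride`; the helper names are hypothetical:

```python
# Anchor arithmetic implied by multiscale_anchor_generator in pipeline.config.
# Assumptions: square input divisible by every stride, level l has stride 2**l.


def anchors_per_location(aspect_ratios, scales_per_octave):
    # One anchor per (aspect ratio, intra-octave scale) pair.
    return len(aspect_ratios) * scales_per_octave


def feature_map_sizes(image_size, min_level, max_level):
    # Grid cells per side at each pyramid level.
    return {level: image_size // 2**level for level in range(min_level, max_level + 1)}


def total_anchors(image_size=640, min_level=3, max_level=7,
                  aspect_ratios=(1.0, 2.0, 0.5), scales_per_octave=2):
    per_loc = anchors_per_location(aspect_ratios, scales_per_octave)
    sizes = feature_map_sizes(image_size, min_level, max_level)
    return sum(n * n * per_loc for n in sizes.values())


def base_anchor_sizes(min_level=3, max_level=7, anchor_scale=4.0, scales_per_octave=2):
    # Side length of the aspect-ratio-1.0 anchor for each level and intra-octave scale:
    # anchor_scale * 2**level * 2**(s / scales_per_octave)
    return {
        level: [anchor_scale * 2**level * 2 ** (s / scales_per_octave)
                for s in range(scales_per_octave)]
        for level in range(min_level, max_level + 1)
    }


if __name__ == "__main__":
    print(feature_map_sizes(640, 3, 7))  # {3: 80, 4: 40, 5: 20, 6: 10, 7: 5}
    print(total_anchors())               # 51150
    print(base_anchor_sizes()[3])        # smallest anchors, in pixels
```

If the end nodes chosen for Hailo sit before NMS, I’d expect their anchor dimension to match this count; if it doesn’t, the cut is probably not where I think it is.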

I exported the model to both SavedModel and TFLite formats. I then converted it to ONNX and ran the validator on the .onnx file, which reported it as valid. Where I’m having major issues is converting the .onnx to .hef.

Things I’ve tried:

- hailomz compile --yaml model.yaml --ckpt ./model.onnx --hw-arch hailo8l --calib-path /images

using this YAML file (I checked in Netron that the input and output names are correct):

    network:
      network_name: ssd_mobilenet_v2_fpn_640x640_custom
    paths:
      network_path:
        - /workspace/conversion_project/model.onnx
    inputs:
      - name: serving_default_input:0
        shape: [1, 3, 640, 640]
    outputs:
      - name: StatefulPartitionedCall:1
        shape: [1, 100, 4]  # Assuming detection_boxes
      - name: StatefulPartitionedCall:3
        shape: [1, 100]  # Assuming detection_scores
      - name: StatefulPartitionedCall:0
        shape: [1]  # Assuming num_detections
      - name: StatefulPartitionedCall:2
        shape: [1, 100]  # Assuming detection_classes
    parser:
      type: onnx
      normalization_params:
        normalize_in_net: true
        mean_list: [127.5, 127.5, 127.5]
        std_list: [127.5, 127.5, 127.5]
      start_node_shapes:
        serving_default_input:0: [1, 3, 640, 640]
      nodes:
        - serving_default_input:0
        - - StatefulPartitionedCall:1
          - StatefulPartitionedCall:3
          - StatefulPartitionedCall:0
          - StatefulPartitionedCall:2
    preprocessing:
      input_resolution: [640, 640]
      padding_color: 0
    postprocessing:
      type: ssd_mobilenet_v2
      num_classes: 1
      nms_iou_threshold: 0.6
      score_threshold: 1e-8
      max_detections: 100
    quantization:
      calib_set: /images
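The `normalization_params` above (mean 127.5, std 127.5) map 8-bit pixels to [-1, 1]. As far as I can tell that is the same transform as the TF Object Detection API’s MobileNet preprocessing, `(2/255) * x - 1`, so it should be applied exactly once: either inside the exported graph or via `normalize_in_net`, not both. A quick numeric check in plain Python (function names are my own):

```python
MEAN = 127.5  # mean_list entry from the YAML
STD = 127.5   # std_list entry from the YAML


def hailo_style_normalize(x, mean=MEAN, std=STD):
    # (x - mean) / std, as configured by normalization_params
    return (x - mean) / std


def tf_od_mobilenet_preprocess(x):
    # TF OD API MobileNet feature-extractor preprocessing: (2 / 255) * x - 1
    return (2.0 / 255.0) * x - 1.0


if __name__ == "__main__":
    print(hailo_style_normalize(0), hailo_style_normalize(255))  # -1.0 1.0
    max_diff = max(abs(hailo_style_normalize(v) - tf_od_mobilenet_preprocess(v))
                   for v in range(256))
    print(max_diff < 1e-12)  # True
```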

The error message I get for this is:

    <Hailo Model Zoo INFO> Start run for network ssd_mobilenet_v2_fpn_640x640_custom ...
    <Hailo Model Zoo INFO> Initializing the hailo8l runner...
    Traceback (most recent call last):
      File "/local/workspace/hailo_virtualenv/bin/hailomz", line 33, in <module>
        sys.exit(load_entry_point('hailo-model-zoo', 'console_scripts', 'hailomz')())
      File "/local/workspace/hailo_model_zoo/hailo_model_zoo/main.py", line 122, in main
        run(args)
      File "/local/workspace/hailo_model_zoo/hailo_model_zoo/main.py", line 111, in run
        return handlers[args.command](args)
      File "/local/workspace/hailo_model_zoo/hailo_model_zoo/main_driver.py", line 248, in compile
        _ensure_optimized(runner, logger, args, network_info)
      File "/local/workspace/hailo_model_zoo/hailo_model_zoo/main_driver.py", line 73, in _ensure_optimized
        _ensure_parsed(runner, logger, network_info, args)
      File "/local/workspace/hailo_model_zoo/hailo_model_zoo/main_driver.py", line 108, in _ensure_parsed
        parse_model(runner, network_info, ckpt_path=args.ckpt_path, results_dir=args.results_dir, logger=logger)
      File "/local/workspace/hailo_model_zoo/hailo_model_zoo/core/main_utils.py", line 126, in parse_model
        raise Exception(f"Encountered error during parsing: {err}") from None
    Exception: Encountered error during parsing: Expecting value: line 1 column 1 (char 0)

It seems to be some kind of JSON parsing issue, and the error is incredibly cryptic.
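For what it’s worth, `Expecting value: line 1 column 1 (char 0)` is the stock message Python’s `json` module raises when it is handed empty or non-JSON text (YAML included), so it plausibly means something read during the parse step was empty or not JSON, rather than anything specific to the ONNX graph. I haven’t confirmed which file that is; this only reproduces the message:

```python
import json


def json_error_message(text):
    """Return the JSONDecodeError message for `text`, or None if it parses."""
    try:
        json.loads(text)
    except json.JSONDecodeError as err:
        return str(err)
    return None


if __name__ == "__main__":
    print(json_error_message(""))           # Expecting value: line 1 column 1 (char 0)
    print(json_error_message("network:"))   # Expecting value: line 1 column 1 (char 0)
    print(json_error_message('{"ok": 1}'))  # None
```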

- I tried running a custom script to compile the model:

    import os
    from pathlib import Path

    from hailo_sdk_client import ClientRunner


    def convert_onnx_to_hef(onnx_model_path, output_hef_path):
        onnx_model_path = Path(onnx_model_path).resolve()  # Resolve symlinks
        print(f"ONNX path (Posix): {onnx_model_path.as_posix()}")

        # Validate file access
        if not onnx_model_path.exists():
            raise FileNotFoundError(f"File {onnx_model_path} does not exist")
        if not os.access(onnx_model_path, os.R_OK):  # Explicit read check
            raise PermissionError(f"File {onnx_model_path} is not readable")

        client = ClientRunner()

        # Using the suggested nodes from the error message
        try:
            translated_model = client.translate_onnx_model(
                str(onnx_model_path),
                "weed_model",
                start_node_names=['concat'],
                end_node_names=['Exp__1013', 'Slice__1007'],
                net_input_shapes={'concat': [1, 640, 640, 3]},  # Match input shape key with start_node_names
            )

            # Compile the translated model to HEF
            print(f"Translation successful, compiling to HEF: {output_hef_path}")
            client.compile_model(translated_model, target_platform="hailo8", output_path=output_hef_path)
            print(f"Compilation successful, HEF saved to: {output_hef_path}")
            return translated_model

        except TypeError as e:
            print(f"Parameter name error: {e}")
            return None

        except Exception as e:
            print(f"Error during translation: {e}")

            # Try alternative approach with just one output node at a time
            print("Attempting fallback approach with individual output nodes...")
            for output_node in ['Exp__1013', 'Slice__1007']:
                try:
                    print(f"Trying with single output node: {output_node}")
                    translated_model = client.translate_onnx_model(
                        str(onnx_model_path),
                        "weed_model",
                        start_node_names=['concat'],
                        end_node_names=[output_node],
                        net_input_shapes={'concat': [1, 640, 640, 3]},
                    )

                    # If successful, compile and return.
                    # with_suffix("") drops ".hef"; str.rstrip('.hef') would strip characters, not the suffix.
                    partial_hef_path = f"{Path(output_hef_path).with_suffix('')}_{output_node.replace(':', '_')}.hef"
                    client.compile_model(translated_model, target_platform="hailo8", output_path=partial_hef_path)
                    print(f"Partial compilation successful with output node {output_node}")
                    return translated_model

                except Exception as inner_e:
                    print(f"Failed with output node {output_node}: {inner_e}")

            return None


    if __name__ == "__main__":
        # Use full path to ensure no ambiguity
        input_model_path = "/workspace/conversion_project/model_simplified.onnx"
        output_hef_path = "/workspace/conversion_project/weeds.hef"
        convert_onnx_to_hef(input_model_path, output_hef_path)

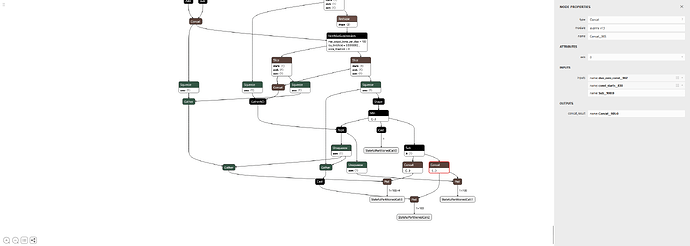

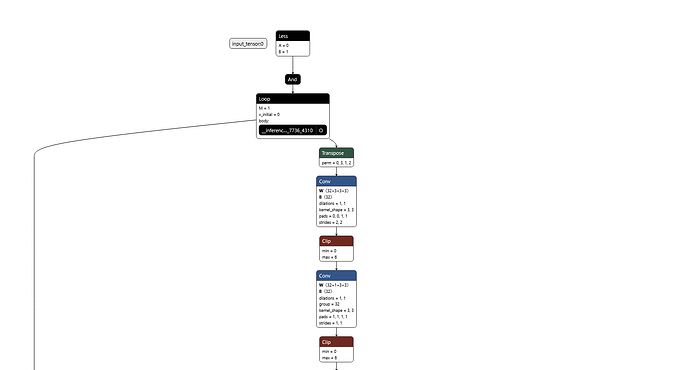

Again, I verified that the input and output names are correct. In this case the conversion runs silently for about 10 minutes before failing with the following error:

    [info] Translation started on ONNX model weed_model
    [info] Restored ONNX model weed_model (completion time: 00:00:00.07)
    [info] Extracted ONNXRuntime meta-data for Hailo model (completion time: 00:00:00.67)
    [warning] ONNX shape inference failed: [ONNXRuntimeError] : 2 : INVALID_ARGUMENT : Invalid model. Node input 'ssd_mobile_net_v2_fpn_keras_feature_extractor/FeatureMaps/top_down/projection_3/BiasAdd;WeightSharedConvolutionalBoxPredictor/WeightSharedConvolutionalClassHead/ClassPredictor/separable_conv2d_4/depthwise;ssd_mobile_net_v2_fpn_keras_feature_extractor/FeatureMaps/top_down/projection_3/Conv2D;ssd_mobile_net_v2_fpn_keras_feature_extractor/FeatureMaps/top_down/projection_3/BiasAdd/ReadVariableOp' is not a graph input, initializer, or output of a previous node.
    [info] Unable to simplify the model: cannot reshape array of size 4 into shape (1,1,640,1)
    Error during translation: cannot reshape array of size 4 into shape (1,1,640,1)
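The shape-inference warning says a node consumes a tensor that no earlier node, initializer, or graph input produces, so the simplified ONNX file may already be structurally broken before Hailo’s parser gets to it. (The `cannot reshape array of size 4 into shape (1,1,640,1)` text is NumPy’s standard reshape `ValueError`, so on its own it doesn’t say much.) Below is a minimal sketch for listing such dangling inputs, assuming the nodes are stored in topological order as the ONNX spec requires; it ignores subgraphs inside If/Loop nodes, and the function names are mine:

```python
import sys
from typing import Iterable, List, Sequence, Tuple

# (node_name, input_names, output_names)
NodeSpec = Tuple[str, Sequence[str], Sequence[str]]


def find_dangling_inputs(nodes: Iterable[NodeSpec],
                         graph_inputs: Iterable[str],
                         initializers: Iterable[str]) -> List[Tuple[str, str]]:
    """Return (node_name, input_name) pairs whose input is not defined yet."""
    known = set(graph_inputs) | set(initializers)
    dangling = []
    for name, inputs, outputs in nodes:
        for tensor in inputs:
            # An empty name marks an omitted optional input in ONNX.
            if tensor and tensor not in known:
                dangling.append((name, tensor))
        known.update(outputs)
    return dangling


def dangling_inputs_in_file(path: str) -> List[Tuple[str, str]]:
    """Load an ONNX file (needs the `onnx` package) and check its main graph."""
    import onnx

    graph = onnx.load(path).graph
    nodes = [(n.name, list(n.input), list(n.output)) for n in graph.node]
    return find_dangling_inputs(nodes,
                                [i.name for i in graph.input],
                                [t.name for t in graph.initializer])


if __name__ == "__main__" and len(sys.argv) > 1:
    for node, tensor in dangling_inputs_in_file(sys.argv[1]):
        print(f"{node}: missing input {tensor!r}")
```

Running it on both `model.onnx` and `model_simplified.onnx` would show whether the dangling input already exists in the exported model or only appears after simplification.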

Is there something obvious I’m missing, or is there a simpler way to do this? My model is actually really simple (about 7 MB), and I feel like it shouldn’t be this hard to get it running on the Hailo-8L. Are there simpler or less problematic options, like converting the model to a different intermediate format?
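For comparison, the Dataflow Compiler tutorials I’ve seen split this into three stages: translate (ONNX to HAR), optimize/quantize with a calibration set, then compile to HEF. My script goes straight from translation to `compile_model` with no quantization step and targets `hailo8` rather than `hailo8l`. Below is a sketch of the three-stage flow, assuming the `ClientRunner(hw_arch=...)`, `translate_onnx_model`, `optimize` and `compile` calls from those tutorials (they need checking against the DFC version in the container). The node names and NHWC shape are the ones from my script; `load_calib_dir` and `to_calib_array` are hypothetical helpers:

```python
from pathlib import Path

import numpy as np

START_NODE = "concat"                     # from the script above
END_NODES = ["Exp__1013", "Slice__1007"]  # from the script above
INPUT_SHAPE = [1, 640, 640, 3]            # NHWC, as in the script above


def to_calib_array(images, size=640):
    """Stack HxWx3 images into an (N, size, size, 3) float32 calibration set."""
    arrays = [np.asarray(img) for img in images]
    for a in arrays:
        if a.shape != (size, size, 3):
            raise ValueError(f"expected ({size}, {size}, 3), got {a.shape}")
    return np.stack(arrays).astype(np.float32)


def load_calib_dir(image_dir, size=640, limit=64):
    """Hypothetical helper: load up to `limit` images from a folder with Pillow."""
    from PIL import Image  # imported lazily so the rest works without Pillow

    paths = sorted(p for p in Path(image_dir).iterdir()
                   if p.suffix.lower() in {".jpg", ".jpeg", ".png"})[:limit]
    # Plain resize: ignores the keep-aspect-ratio padding from pipeline.config
    # (no difference when the images are already 640x640).
    return to_calib_array(
        [Image.open(p).convert("RGB").resize((size, size)) for p in paths], size)


def onnx_to_hef(onnx_path, hef_path, calib, hw_arch="hailo8l"):
    from hailo_sdk_client import ClientRunner  # only available in the Hailo container

    runner = ClientRunner(hw_arch=hw_arch)
    # 1) Parse the ONNX subgraph between the chosen start and end nodes
    runner.translate_onnx_model(
        str(onnx_path), "weed_model",
        start_node_names=[START_NODE],
        end_node_names=END_NODES,
        net_input_shapes={START_NODE: INPUT_SHAPE},
    )
    # 2) Quantize; `calib` must look like what the start node actually receives
    #    (e.g. already normalized if 'concat' sits after the graph's own preprocessing)
    runner.optimize(calib)
    # 3) Compile; compile() returns the HEF contents as bytes
    hef = runner.compile()
    Path(hef_path).write_bytes(hef)
    return hef_path
```

Usage would be something like `onnx_to_hef("model.onnx", "weeds.hef", load_calib_dir("/images"))`, though translation will presumably still fail until the graph issue in the warning above is sorted out.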

Thanks!