@KlausK,

I’ve installed the DFC on Ubuntu 24.04, on a machine with an Nvidia GeForce GTX 1650. Is that GPU fine? If yes, how do I use it?

Also, these are the logs I got when I ran the compile from the CLI:

hailo@DT-SGH649TZYF:/local/workspace$ hailomz compile --ckpt /local/workspace/chip_v2.onnx --calib-path /local/workspace/images/ --yaml /local/workspace/hailo_model_zoo/hailo_model_zoo/cfg/networks/yolov8n.yaml --classes 4

Start run for network yolov8n …

Initializing the hailo8 runner…

[info] Translation started on ONNX model yolov8n

[info] Restored ONNX model yolov8n (completion time: 00:00:00.05)

[info] Extracted ONNXRuntime meta-data for Hailo model (completion time: 00:00:00.30)

[info] NMS structure of yolov8 (or equivalent architecture) was detected.

[info] In order to use HailoRT post-processing capabilities, these end node names should be used: /model.22/cv2.0/cv2.0.2/Conv /model.22/cv3.0/cv3.0.2/Conv /model.22/cv2.1/cv2.1.2/Conv /model.22/cv3.1/cv3.1.2/Conv /model.22/cv2.2/cv2.2.2/Conv /model.22/cv3.2/cv3.2.2/Conv.

[info] Start nodes mapped from original model: ‘images’: ‘yolov8n/input_layer1’.

[info] End nodes mapped from original model: ‘/model.22/cv2.0/cv2.0.2/Conv’, ‘/model.22/cv3.0/cv3.0.2/Conv’, ‘/model.22/cv2.1/cv2.1.2/Conv’, ‘/model.22/cv3.1/cv3.1.2/Conv’, ‘/model.22/cv2.2/cv2.2.2/Conv’, ‘/model.22/cv3.2/cv3.2.2/Conv’.

[info] Translation completed on ONNX model yolov8n (completion time: 00:00:00.98)

[info] Saved HAR to: /local/workspace/yolov8n.har

Preparing calibration data…

[info] Loading model script commands to yolov8n from /local/workspace/hailo_model_zoo/hailo_model_zoo/cfg/alls/generic/yolov8n.alls

[info] Loading model script commands to yolov8n from string

[info] Starting Model Optimization

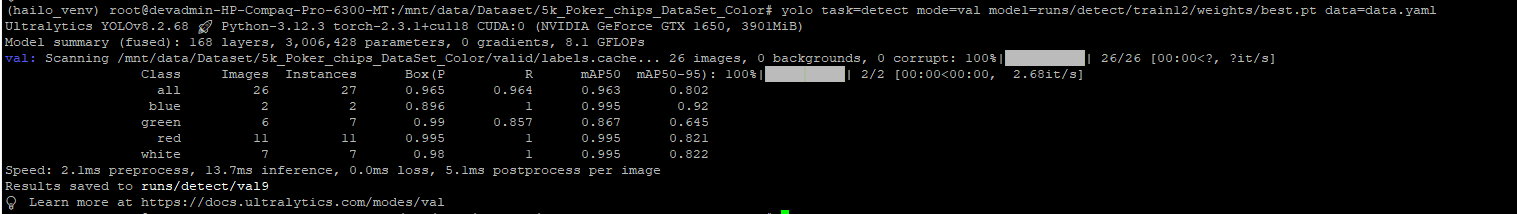

[warning] Reducing optimization level to 0 (the accuracy won’t be optimized and compression won’t be used) because there’s less data than the recommended amount (1024), and there’s no available GPU

[warning] Running model optimization with zero level of optimization is not recommended for production use and might lead to suboptimal accuracy results

[info] Model received quantization params from the hn

[info] Starting Mixed Precision

[info] Mixed Precision is done (completion time is 00:00:00.74)

[info] Layer Norm Decomposition skipped

[info] Starting Stats Collector

[info] Using dataset with 64 entries for calibration

Calibration: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████| 64/64 [00:27<00:00, 2.36entries/s]

[info] Stats Collector is done (completion time is 00:00:28.66)

[warning] The force_range command has been used, notice that its behavior was changed on this version. The old behavior forced the range on the collected calibration set statistics, but allowed the range to change during the optimization algorithms.

The new behavior forces the range throughout all optimization stages.

The old method could be restored by adding the flag weak_force_range_out=enabled to the force_range command on the following layers [‘yolov8n/conv42’, ‘yolov8n/conv53’, ‘yolov8n/conv63’]

[info] Starting Fix zp_comp Encoding

[info] Fix zp_comp Encoding is done (completion time is 00:00:00.00)

[info] matmul_equalization skipped

[info] Finetune encoding skipped

[info] Bias Correction skipped

[info] Adaround skipped

[info] Fine Tune skipped

[info] Layer Noise Analysis skipped

[info] Model Optimization is done

[info] Saved HAR to: /local/workspace/yolov8n.har

[info] Loading model script commands to yolov8n from /local/workspace/hailo_model_zoo/hailo_model_zoo/cfg/alls/generic/yolov8n.alls

[info] To achieve optimal performance, set the compiler_optimization_level to “max” by adding performance_param(compiler_optimization_level=max) to the model script. Note that this may increase compilation time.

[info] Adding an output layer after conv41

[info] Adding an output layer after conv42

[info] Adding an output layer after conv52

[info] Adding an output layer after conv53

[info] Adding an output layer after conv62

[info] Adding an output layer after conv63

[info] Loading network parameters

[warning] Output order different size

[info] Starting Hailo allocation and compilation flow

[info] Using Single-context flow

[info] Resources optimization guidelines: Strategy → GREEDY Objective → MAX_FPS

[info] Resources optimization params: max_control_utilization=75%, max_compute_utilization=75%, max_compute_16bit_utilization=75%, max_memory_utilization (weights)=75%, max_input_aligner_utilization=75%, max_apu_utilization=75%

[info] Using Single-context flow

[info] Resources optimization guidelines: Strategy → GREEDY Objective → MAX_FPS

[info] Resources optimization params: max_control_utilization=75%, max_compute_utilization=75%, max_compute_16bit_utilization=75%, max_memory_utilization (weights)=75%, max_input_aligner_utilization=75%, max_apu_utilization=75%

Validating context_0 layer by layer (100%)

● Finished

[info] Solving the allocation (Mapping), time per context: 59m 59s

Context:0/0 Iteration 4: Trying parallel mapping…

cluster_0 cluster_1 cluster_2 cluster_3 cluster_4 cluster_5 cluster_6 cluster_7 prepost

worker0 V V V V V V V V V

worker1 V V V V V V V V V

worker2 V V V V V V V V V

worker3 V V V V V V V V V

00:04

Reverts on cluster mapping: 0

Reverts on inter-cluster connectivity: 0

Reverts on pre-mapping validation: 0

Reverts on split failed: 0

[info] Iterations: 4

Reverts on cluster mapping: 0

Reverts on inter-cluster connectivity: 0

Reverts on pre-mapping validation: 0

Reverts on split failed: 0

[info] +-----------+---------------------+---------------------+--------------------+

[info] | Cluster   | Control Utilization | Compute Utilization | Memory Utilization |

[info] +-----------+---------------------+---------------------+--------------------+

[info] | cluster_0 | 43.8%               | 12.5%               | 11.7%              |

[info] | cluster_1 | 31.3%               | 12.5%               | 6.3%               |

[info] | cluster_2 | 75%                 | 53.1%               | 17.2%              |

[info] | cluster_3 | 87.5%               | 60.9%               | 44.5%              |

[info] | cluster_4 | 75%                 | 42.2%               | 22.7%              |

[info] | cluster_5 | 100%                | 65.6%               | 32%                |

[info] | cluster_6 | 87.5%               | 31.3%               | 30.5%              |

[info] | cluster_7 | 100%                | 67.2%               | 20.3%              |

[info] +-----------+---------------------+---------------------+--------------------+

[info] | Total     | 75%                 | 43.2%               | 23.1%              |

[info] +-----------+---------------------+---------------------+--------------------+

[info] Successful Mapping (allocation time: 24s)

[info] Compiling context_0…

[info] Bandwidth of model inputs: 9.375 Mbps, outputs: 4.35791 Mbps (for a single frame)

[info] Bandwidth of DDR buffers: 0.0 Mbps (for a single frame)

[info] Bandwidth of inter context tensors: 0.0 Mbps (for a single frame)

[info] Building HEF…

[info] Successful Compilation (compilation time: 8s)

[info] Saved HAR to: /local/workspace/yolov8n.har

HEF file written to yolov8n.hef

Please clarify these questions:

- Even though the machine has a GPU, the log says there is no available GPU. Why?

- Why are some of the highlighted steps skipped during optimization? Can that skipping be avoided from the CLI command?

- Is the required GPU a Hailo accelerator, or is an Nvidia GPU fine?
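
The warning in the log names two conditions: fewer calibration images than the recommended 1024, and no available GPU. Below is a minimal sketch of how both could be checked from inside the same environment that runs `hailomz`. It is not part of the Hailo tooling. The helper names, the list of image extensions, and the idea that the optimizer finds the GPU through TensorFlow are all assumptions on my side.

```python
import importlib.util
import shutil
import subprocess
from pathlib import Path

# Figure quoted in the optimizer warning above.
RECOMMENDED_CALIB_IMAGES = 1024
# Assumed set of image extensions; adjust to match the dataset.
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp"}


def count_calibration_images(calib_dir):
    """Count image files under calib_dir (recursively); 0 if it is not a directory."""
    root = Path(calib_dir)
    if not root.is_dir():
        return 0
    return sum(
        1
        for p in root.rglob("*")
        if p.is_file() and p.suffix.lower() in IMAGE_SUFFIXES
    )


def nvidia_driver_visible():
    """Return True if `nvidia-smi -L` runs and lists at least one GPU."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return False
    try:
        result = subprocess.run(
            [exe, "-L"], capture_output=True, text=True, timeout=10
        )
    except (OSError, subprocess.TimeoutExpired):
        return False
    return result.returncode == 0 and "GPU" in result.stdout


def tensorflow_gpus():
    """Return the GPU names TensorFlow reports, or None if TensorFlow is unusable.

    Assumption: the optimization step relies on TensorFlow to find the GPU.
    """
    if importlib.util.find_spec("tensorflow") is None:
        return None
    try:
        import tensorflow as tf

        return [d.name for d in tf.config.list_physical_devices("GPU")]
    except Exception:
        return None


if __name__ == "__main__":
    # Calibration path taken from the command in the log above.
    n = count_calibration_images("/local/workspace/images/")
    print(f"calibration images: {n} (recommended: {RECOMMENDED_CALIB_IMAGES})")
    print(f"nvidia-smi sees a GPU: {nvidia_driver_visible()}")
    print(f"TensorFlow GPUs: {tensorflow_gpus()}")
```

If `nvidia-smi` sees the GTX 1650 on the host but not inside the container where the command runs, that might explain the "no available GPU" message, though I have not verified this.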

Your input would be much appreciated.

Thanks