@nina-vilela @omria

I am working with the hailopython GStreamer element and processing the yolov8_pose output in Python.

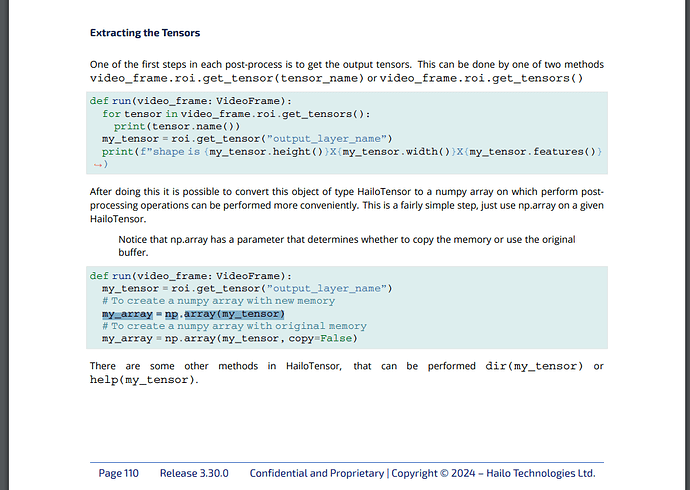

I found a way to extract NumPy arrays from the tensors in the TAPPAS docs.

But the format seems to be different from the format in HailoAsyncInference.

The hailonet results are values between 0 and 255.

The Hailo async callback results are float values (both positive and negative).
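A difference like this (0-255 on one side, signed floats on the other) is what you would expect if one path returns raw quantized uint8 values and the other returns dequantized float32 values. The sketch below shows the usual affine dequantization, `(q - zero_point) * scale`, under that assumption. The `scale` and `zero_point` numbers are made up for illustration; the real ones are per output layer and would have to be read from the model's quantization info, which this sketch does not attempt.

```python
import numpy as np


def dequantize(raw: np.ndarray, scale: float, zero_point: float) -> np.ndarray:
    """Map quantized integer values to float32 using (q - zero_point) * scale."""
    # Cast first so the subtraction cannot wrap around in uint8 arithmetic.
    return (raw.astype(np.float32) - np.float32(zero_point)) * np.float32(scale)


# Hypothetical quantization parameters for a single output layer.
raw = np.array([0, 128, 255], dtype=np.uint8)
values = dequantize(raw, scale=0.05, zero_point=128.0)
print(values.dtype, values)
```

If the float post-processing is fed the raw uint8 values without a step like this, the scores and box regressions are on the wrong scale, which could plausibly lead to overflow and NaN further down.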

And I am unable to use the yolov8_pose post-processing steps from here: Hailo-Application-Code-Examples/runtime/python/pose_estimation/pose_estimation_utils.py (main branch of hailo-ai/Hailo-Application-Code-Examples on GitHub).

    from typing import Dict

    import gi
    import numpy as np

    gi.require_version('Gst', '1.0')
    from gi.repository import Gst

    import hailo
    from post_process import PoseEstPostProcessing


    def run(frame):
        """
        Run Post Processing Steps on Hailo Raw Detections.

        Resources:
            https://github.com/hailo-ai/tappas/blob/master/core/hailo/plugins/python/hailo_python_api_sanity.py

        Args:
            frame: gsthailo.video_frame.VideoFrame
                (https://github.com/hailo-ai/tappas/blob/master/core/hailo/python/gsthailo/video_frame.py).

        Returns:
            Gst.FlowReturn.OK
        """
        print('--------CALLBACK STARTED------------------')
        post_process = PoseEstPostProcessing(
            max_detections=50,
            score_threshold=0.1,
            nms_iou_thresh=0.65,
            regression_length=15,
            strides=[8, 16, 32]
        )

        # roi = frame.roi
        # buffer = frame.buffer
        # video_info = frame.video_info
        # tensors = roi.get_tensors()
        # objects = roi.get_objects()

        try:
            roi = frame.roi
            raw_tensors = roi.get_tensors()  # List[hailo.HailoTensor]
            objects = roi.get_objects()

            tensors: Dict[str, np.array] = {}
            for t in raw_tensors:
                # print(tensor.name())  # Returns output layer name
                layer_name = t.name()
                tensor = roi.get_tensor(layer_name)
                tensor_np = np.array(tensor)
                tensor_np = tensor_np.reshape(1, *tensor_np.shape)
                tensors[layer_name] = tensor_np
                print(tensor_np)

            if len(tensors) != 9:
                print("WARNING: tensors does not match with post process step")
                return
            else:
                # result = post_process.post_process(tensors, 640, 640, 1)
                result = post_process.post_process(tensors, 640, 640, 1, 17, normalize=True)
                # print(result['bboxes'])

                # hailo_detections = []
                # for (bbox, score, keypts, joint_score) in zip(result['bboxes'], result['scores'], result['keypoints'], result['joint_scores']):
                #     xmin, ymin, w, h = [float(x) for x in bbox]
                #     bbox = hailo.HailoBBox(xmin, ymin, w, h)
                #     detection = hailo.HailoDetection(bbox, 0, score)
                #     hailo_points = []  # List[hailo.HailoPoint]
                #     for pt in keypts:
                #         hailo_points.append(hailo.HailoPoint(pt[0], pt[1], joint_score))
                #     landmarks = hailo.HailoLandmarks('yolo', hailo_points, 0.0)
                #     detection.add_object(landmarks)
                # roi.add_objects(hailo_detections)
        except Exception as err:
            print("Error ", err)
            return

        print('+++++++++++++++++++CALLBACK STARTED++++++++++++++++++++++')
        return Gst.FlowReturn.OK

Because of these inconsistent outputs, the post-processing is affected and I get NaN values in the boxes.

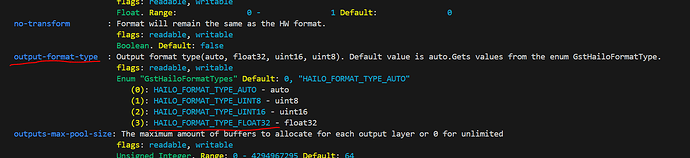
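To narrow down where the NaN values come from, it may help to summarize each tensor right before it goes into the post-processing step. The helper below is only a debugging sketch: `summarize_tensor` and the layer name `layer_a` are hypothetical, and it assumes the tensors have already been converted to non-empty NumPy arrays as in the callback above.

```python
import numpy as np


def summarize_tensor(name, arr):
    """Return dtype, shape, value range and NaN presence for one output tensor."""
    arr = np.asarray(arr)
    as_float = arr.astype(np.float64)
    return {
        "name": name,
        "dtype": str(arr.dtype),
        "shape": arr.shape,
        "min": float(np.nanmin(as_float)),
        "max": float(np.nanmax(as_float)),
        "has_nan": bool(np.isnan(as_float).any()),
        # An integer dtype suggests raw quantized data rather than dequantized floats.
        "looks_quantized": bool(np.issubdtype(arr.dtype, np.integer)),
    }


# Stand-in data; in the callback this would be each entry of the `tensors` dict.
info = summarize_tensor("layer_a", np.array([[0, 17, 255]], dtype=np.uint8))
print(info)
```

If every layer reports an integer dtype with values in 0-255, the NaN most likely originates in the post-processing math rather than in the tensors themselves.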

I have also tried passing output-format-type to hailonet, because in Hailo-Application-Code-Examples the output is set to FLOAT32. See Hailo-Application-Code-Examples/runtime/python/pose_estimation/pose_estimation.py at commit a10db4d6799a4355dd71dc7bb1e99887757fc199 (hailo-ai/Hailo-Application-Code-Examples on GitHub).

This is what I tried…

But this also does not work…