Hi, I'd like to understand this in more detail and learn which approach I should choose. I am working with two cameras on a Raspberry Pi 5, trying to detect objects in video and estimate a precise point on each object.

I am currently experimenting with a pose model, but I assume it will not be very accurate when objects overlap. So I am thinking of using two-stage inference. The first network will be an OBB (oriented bounding box) model, which seems the best fit for my use case (segmentation might also help). Then I want to crop the detected objects (max 4 objects), arrange the crops into a single tiled image, and push that tile to a YOLOv8 pose network, which should (I think) detect my required point precisely. 15 fps is ideal for my purpose, and a small delay (not more than 300 ms) is acceptable.
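A minimal sketch of the tiling bookkeeping, assuming a hypothetical 640x640 pose-model input split into a 2x2 grid of 320x320 cells, with each crop letterboxed into its cell. The key point is to remember where each crop was placed, so keypoints predicted on the tile can be mapped back to camera-frame coordinates. `Box`, `TileSlot`, `placeCrop`, `cellOf` and `tileToFrame` are illustrative names, not part of any Hailo API.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>

// Axis-aligned crop region in original camera-frame pixels
// (e.g. the bounding rectangle of an OBB detection).
struct Box {
    float x, y, w, h;
};

struct Point {
    float x, y;
};

// Where one crop was placed inside the tiled pose-model input.
struct TileSlot {
    Box src;        // crop region in the original frame
    float offsetX;  // top-left corner of the scaled crop inside the tile
    float offsetY;
    float scale;    // tile pixels per frame pixel
};

// Hypothetical model input: 640x640, split into a 2x2 grid of 320x320 cells.
constexpr float kTileSize = 640.0f;
constexpr float kCellSize = kTileSize / 2.0f;

// Place crop number i (0..3) in its cell: scale to fit while keeping the
// aspect ratio, then centre it (letterbox padding on the shorter side).
TileSlot placeCrop(const Box& src, std::size_t i) {
    assert(i < 4 && src.w > 0.0f && src.h > 0.0f);
    const float cellX = static_cast<float>(i % 2) * kCellSize;
    const float cellY = static_cast<float>(i / 2) * kCellSize;
    const float scale = std::min(kCellSize / src.w, kCellSize / src.h);
    const float padX = (kCellSize - src.w * scale) / 2.0f;
    const float padY = (kCellSize - src.h * scale) / 2.0f;
    return TileSlot{src, cellX + padX, cellY + padY, scale};
}

// Index (0..3) of the cell a tile-space point falls into.
std::size_t cellOf(const Point& p) {
    const std::size_t col = p.x >= kCellSize ? 1 : 0;
    const std::size_t row = p.y >= kCellSize ? 1 : 0;
    return row * 2 + col;
}

// Undo the placement: tile coordinates -> original frame coordinates.
Point tileToFrame(const Point& p, const TileSlot& slot) {
    return Point{slot.src.x + (p.x - slot.offsetX) / slot.scale,
                 slot.src.y + (p.y - slot.offsetY) / slot.scale};
}
```

For example, a 160x80 crop with its top-left corner at (100, 50), placed in cell 3, is scaled by 2 and padded 80 px vertically, so a keypoint at (480, 480) on the tile maps back to (180, 90) in the frame. Assigning keypoints by cell with `cellOf` assumes predictions never cross cell borders; matching each keypoint set to the pose model's own box for that object is more robust.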

I am new to ML, so I have limited in-depth knowledge of the models.

From my perspective, these are the things I have to use and understand:

- Training (I know a little and can do it)

- Conversion to ONNX (I know a little and can do it)

- Conversion to HAR (I know a little and can do it)

- Quantization and optimization (I know a little and can do it)

- Compiling to HEF (I know a little and can do it)

- Writing the post-processing steps (I am completely unsure how to start)

- Custom GStreamer plugin (I tried, but could not register it because it fails to load; I also tried to follow the TAPPAS plugin design)

- Object tracker (I can use hailotracker, but I want to keep the time of each object's first detection, and I am not sure how to do that)
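One way to keep the time of first detection alongside hailotracker is a small registry keyed by the track ID the tracker assigns. This sketch assumes you can read that ID and the buffer timestamp in your own post-processing element; timestamps are plain nanoseconds, so a GStreamer buffer PTS (which is in nanoseconds) can be passed in directly. `FirstSeenRegistry` is a hypothetical helper, not a TAPPAS class.

```cpp
#include <cassert>
#include <chrono>
#include <cstdint>
#include <optional>
#include <unordered_map>
#include <vector>

// Timestamps in nanoseconds, e.g. a GStreamer buffer PTS.
using Nanos = std::chrono::nanoseconds;

// Remembers when each tracker ID was first seen. IDs not reported for longer
// than maxAge are forgotten, so the map does not grow without bound.
class FirstSeenRegistry {
public:
    explicit FirstSeenRegistry(Nanos maxAge) : maxAge_(maxAge) {
        assert(maxAge.count() > 0);
    }

    // Call once per frame with the track IDs present in that frame.
    void update(const std::vector<std::uint64_t>& ids, Nanos now) {
        for (std::uint64_t id : ids) {
            auto it = entries_.find(id);
            if (it == entries_.end()) {
                entries_.emplace(id, Entry{now, now});  // first detection
            } else {
                it->second.lastSeen = now;
            }
        }
        for (auto it = entries_.begin(); it != entries_.end();) {
            if (now - it->second.lastSeen > maxAge_) {
                it = entries_.erase(it);  // stale track, forget it
            } else {
                ++it;
            }
        }
    }

    // Time of first detection, or std::nullopt for unknown/forgotten IDs.
    std::optional<Nanos> firstSeen(std::uint64_t id) const {
        auto it = entries_.find(id);
        if (it == entries_.end()) return std::nullopt;
        return it->second.firstSeen;
    }

    // How long the object has been tracked (zero for unknown IDs).
    Nanos trackedFor(std::uint64_t id, Nanos now) const {
        const std::optional<Nanos> first = firstSeen(id);
        return first ? now - *first : Nanos::zero();
    }

private:
    struct Entry {
        Nanos firstSeen;
        Nanos lastSeen;
    };
    Nanos maxAge_;
    std::unordered_map<std::uint64_t, Entry> entries_;
};
```

Call `update()` once per frame with the IDs present, then `firstSeen()` or `trackedFor()` when you need the timing. The `maxAge` window should be at least as long as the tracker keeps lost tracks alive; otherwise a briefly occluded object that keeps its ID would be re-registered with a new first-seen time.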

So my questions are:

- Is there anything else I need to know beyond what I listed above?

- What are good resources for creating GStreamer plugins? As I explained, I have to build tiles by cropping and masking the detections, so I will have to write code. I want to write it in C++ to make the processing faster.

- Any other recommendations on my approach?

- How exactly should I use hailonet with two HEFs? I have found these:

tappas/docs/pipelines/parallel_networks.rst at master · hailo-ai/tappas · GitHub

tappas/docs/pipelines/single_network.rst at master · hailo-ai/tappas · GitHub

From what I know, Hailo manages access to the device internally, which allows multiple virtual instances of the same physical device to be used. Please correct me if I am wrong.

I am interested in merging the OBB and pose HARs into a single HEF (see the image). Should I prefer merging, or should I keep two separate HEFs?

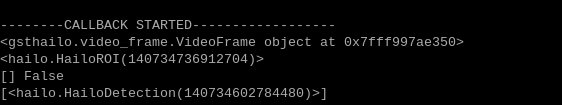
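For the crop-and-mask step needed to build the tiles, here is a minimal sketch in plain C++ on an interleaved 8-bit frame, assuming the OBB model gives centre, width, height and a rotation angle in radians. It crops the box's axis-aligned bounding rectangle and blacks out pixels outside the rotated box, so overlapping neighbours are hidden from the pose network. `Obb`, `Image`, `insideObb` and `cropMasked` are hypothetical names; resizing the crop into its tile cell is left to a separate step (for example `cv::resize` in OpenCV).

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Oriented box as produced by an OBB model: centre, size, rotation (radians).
struct Obb {
    float cx, cy, w, h, angle;
};

// Interleaved 8-bit image (e.g. RGB with channels = 3).
struct Image {
    int width = 0, height = 0, channels = 0;
    std::vector<std::uint8_t> data;
};

// True if the point (px, py) lies inside the rotated box.
bool insideObb(float px, float py, const Obb& b) {
    const float dx = px - b.cx, dy = py - b.cy;
    const float c = std::cos(b.angle), s = std::sin(b.angle);
    // Rotate the offset into the box's own axes.
    const float u = dx * c + dy * s;
    const float v = -dx * s + dy * c;
    return std::fabs(u) <= b.w / 2.0f && std::fabs(v) <= b.h / 2.0f;
}

// Crop the axis-aligned bounding rectangle of b from frame; pixels outside
// the oriented box stay 0 (black).
Image cropMasked(const Image& frame, const Obb& b) {
    assert(frame.channels > 0);
    assert(frame.data.size() ==
           static_cast<std::size_t>(frame.width) * frame.height * frame.channels);
    const float c = std::fabs(std::cos(b.angle)), s = std::fabs(std::sin(b.angle));
    const float halfW = (b.w * c + b.h * s) / 2.0f;  // half extents of bounding rect
    const float halfH = (b.w * s + b.h * c) / 2.0f;
    const int x0 = std::max(0, static_cast<int>(std::floor(b.cx - halfW)));
    const int y0 = std::max(0, static_cast<int>(std::floor(b.cy - halfH)));
    const int x1 = std::min(frame.width, static_cast<int>(std::ceil(b.cx + halfW)));
    const int y1 = std::min(frame.height, static_cast<int>(std::ceil(b.cy + halfH)));

    Image out;
    out.width = std::max(0, x1 - x0);
    out.height = std::max(0, y1 - y0);
    out.channels = frame.channels;
    out.data.assign(static_cast<std::size_t>(out.width) * out.height * out.channels, 0);
    for (int y = y0; y < y1; ++y) {
        for (int x = x0; x < x1; ++x) {
            // Test the pixel centre; masked pixels keep their 0 value.
            if (!insideObb(x + 0.5f, y + 0.5f, b)) continue;
            const std::size_t src =
                (static_cast<std::size_t>(y) * frame.width + x) * frame.channels;
            const std::size_t dst =
                (static_cast<std::size_t>(y - y0) * out.width + (x - x0)) * out.channels;
            std::copy_n(&frame.data[src], frame.channels, &out.data[dst]);
        }
    }
    return out;
}
```

Zero is just one choice of fill value; if the pose model was trained with a different padding colour, masking with that colour instead keeps the input distribution closer to training.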

So far, I have only managed to get the YOLOv8 pose part working. It runs, but I cannot post-process my points correctly in the GStreamer pipeline (see my earlier issue: Hey I want to build my own custom postprocessing .so - #7 by saurabh).
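For the post-processing itself, the core of YOLOv8 pose keypoint decoding is small. This sketch assumes the HEF exposes a raw, uint8-quantized keypoint tensor per stride laid out as [grid_h][grid_w][num_kpts * 3], and applies the Ultralytics decoding formula x = (raw_x * 2 + grid_x) * stride (likewise for y) with visibility = sigmoid(raw_v), after dequantizing with real = scale * (q - zero_point). Check your own output names, shapes and quantization parameters (for example with `hailortcli parse-hef`) before relying on it. `decodeCellKeypoints` is a hypothetical helper and omits the box/score decoding and NMS that decide which cells to decode.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

struct Keypoint {
    float x, y, visibility;
};

// Dequantize one uint8 value: real = scale * (q - zero_point).
inline float dequant(std::uint8_t q, float scale, float zeroPoint) {
    return scale * (static_cast<float>(q) - zeroPoint);
}

inline float sigmoid(float v) { return 1.0f / (1.0f + std::exp(-v)); }

// Decode the keypoints of grid cell (gx, gy) from a raw keypoint tensor laid
// out as [gridH][gridW][numKpts * 3] (assumed layout), using the Ultralytics
// YOLOv8 formula: x = (raw_x * 2 + gx) * stride, y likewise, vis = sigmoid.
std::vector<Keypoint> decodeCellKeypoints(const std::vector<std::uint8_t>& tensor,
                                          int gridW, int gridH, int numKpts,
                                          int gx, int gy, float stride,
                                          float scale, float zeroPoint) {
    assert(gx >= 0 && gx < gridW && gy >= 0 && gy < gridH);
    const std::size_t channels = static_cast<std::size_t>(numKpts) * 3;
    assert(tensor.size() == static_cast<std::size_t>(gridW) * gridH * channels);
    const std::size_t base = (static_cast<std::size_t>(gy) * gridW + gx) * channels;

    std::vector<Keypoint> kpts;
    kpts.reserve(static_cast<std::size_t>(numKpts));
    for (int k = 0; k < numKpts; ++k) {
        const std::size_t i = base + static_cast<std::size_t>(k) * 3;
        const float rx = dequant(tensor[i], scale, zeroPoint);
        const float ry = dequant(tensor[i + 1], scale, zeroPoint);
        const float rv = dequant(tensor[i + 2], scale, zeroPoint);
        kpts.push_back({(rx * 2.0f + gx) * stride,
                        (ry * 2.0f + gy) * stride,
                        sigmoid(rv)});
    }
    return kpts;
}
```

The resulting coordinates are in pose-model input pixels, so with the tiling approach they still need the per-crop scale and offset undone before they mean anything in the camera frame.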