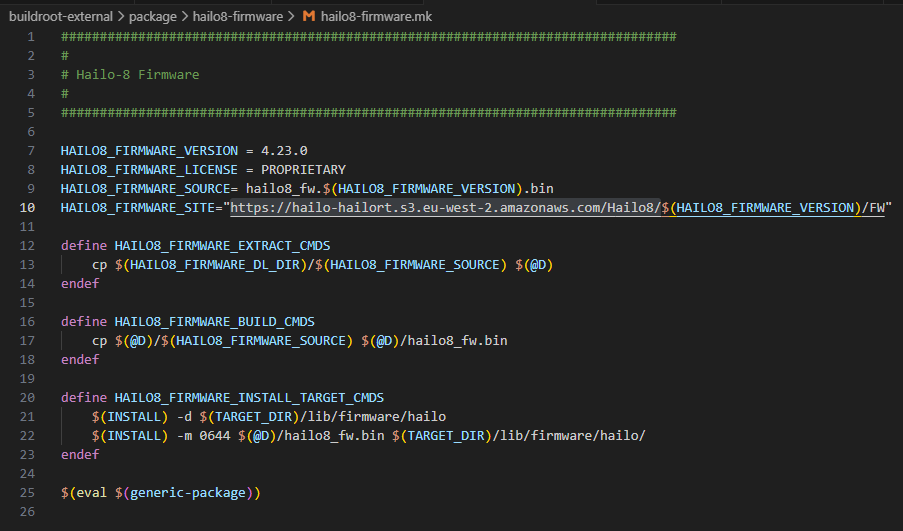

Hey Mathias, so I’m running the Hailo 8L (13 TOPS) on 4 cameras (Hikvision DS-2CD1383G2-LIUF/SL).

I tried a number of different setups to optimize for my use case (which is face detection in the near ground, while still getting car detection in the mid / far ground).

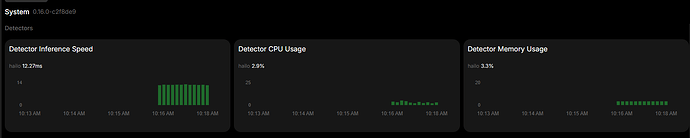

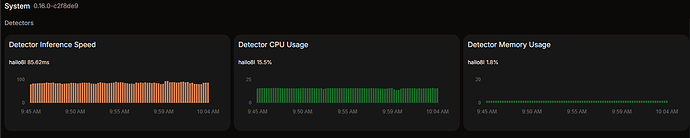

Initially I ran the default YOLO model in Frigate with my detect stream running at 720p, and 4K on my recording / audio stream. I was getting inference times of ~17 ms, CPU was never above 20%, and RAM stayed stable at ~45% (expected, as I’m running several other add-ons as well). However, I found that I wasn’t getting the detections I was hoping for in the mid / far ground. So I tried several of the YOLOv11 models (the n, s, m, l, and x variants). Inference times went up accordingly, and I found that the l variant gave me the best detections with an acceptable inference time of ~75 ms.
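For a rough sense of what those inference times mean, here is a back-of-the-envelope sketch. It assumes the detector handles one inference at a time, back to back, and that the load is split evenly across the 4 cameras. Both are simplifying assumptions, not how Frigate necessarily schedules work, and Frigate only sends regions with motion to the detector, so real demand is usually lower. The function name is just for illustration.

```python
NUM_CAMERAS = 4  # from the setup described above


def max_inferences_per_second(inference_ms: float) -> float:
    """Upper bound on throughput if inferences run strictly one after another."""
    return 1000.0 / inference_ms


# Inference times quoted above: ~17 ms (default model) and ~75 ms (YOLOv11 l).
for label, ms in [("default YOLO", 17.0), ("YOLOv11 l", 75.0)]:
    total = max_inferences_per_second(ms)
    per_camera = total / NUM_CAMERAS  # assumes an even split across cameras
    print(f"{label}: ~{total:.1f} inferences/s total, ~{per_camera:.1f} per camera")
```

Under those assumptions the ceiling drops from roughly 59 to roughly 13 inferences per second, which is still a few per camera per second.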

I was now getting good detections in the near / mid ground, but nothing further out. Also, face recognition wasn’t really picking up anything.

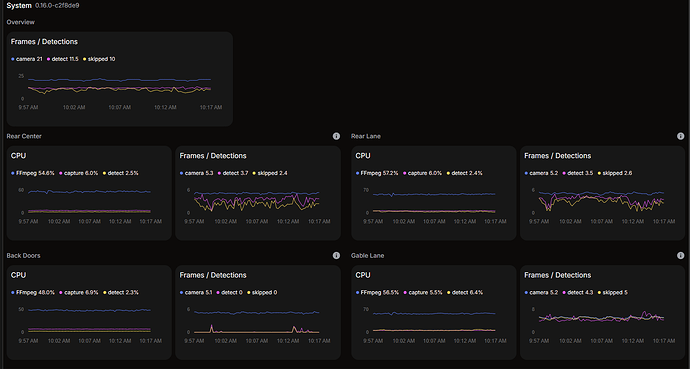

So next I just threw everything at it and gave it the 4K streams for the detect role. As expected, RAM and CPU went through the roof (both at 95% constantly), with 80%+ ffmpeg usage for each camera.

This basically made Frigate unusable, as detections weren’t happening most of the time due to the sheer volume of frame data it was trying to sort through.

So I tinkered with it until I settled on setting the main stream of the cameras to roughly 2K (2688 × 1520; this was a built-in option, why it wasn’t exactly 2K is beyond me).
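To put the three resolutions side by side, here is a quick pixel-count comparison. It assumes "720p" means 1280 × 720 and "4K" means 3840 × 2160 (neither is spelled out above), and it treats decode load as roughly proportional to pixels per frame, which is only an approximation.

```python
# Resolutions discussed above. The 720p and 4K dimensions are assumed
# standard values; 2688 x 1520 is the camera option quoted in the post.
RESOLUTIONS = {
    "720p": (1280, 720),
    "2688x1520": (2688, 1520),
    "4K": (3840, 2160),
}


def pixels(width: int, height: int) -> int:
    """Number of pixels in a single frame."""
    return width * height


baseline = pixels(*RESOLUTIONS["720p"])
for name, (width, height) in RESOLUTIONS.items():
    count = pixels(width, height)
    print(f"{name}: {count / 1e6:.2f} MP, {count / baseline:.2f}x a 720p frame")
```

On these numbers 2688 × 1520 is about 4.1 MP, a bit under half the pixels of a 4K frame but still over four times a 720p frame.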

I’m now getting detections in the near / mid / far ground (which are ridiculously accurate), as well as face detection in the near ground (pretty accurate, ~80%) and LPR in the near ground.

The trade-off is that my Pi 5 is running at ~70% CPU and ~80% RAM usage pretty constantly. I’m considering buying another one to run Home Assistant on, and dedicating the one with the Hailo device to Frigate (but this then defeats the purpose of the initial post).

The Hailo 8L device itself is nowhere near strained (see below). It’s being used, but there’s no doubt that it could handle at least another 2 processes of the same magnitude with little stress.

The cameras are showing high ffmpeg usage, but I haven’t noticed it affecting performance.

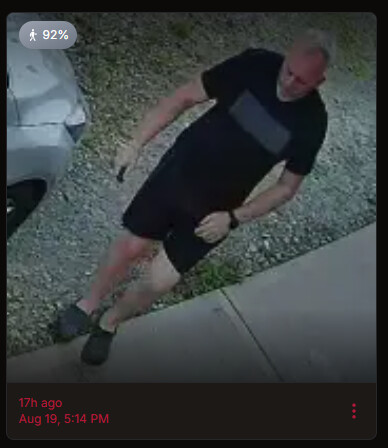

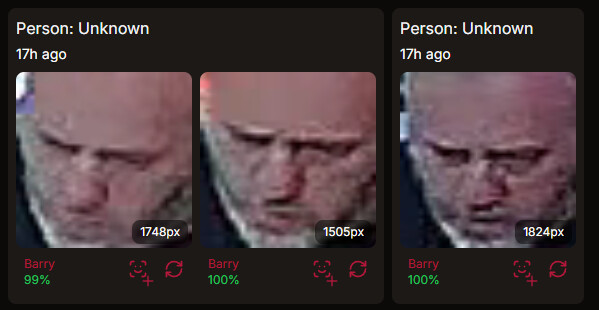

And for reference, these are some of the detections.

- Car near / mid ground (~10m)

- Facial recognition near ground (2-5m)