Hey,

I am having issues optimizing my HAR. After parsing my model, I found that some dimensions in the HAR don’t align:

```json
"best_mae_convnext_tiny_jhu_simp_is/normalization2": {
  "type": "normalization",
  "input": ["best_mae_convnext_tiny_jhu_simp_is/layer_normalization2"],
  "output": ["best_mae_convnext_tiny_jhu_simp_is/fc1"],
  "input_shapes": [[-1, 256, 480, 96]],
  "output_shapes": [[-1, 256, 480, 96]],
  "original_names": ["/backbone/features.1/features.1.0/block/block.2/LayerNormalization"],
  "compilation_params": {},
  "quantization_params": {},
  "params": {
    "elementwise_add": false,
    "activation": "linear"
  }
},
"best_mae_convnext_tiny_jhu_simp_is/fc1": {
  "type": "dense",
  "input": ["best_mae_convnext_tiny_jhu_simp_is/normalization2"],
  "output": ["best_mae_convnext_tiny_jhu_simp_is/fc2"],
  "input_shapes": [[-1, 1, 1, 11796480]],
  "output_shapes": [[-1, 1, 1, 384]],
  "original_names": ["/backbone/features.1/features.1.0/block/block.3/MatMul", "/backbone/features.1/features.1.0/block/block.3/Add", "/backbone/feat>
  "compilation_params": {},
  "quantization_params": {},
  "params": {
    "kernel_shape": [96, 384],
    "batch_norm": false,
    "activation": "gelu"
  }
},
```

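The input shape of `fc1`, `[-1, 1, 1, 11796480]`, is exactly 256 × 480 × 96, i.e. the output of `normalization2` flattened into a single vector. Its `kernel_shape` of `[96, 384]`, however, only matches the 96-channel axis. It seems as if a flattening layer is assumed between the two.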
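A quick check of the numbers, using only the shapes copied from the HAR snippet above (batch dimension omitted):

```python
# Shapes copied from the HAR snippet (batch dimension -1 omitted).
norm_out_shape = (256, 480, 96)       # normalization2 output: H, W, C
fc1_input_len = 11796480              # last dim of fc1's input_shapes
kernel_rows, kernel_cols = 96, 384    # fc1 kernel_shape

h, w, c = norm_out_shape
flattened = h * w * c

# fc1's input length equals the whole feature map flattened into one vector...
print("flattened size:", flattened, "| matches fc1 input:", flattened == fc1_input_len)

# ...but the kernel's row count only matches the channel axis.
print("kernel rows match channels:", kernel_rows == c)
print("kernel rows match flattened size:", kernel_rows == flattened)

# Weight count a dense layer would need if it really consumed the flattened
# vector, versus a per-pixel (pointwise) dense over the 96 channels.
print("flatten-dense weights:", flattened * kernel_cols)
print("pointwise-dense weights:", kernel_rows * kernel_cols)
```

A dense layer over the flattened vector would need roughly 4.5 billion weights, so a `[96, 384]` kernel only makes sense if it is applied per pixel.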

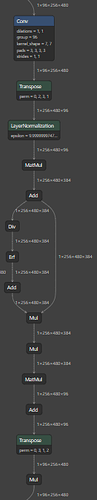

In Netron this part looks like this:

As you can see ther are no explicit flattening layers.
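The `MatMul` + `Add` entries in `fc1`'s `original_names` may explain why Netron shows no flatten: ONNX `MatMul` behaves like `numpy.matmul`, so a `[96, 384]` weight applied to a channels-last tensor multiplies the channel axis of every pixel and broadcasts over the rest. The NumPy sketch below uses illustrative sizes and random weights (a small 8 × 16 grid instead of 256 × 480), not the real model:

```python
import numpy as np

# Illustrative sizes: a small spatial grid instead of 256 x 480 to keep it fast.
# The channel count (96) and output width (384) match fc1's kernel_shape.
rng = np.random.default_rng(0)
x = rng.standard_normal((1, 8, 16, 96))   # channels-last feature map
w = rng.standard_normal((96, 384))        # MatMul weight
b = rng.standard_normal((384,))           # Add bias

# numpy.matmul (and ONNX MatMul) multiply the last two axes and broadcast the
# leading ones, so no Flatten/Reshape is needed before the dense step.
y = np.matmul(x, w) + b
print(y.shape)  # (1, 8, 16, 384)

# Equivalent view: treat every pixel as one row, apply the dense layer,
# then restore the spatial layout.
y_rows = (x.reshape(-1, 96) @ w + b).reshape(1, 8, 16, 384)
print(np.allclose(y, y_rows))  # True
```

If this reading is right, the expected `fc1` output would be `[-1, 256, 480, 384]` rather than `[-1, 1, 1, 384]`.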

Has anyone encountered a similar issue, or does anyone have insight into how this dimension mismatch is handled? Is there an implicit flattening operation happening somewhere that’s not reflected in the HAR file? Any help or pointers would be greatly appreciated!
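In case it helps with checking other layers, here is a small sketch that flags every dense layer whose last input dimension differs from the first dimension of its kernel. It assumes the layer entries (like the ones pasted above) have already been loaded into a Python dict keyed by layer name; how you extract that dict from the HAR is not shown here, and `find_dense_mismatches` is just a hypothetical helper name:

```python
import json


def find_dense_mismatches(layers):
    """Return (name, input_last_dim, kernel_rows) for every dense layer whose
    last input dimension differs from the first dimension of its kernel.

    `layers` is assumed to be a dict keyed by layer name, with entries shaped
    like the HAR snippet (type, input_shapes, params.kernel_shape).
    """
    mismatches = []
    for name, layer in layers.items():
        if layer.get("type") != "dense":
            continue
        in_dim = layer["input_shapes"][0][-1]
        kernel_rows = layer["params"]["kernel_shape"][0]
        if in_dim != kernel_rows:
            mismatches.append((name, in_dim, kernel_rows))
    return mismatches


# Trimmed-down excerpt of the snippet above (only the fields the check uses).
example = json.loads("""
{
  "best_mae_convnext_tiny_jhu_simp_is/normalization2": {
    "type": "normalization",
    "input_shapes": [[-1, 256, 480, 96]]
  },
  "best_mae_convnext_tiny_jhu_simp_is/fc1": {
    "type": "dense",
    "input_shapes": [[-1, 1, 1, 11796480]],
    "params": {"kernel_shape": [96, 384]}
  }
}
""")

print(find_dense_mismatches(example))
# [('best_mae_convnext_tiny_jhu_simp_is/fc1', 11796480, 96)]
```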

Thanks in advance!