Hey,

After successfully parsing my ONNX model, I tried to optimize it and received this error message:

hailo_sdk_common.hailo_nn.exceptions.UnsupportedModelError: Invalid kernel shape for dense layer best_mae_convnext_tiny_ucf_simp_is/fc1 (translated from /backbone/features.1/features.1.0/block/block.2/LayerNormalization). Kernel input features: 96, Input features: 11796480

After this I looked into the HAR file and noticed a mismatch between the output dimensions of the normalization layer and the input dimensions of the dense layer that follows it:

"best_mae_convnext_tiny_jhu_simp_is/normalization2": {
  "type": "normalization",
  "input": ["best_mae_convnext_tiny_jhu_simp_is/layer_normalization2"],
  "output": ["best_mae_convnext_tiny_jhu_simp_is/fc1"],
  "input_shapes": [[-1, 256, 480, 96]],
  "output_shapes": [[-1, 256, 480, 96]],
  "original_names": ["/backbone/features.1/features.1.0/block/block.2/LayerNormalization"],
  "compilation_params": {},
  "quantization_params": {},
  "params": {
    "elementwise_add": false,
    "activation": "linear"
  }
},
"best_mae_convnext_tiny_jhu_simp_is/fc1": {
  "type": "dense",
  "input": ["best_mae_convnext_tiny_jhu_simp_is/normalization2"],
  "output": ["best_mae_convnext_tiny_jhu_simp_is/fc2"],
  "input_shapes": [[-1, 1, 1, 11796480]],
  "output_shapes": [[-1, 1, 1, 384]],
  "original_names": ["/backbone/features.1/features.1.0/block/block.3/MatMul", "/backbone/features.1/features.1.0/block/block.3/Add", "/backbone/feat>
  "compilation_params": {},
  "quantization_params": {},
  "params": {
    "kernel_shape": [96, 384],
    "batch_norm": false,
    "activation": "gelu"
  }
},
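As a quick sanity check (plain Python, independent of the Hailo SDK), the numbers in the two layer entries look consistent with the whole normalization output being flattened into a single feature vector before `fc1`, while the kernel still expects only the 96 channels. The variable names here are illustrative only; the shapes are copied from the HAR excerpt.

```python
from math import prod

# Shapes copied from the HAR excerpt (batch dimension dropped).
norm_output_shape = [256, 480, 96]   # normalization2 output: H, W, C
fc1_input_shape = [1, 1, 11796480]   # fc1 input as recorded in the HAR
kernel_shape = [96, 384]             # fc1 kernel: in_features, out_features

# Flattening H * W * C gives the input feature count reported in the error.
flattened = prod(norm_output_shape)
print(flattened)                                  # 11796480
print(flattened == prod(fc1_input_shape))         # True

# The kernel's input dimension matches only the channel axis.
print(kernel_shape[0] == norm_output_shape[-1])   # True
```

So the MatMul in the original model seems to act on the last (channel) axis only, whereas the translated dense layer treats the full 256 x 480 x 96 tensor as its input features.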

I tried to resolve this by adding the following to my model script:

pre_quantization_optimization(defuse, layers=fc1, num_splits=96, defuse_type=INPUT_FEATURES)

This produced the following error message:

Traceback (most recent call last):
  File "/venv/bin/hailo", line 8, in <module>
    sys.exit(main())
  File "/venv/lib/python3.10/site-packages/hailo_sdk_client/tools/cmd_utils/main.py", line 111, in main
    ret_val = client_command_runner.run()
  File "/venv/lib/python3.10/site-packages/hailo_sdk_client/tools/cmd_utils/base_utils.py", line 68, in run
    return self._run(argv)
  File "/venv/lib/python3.10/site-packages/hailo_sdk_client/tools/cmd_utils/base_utils.py", line 89, in _run
    return args.func(args)
  File "/venv/lib/python3.10/site-packages/hailo_sdk_client/tools/optimize_cli.py", line 113, in run
    self._runner.optimize_full_precision(calib_data=dataset)
  File "/venv/lib/python3.10/site-packages/hailo_sdk_common/states/states.py", line 16, in wrapped_func
    return func(self, *args, **kwargs)
  File "/venv/lib/python3.10/site-packages/hailo_sdk_client/runner/client_runner.py", line 2031, in optimize_full_precision
    self._optimize_full_precision(calib_data=calib_data, data_type=data_type)
  File "/venv/lib/python3.10/site-packages/hailo_sdk_client/runner/client_runner.py", line 2034, in _optimize_full_precision
    self._sdk_backend.optimize_full_precision(calib_data=calib_data, data_type=data_type)
  File "/venv/lib/python3.10/site-packages/hailo_sdk_client/sdk_backend/sdk_backend.py", line 1602, in optimize_full_precision
    model, params = self._run_post_fuser(model, params, update_model_and_params)
  File "/venv/lib/python3.10/site-packages/hailo_sdk_client/sdk_backend/sdk_backend.py", line 1648, in _run_post_fuser
    post_fuser.run()
  File "/venv/lib/python3.10/site-packages/hailo_sdk_client/post_fuser/post_fuser.py", line 76, in run
    self._model = algo.run()
  File "/venv/lib/python3.10/site-packages/hailo_model_optimization/algorithms/algorithm_base.py", line 150, in run
    self._run_int()
  File "/venv/lib/python3.10/site-packages/hailo_sdk_client/post_fuser/algorithms/input_features_defuse.py", line 26, in _run_int
    act_dict = self._defuse_input_features()
  File "/venv/lib/python3.10/site-packages/hailo_sdk_client/post_fuser/algorithms/input_features_defuse.py", line 158, in _defuse_input_features
    self._move_fused_slice_params(
  File "/venv/lib/python3.10/site-packages/hailo_sdk_client/post_fuser/algorithms/fuser_algorithm.py", line 66, in _move_fused_slice_params
    reshaped_src_kernel = src_kernel.reshape(pred_output_shape[1:] + [f_out])
ValueError: cannot reshape array of size 36864 into shape (256,480,96,384)
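If I read the traceback correctly, the final `ValueError` is an element-count mismatch: the `fc1` kernel holds 96 x 384 values, but the defuse step tries to view it as a kernel over the full 256 x 480 x 96 input. A minimal check in plain Python, with shapes taken from the traceback and the `fc1` entry (the variable names mirror the traceback for readability and are not meant to reflect SDK internals):

```python
from math import prod

kernel_shape = [96, 384]                 # fc1 "kernel_shape" from the HAR
pred_output_shape = [-1, 256, 480, 96]   # output shape of normalization2
f_out = kernel_shape[1]                  # 384 output features

# Same expression as in the last traceback frame.
target_shape = pred_output_shape[1:] + [f_out]

kernel_size = prod(kernel_shape)
target_size = prod(target_shape)

print(kernel_size)    # 36864
print(target_shape)   # [256, 480, 96, 384]
print(target_size)    # 4529848320
```

The kernel has 36864 elements while the requested shape needs 4529848320, so the reshape cannot succeed regardless of `num_splits`.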

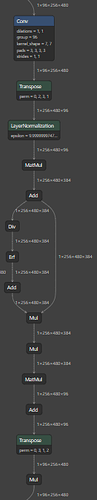

The relevant section looks like this in netron:

I’m not sure what to do at this point.

Thank you in advance for your help.