Hi Hailo

I want to run an I420 input image of shape (540, 640) through a yolov5s model with a (640, 640) input.
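For reference, an I420 frame is not a 3-channel tensor. It is a full-resolution Y plane followed by quarter-resolution U and V planes, so the frame's byte count differs from that of a 3-channel (540, 640) tensor. A small sketch of the plane arithmetic, assuming 8-bit samples and (height, width) = (540, 640); the helper names are hypothetical:

```python
from typing import Dict, Tuple


def i420_plane_shapes(height: int, width: int) -> Dict[str, Tuple[int, int]]:
    """Return (rows, cols) of each plane of an 8-bit planar I420 frame."""
    if height % 2 or width % 2:
        # U and V are subsampled 2x2, so both dimensions must be even
        raise ValueError("I420 needs even height and width")
    half = (height // 2, width // 2)
    return {"Y": (height, width), "U": half, "V": half}


def i420_frame_bytes(height: int, width: int) -> int:
    """Total bytes of one frame: full-res Y plus quarter-res U and V."""
    return sum(r * c for r, c in i420_plane_shapes(height, width).values())


if __name__ == "__main__":
    for name, shape in i420_plane_shapes(540, 640).items():
        print(name, shape)
    print("bytes per frame:", i420_frame_bytes(540, 640))
```

For (540, 640) this gives a 540×640 Y plane and two 270×320 chroma planes, 518,400 bytes in total, which is the same as 810 rows of 640 bytes (the common "height × 3/2" view of an I420 buffer). Which of these layouts the Hailo conversion layer expects at its input is not something this sketch decides.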

The image flow I'm considering is:

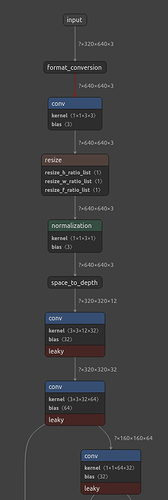

image [540, 640] → I420 to Hailo YUV → YUV to RGB → resize to [640, 640] → yolov5s model
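To have something to compare the on-chip output against, a CPU reference of the same flow can help. This is a minimal pure-Python sketch, not Hailo's implementation: it assumes full-range BT.601 coefficients for YUV to RGB and nearest-neighbour resizing, and all function names are hypothetical. Hailo's `yuv_to_rgb` and `resize` layers may use different coefficients and interpolation.

```python
from typing import List, Tuple

Pixel = Tuple[int, int, int]
Image = List[List[Pixel]]


def split_i420(buf: bytes, height: int, width: int) -> Tuple[bytes, bytes, bytes]:
    """Split a planar I420 buffer into its Y, U and V planes."""
    y_size = height * width
    c_size = (height // 2) * (width // 2)
    if len(buf) != y_size + 2 * c_size:
        raise ValueError("buffer size does not match an I420 frame of this shape")
    return buf[:y_size], buf[y_size:y_size + c_size], buf[y_size + c_size:]


def _clamp(value: float) -> int:
    """Round and clip to the 8-bit range."""
    return max(0, min(255, int(round(value))))


def i420_to_rgb(buf: bytes, height: int, width: int) -> Image:
    """Convert I420 to RGB with full-range BT.601 coefficients (an assumption)."""
    y_plane, u_plane, v_plane = split_i420(buf, height, width)
    chroma_w = width // 2
    image: Image = []
    for r in range(height):
        row: List[Pixel] = []
        for c in range(width):
            y = y_plane[r * width + c]
            # each U/V sample is shared by a 2x2 block of Y samples
            idx = (r // 2) * chroma_w + c // 2
            u = u_plane[idx] - 128
            v = v_plane[idx] - 128
            row.append((
                _clamp(y + 1.402 * v),
                _clamp(y - 0.344136 * u - 0.714136 * v),
                _clamp(y + 1.772 * u),
            ))
        image.append(row)
    return image


def resize_nearest(image: Image, out_h: int, out_w: int) -> Image:
    """Nearest-neighbour resize; the on-chip resize may interpolate differently."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[r * in_h // out_h][c * in_w // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def preprocess(buf: bytes, height: int = 540, width: int = 640,
               out_h: int = 640, out_w: int = 640) -> Image:
    """I420 -> RGB -> resize, leaving values in 0..255 for the on-chip normalization."""
    return resize_nearest(i420_to_rgb(buf, height, width), out_h, out_w)
```

With the defaults, `preprocess` takes a 518,400-byte frame and returns 640 rows of 640 RGB tuples. Because the aspect ratio is not preserved, this resize stretches the image vertically by 640/540 ≈ 1.19, so box coordinates need the inverse scaling to map back to the original frame. Pure Python is slow on full frames; this is only meant for spot-checking a few images.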

However, to change the input image format I need the input shape to be (540, 640), and it seems impossible to set that with `hailo parser onnx --tensor-shapes`.

Modifying `net_input_shapes`, the method provided in the Hailo tutorial, doesn't seem to work either.

Here is the model script I used:

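```python
model_script_commands = [
    # scale 0..255 input to 0..1 (mean 0, std 255 per channel)
    'normalization1 = normalization([0.0, 0.0, 0.0], [255.0, 255.0, 255.0])\n',
    # change the output layers' activation to sigmoid
    'change_output_activation(sigmoid)\n',
    # add an on-chip resize of the input to 640x640
    'resize_input1 = resize(resize_shapes=[640,640])\n',
    # add an on-chip YUV to RGB conversion
    'yuv_to_rgb1 = input_conversion(yuv_to_rgb)\n',
    # convert the I420 input to Hailo YUV layout (not supported in the emulator)
    'format_conversion = input_conversion(input_layer1, i420_to_hailo_yuv, emulator_support = False)\n',
    # YOLOv5 NMS post-processing on the CPU, configured by the JSON file
    'nms_postprocess("./yolov5s_personface.json", yolov5, engine=cpu)\n',
    # post-quantization finetuning, with the loss computed on conv70, conv63 and conv55
    'post_quantization_optimization(finetune, policy=enabled, learning_rate=0.0001, epochs=4, dataset_size=10, loss_factors=[1.0, 1.0, 1.0], loss_types=[l2rel, l2rel, l2rel], loss_layer_names=[conv70, conv63, conv55])\n',
]
```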
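Since the commands are separate strings, they are normally joined into one script before being loaded. A hypothetical helper that does the join and also rejects two copy/paste problems that silently break a script: non-ASCII characters such as curly quotes (which forums and word processors often substitute for straight quotes) and a missing trailing newline. Treating every command as needing its own `\n` is an assumption based on how the list above is written.

```python
from typing import List


def build_model_script(commands: List[str]) -> str:
    """Join model script commands into one string after basic sanity checks."""
    for i, cmd in enumerate(commands):
        bad = sorted({ch for ch in cmd if ord(ch) > 127})
        if bad:
            # curly quotes like \u201c \u201d \u2018 \u2019 end up here
            raise ValueError(f"command {i} contains non-ASCII characters {bad!r}")
        if not cmd.endswith("\n"):
            raise ValueError(f"command {i} is missing its trailing newline")
    return "".join(commands)
```

Usage: `script = build_model_script(model_script_commands)`, then pass `script` to the runner as in the tutorial.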

Thanks