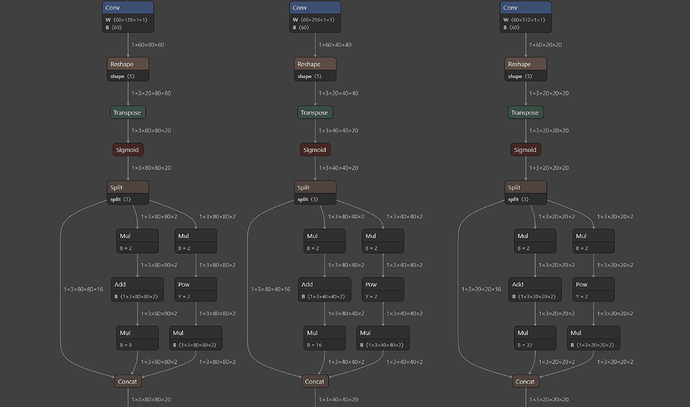

I would like to compile a YOLOv5s model without NMS so that the confidence and IoU thresholds can be configured at runtime without interruption. The relevant part of the model graph is shown above.

If I select the 3 Conv layers as the end_node_names when compiling the HEF, compilation succeeds. However, the model output is not scaled correctly and contains both positive and negative values. I assume this is because the Add/Mul/Pow operations that follow those layers are being lost.
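For reference, here is my understanding of what those trailing operations compute in the standard YOLOv5 Detect head, sketched in NumPy so it could run on the host. This is a sketch under assumptions, not something taken from my export: `decode_head` is a hypothetical helper, the anchors are the default YOLOv5s values, and the raw output is assumed to have shape (H, W, anchors * (5 + classes)) with anchor-major channels.

```python
import numpy as np

# Default YOLOv5s anchors in pixels, keyed by stride (assumed; check the model yaml).
ANCHORS = {
    8: [(10, 13), (16, 30), (33, 23)],
    16: [(30, 61), (62, 45), (59, 119)],
    32: [(116, 90), (156, 198), (373, 326)],
}


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def decode_head(raw, stride, anchors, apply_sigmoid=True):
    """Decode one detection head (hypothetical helper).

    raw: array of shape (H, W, na * no), where no = 5 + number of classes.
    Returns an array of shape (H * W * na, no) holding x, y, w, h in input
    pixels, followed by objectness and class scores in [0, 1].
    """
    h, w, c = raw.shape
    na = len(anchors)
    no = c // na
    y = raw.reshape(h, w, na, no).astype(np.float32)
    if apply_sigmoid:  # set to False if the end nodes are the Sigmoid layers
        y = sigmoid(y)

    # Per-cell (x, y) offsets, shape (H, W, 1, 2).
    gy, gx = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")
    grid = np.stack((gx, gy), axis=-1)[:, :, None, :].astype(np.float32)
    anchor_wh = np.asarray(anchors, dtype=np.float32)[None, None, :, :]

    out = y.copy()
    out[..., 0:2] = (y[..., 0:2] * 2.0 - 0.5 + grid) * stride  # Mul, Add, Mul
    out[..., 2:4] = (y[..., 2:4] * 2.0) ** 2 * anchor_wh       # Mul, Pow, Mul
    return out.reshape(-1, no)
```

If this matches the graph, the three heads could be decoded separately with strides 8, 16 and 32 and concatenated, and the confidence threshold could then be applied to the result at runtime.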

However, when I select the 3 Concat layers as the end nodes, compilation fails with the following errors:

[info] Simplified ONNX model for a parsing retry attempt (completion time: 00:00:01.40)

Traceback (most recent call last):

File "/app/omnipro_venv/lib/python3.9/site-packages/hailo_sdk_client/sdk_backend/parser/parser.py", line 235, in translate_onnx_model

parsing_results = self._parse_onnx_model_to_hn(

File "/app/omnipro_venv/lib/python3.9/site-packages/hailo_sdk_client/sdk_backend/parser/parser.py", line 316, in _parse_onnx_model_to_hn

return self.parse_model_to_hn(

File "/app/omnipro_venv/lib/python3.9/site-packages/hailo_sdk_client/sdk_backend/parser/parser.py", line 367, in parse_model_to_hn

fuser = HailoNNFuser(converter.convert_model(), net_name, converter.end_node_names)

File "/app/omnipro_venv/lib/python3.9/site-packages/hailo_sdk_client/model_translator/translator.py", line 83, in convert_model

self._create_layers()

File "/app/omnipro_venv/lib/python3.9/site-packages/hailo_sdk_client/model_translator/edge_nn_translator.py", line 40, in _create_layers

self._add_direct_layers()

File "/app/omnipro_venv/lib/python3.9/site-packages/hailo_sdk_client/model_translator/edge_nn_translator.py", line 163, in _add_direct_layers

raise ParsingWithRecommendationException(

hailo_sdk_client.model_translator.exceptions.ParsingWithRecommendationException: Parsing failed. The errors found in the graph are:

UnsupportedModelError in op /model.24/Add: In vertex /model.24/Add_input the constant value shape (1, 3, 80, 80, 2) must be broadcastable to the output shape [80, 80, 6]

UnsupportedModelError in op /model.24/Mul_3: In vertex /model.24/Mul_3_input the constant value shape (1, 3, 80, 80, 2) must be broadcastable to the output shape [80, 80, 6]

UnsupportedModelError in op /model.24/Add_1: In vertex /model.24/Add_1_input the constant value shape (1, 3, 40, 40, 2) must be broadcastable to the output shape [40, 40, 6]

UnsupportedModelError in op /model.24/Mul_7: In vertex /model.24/Mul_7_input the constant value shape (1, 3, 40, 40, 2) must be broadcastable to the output shape [40, 40, 6]

UnsupportedModelError in op /model.24/Add_2: In vertex /model.24/Add_2_input the constant value shape (1, 3, 20, 20, 2) must be broadcastable to the output shape [20, 20, 6]

UnsupportedModelError in op /model.24/Mul_11: In vertex /model.24/Mul_11_input the constant value shape (1, 3, 20, 20, 2) must be broadcastable to the output shape [20, 20, 6]

Please try to parse the model again, using these end node names: /model.24/Sigmoid_2, /model.24/Sigmoid_1, /model.24/Sigmoid
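My reading of these errors, assuming the parser follows NumPy-style broadcasting rules, is that the 5-D grid and anchor constants from the Detect head cannot be matched against the 3-D (H, W, C) layout the parser uses for the layer output. The sketch below only illustrates that shape mismatch with a dummy array; `can_broadcast` is a hypothetical helper, and I do not know whether rearranging the constant this way would be accepted by the parser.

```python
import numpy as np

# Dummy stand-in for the grid/anchor constant reported in the error.
const = np.zeros((1, 3, 80, 80, 2), dtype=np.float32)
target_shape = (80, 80, 6)  # output shape reported by the parser


def can_broadcast(arr, shape):
    """Return True if arr can be broadcast to shape under NumPy rules."""
    try:
        np.broadcast_to(arr, shape)
        return True
    except ValueError:
        return False


# The 5-D constant has more dimensions than the 3-D target, so this fails.
direct_ok = can_broadcast(const, target_shape)

# Moving the anchor axis next to the last axis and merging them gives a
# (80, 80, 6) array with anchor-major channels, which does match.
rearranged = const[0].transpose(1, 2, 0, 3).reshape(80, 80, 6)
rearranged_ok = can_broadcast(rearranged, target_shape)
```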

Is there something I can do to get correctly scaled model output WITHOUT any non-maximum suppression?