Hello, you can find all of the software downloads here after you’ve signed in:

Thanks for your notebooks! They are really helpful. Will this also work for the yolov8s model?

Also, how did you know what to put in the NMS layer JSON, or which layers to put in the quantization script?

It will work for that. You need to verify the endpoints; you can use the Netron app to check them. For the NMS JSON, use this

Can I use opset version 14?

@trieut415 When trying to run inference I got:

Condition 'input_tensor->shape()[ 1 ] == 4 + m_OutputNumClasses' is not met: input_tensor->shape()[ 1 ] is 1, 4 + m_OutputNumClasses is 6

I'm struggling with this and have already wasted a lot of time on it.
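The condition in the error compares axis 1 of the output tensor with 4 + m_OutputNumClasses. A minimal sketch of that check, assuming the usual raw YOLOv8 output layout [batch, 4 + num_classes, num_anchors]; `expected_channels` and `check_output_shape` are hypothetical helpers for illustration, not PySDK code. With 2 classes the expected value is 6, as in the message, so an axis-1 size of 1 suggests the tensor reaching the postprocessor does not have the raw layout it was configured for.

```python
from typing import Optional, Tuple


def expected_channels(num_classes: int) -> int:
    # Assumed raw YOLOv8 head: 4 box values plus one score per class on axis 1.
    return 4 + num_classes


def check_output_shape(shape: Tuple[int, ...], num_classes: int) -> Optional[str]:
    """Return None when axis 1 equals 4 + num_classes, otherwise a short diagnosis."""
    if len(shape) < 2:
        return f"expected at least 2 dimensions, got shape {shape}"
    want = expected_channels(num_classes)
    if shape[1] != want:
        return (f"shape[1] is {shape[1]}, but 4 + num_classes is {want}: "
                "the output may not be the raw YOLOv8 layout the "
                "postprocessor expects, or the class count may be wrong")
    return None


# 2 classes, as implied by "4 + m_OutputNumClasses is 6";
# 8400 anchors assumes a 640x640 input (80*80 + 40*40 + 20*20).
print(check_output_shape((1, 6, 8400), num_classes=2))  # None
print(check_output_shape((1, 1, 8400), num_classes=2))
```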

Hi @user131

From the error message, it looks like this comes from DeGirum PySDK. Please see User Guide 3: Simplifying Object Detection on a Hailo Device Using DeGirum PySDK.

Hi! I have successfully compiled for hailo8l a few times.

Now, while trying to do the same for hailo8, I see strange compilation logs that don't change for a long time.

The YOLO model is the same and all parameters are the same, except chosen_hw_arch = "hailo8". Previously I used hailo8l.

I found out one interesting thing.

These are the logs from compiling for hailo8l:

[info] ClientRunner initialized successfully.

[info] Loading network parameters

[info] Starting Hailo allocation and compilation flow

[info] Adding an output layer after conv46

[info] Adding an output layer after conv47

[info] Adding an output layer after conv56

[info] Adding an output layer after conv57

[info] Building optimization options for network layers...

[info] Successfully built optimization options - 3s 375ms

[info] Trying to compile the network in a single context

[info] Single context flow failed: Recoverable single context error

[info] Building optimization options for network layers...

[info] Successfully built optimization options - 6s 579ms

[info] Using Multi-context flow

[info] Resources optimization params: max_control_utilization=100%, max_compute_utilization=100%, max_compute_16bit_utilization=100%, max_memory_utilization (weights)=85%, max_input_aligner_utilization=100%, max_apu_utilization=100%

[info] Finding the best partition to contexts...

[.........<==>...........................] Duration: 00:02:16

Found valid partition to 3 contexts

[info] Searching for a better partition...

[................................<==>....] Duration: 00:01:58

Found valid partition to 3 contexts, Performance improved by 20.7%

[info] Searching for a better partition...

[.......<==>.............................] Duration: 00:01:00

Found valid partition to 3 contexts, Performance improved by 1.8%

[info] Searching for a better partition...

[.<==>...................................] Duration: 00:00:38

Found valid partition to 3 contexts, Performance improved by 9.9%

[info] Searching for a better partition...

[...............................<==>.....] Duration: 00:00:19

Found valid partition to 3 contexts, Performance improved by 2.7%

These are the logs from compiling for hailo8:

[info] ClientRunner initialized successfully.

[info] Loading network parameters

[info] Starting Hailo allocation and compilation flow

[info] Adding an output layer after conv46

[info] Adding an output layer after conv47

[info] Adding an output layer after conv56

[info] Adding an output layer after conv57

[info] Building optimization options for network layers...

[info] Successfully built optimization options - 3s 338ms

[info] Trying to compile the network in a single context

[info] Running Auto-Merger

[info] Auto-Merger is done

[info] Running Auto-Merger

[info] Auto-Merger is done

[info] Running Auto-Merger

[info] Auto-Merger is done

[info] Running Auto-Merger

[info] Auto-Merger is done

[info] Running Auto-Merger

[info] Auto-Merger is done

[info] Using Single-context flow

[info] Resources optimization params: max_control_utilization=120%, max_compute_utilization=100%, max_compute_16bit_utilization=100%, max_memory_utilization (weights)=90%, max_input_aligner_utilization=100%, max_apu_utilization=100%

[info] Validating layers feasibility

Validating best_context_0 layer by layer (100%)


● Finished

[info] Layers feasibility validated successfully

[info] Running resources allocation (mapping) flow, time per context: 9m 59s

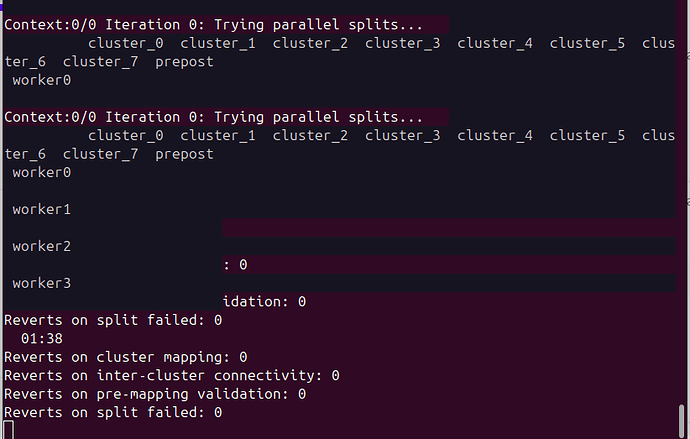

Context:0/0 Iteration 0: Mapping prepost...

cluster_0 cluster_1 cluster_2 cluster_3 cluster_4 cluster_5 cluster_6 cluster_7 prepost

worker0 * * * * * * * * V

Context:0/0 Iteration 0: Mapping prepost...

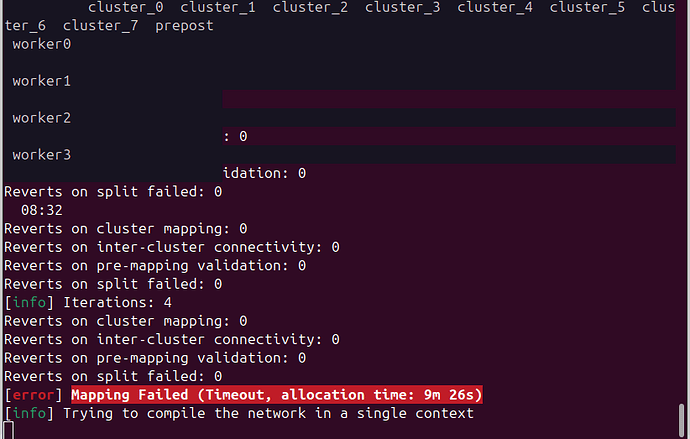

The main difference is single-context vs. multi-context.
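Besides the flow choice, the two runs log different resource limits. A small sketch for comparing the "Resources optimization params" lines of two runs; the helper names are hypothetical, and the two strings are copied from the hailo8l multi-context log and the hailo8 single-context log. The hailo8 multi-context run later in this thread logs the same 100%/85% values as hailo8l, so in these logs the higher limits go with the single-context flow rather than with the architecture.

```python
import re
from typing import Dict, Optional, Tuple

# "Resources optimization params" lines copied from the logs above.
HAILO8L_MULTI_CONTEXT = (
    "[info] Resources optimization params: max_control_utilization=100%, "
    "max_compute_utilization=100%, max_compute_16bit_utilization=100%, "
    "max_memory_utilization (weights)=85%, max_input_aligner_utilization=100%, "
    "max_apu_utilization=100%"
)
HAILO8_SINGLE_CONTEXT = (
    "[info] Resources optimization params: max_control_utilization=120%, "
    "max_compute_utilization=100%, max_compute_16bit_utilization=100%, "
    "max_memory_utilization (weights)=90%, max_input_aligner_utilization=100%, "
    "max_apu_utilization=100%"
)

# A key is a snake_case name with an optional " (qualifier)", followed by "=<int>%".
PARAM_RE = re.compile(r"([a-z0-9_]+(?: \([a-z]+\))?)=(\d+)%")


def parse_params(line: str) -> Dict[str, int]:
    """Map each utilization limit in a log line to its integer percentage."""
    return {key: int(value) for key, value in PARAM_RE.findall(line)}


def diff_params(a: Dict[str, int], b: Dict[str, int]) -> Dict[str, Tuple[Optional[int], Optional[int]]]:
    """Return {key: (value_in_a, value_in_b)} for every key whose values differ."""
    return {k: (a.get(k), b.get(k)) for k in sorted(a.keys() | b.keys()) if a.get(k) != b.get(k)}


changes = diff_params(parse_params(HAILO8L_MULTI_CONTEXT), parse_params(HAILO8_SINGLE_CONTEXT))
for key, (multi_val, single_val) in changes.items():
    print(f"{key}: {multi_val}% -> {single_val}%")
# max_control_utilization: 100% -> 120%
# max_memory_utilization (weights): 85% -> 90%
```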

What I found is that the single-context flow never works in my current compilation.

I made some changes to the optimize_model.py script by adding this block:

alls = """

....

context_switch_param(mode=enabled)

...

"""

This ensures that the hailo8 compilation uses the multi-context flow. It compiled, despite many red "Trying parallel splits" sectors.
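One way to keep both configurations reproducible is to generate the model script string in Python. A minimal sketch under stated assumptions: `build_model_script` is a hypothetical helper, the only command taken from this thread is context_switch_param(mode=enabled), and the elided "...." commands from the original script are not reproduced. In the Hailo Dataflow Compiler the resulting string is typically loaded with the runner's load_model_script method before optimization; check the DFC documentation for your version.

```python
from typing import Iterable, List

CONTEXT_SWITCH = "context_switch_param(mode=enabled)"  # command quoted in the post


def build_model_script(base_commands: Iterable[str], force_multi_context: bool) -> str:
    """Join model-script commands into one string, optionally forcing multi-context.

    base_commands: your existing commands (elided in the post), one per item.
    """
    lines: List[str] = [c.strip() for c in base_commands if c.strip()]
    if force_multi_context and CONTEXT_SWITCH not in lines:
        lines.append(CONTEXT_SWITCH)
    return "\n".join(lines) + "\n"


alls = build_model_script([], force_multi_context=True)
print(alls, end="")  # context_switch_param(mode=enabled)
# Assumed DFC usage (not executed here): runner.load_model_script(alls)
```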

It produced these logs:

[info] ClientRunner initialized successfully.

[info] Loading network parameters

[info] Starting Hailo allocation and compilation flow

[info] Adding an output layer after conv46

[info] Adding an output layer after conv47

[info] Adding an output layer after conv56

[info] Adding an output layer after conv57

[info] Building optimization options for network layers...

[info] Successfully built optimization options - 6s 597ms

[info] Building optimization options for network layers...

[info] Successfully built optimization options - 6s 508ms

[info] Using Multi-context flow

[info] Resources optimization params: max_control_utilization=100%, max_compute_utilization=100%, max_compute_16bit_utilization=100%, max_memory_utilization (weights)=85%, max_input_aligner_utilization=100%, max_apu_utilization=100%

[info] Finding the best partition to contexts...

[info] Running Auto-Merger...............] Elapsed: 00:01:30

[info] Auto-Merger is done...............] Elapsed: 00:01:37

[...........................<==>.........] Duration: 00:27:07

Found valid partition to 3 contexts

[info] Searching for a better partition...

[.<==>...................................] Duration: 00:01:46

Found valid partition to 3 contexts, Performance improved by 2.1%

[info] Searching for a better partition...

[...........................<==>.........] Elapsed: 00:16:25

[info] Partition to contexts finished successfully

[info] Partitioner finished after 170 iterations, Time it took: 45m 21s 153ms

[info] Applying selected partition to 3 contexts...

[info] Validating layers feasibility

Validating best_context_0 layer by layer (100%)


● Finished

Validating best_context_1 layer by layer (100%)


● Finished

Validating best_context_2 layer by layer (1%)

Validating best_context_2 layer by layer (3%)

Validating best_context_2 layer by layer (4%)

Validating best_context_2 layer by layer (6%)

Validating best_context_2 layer by layer (7%)

Validating best_context_2 layer by layer (9%)

Validating best_context_2 layer by layer (10%)

But…

However, when I ran the same script with each model, detecting objects in a camera stream aimed at the same picture on a monitor, the time the detection function spends on model inference was almost the same, and the hailo8l model was even about 0.01 s faster: roughly 0.37-0.38 s versus 0.38-0.39 s for hailo8.

Just in case, @shashi: I am using the DeGirum SDK for that.
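To compare the hailo8 and hailo8l builds fairly, it helps to time only the inference call over the same frames and to exclude warm-up calls. A minimal sketch; `measure_latency` is a hypothetical helper and `infer` is any callable that runs one inference, for example a wrapper around whatever call your PySDK script makes on each frame.

```python
import statistics
import time
from typing import Any, Callable, Dict, Iterable, List


def measure_latency(infer: Callable[[Any], Any], frames: Iterable[Any], warmup: int = 5) -> Dict[str, float]:
    """Time each call to `infer` and summarize per-frame latency in seconds."""
    times: List[float] = []
    for i, frame in enumerate(frames):
        start = time.perf_counter()
        infer(frame)
        elapsed = time.perf_counter() - start
        if i >= warmup:  # the first calls often include one-time setup cost
            times.append(elapsed)
    if not times:
        raise ValueError("not enough frames after warm-up")
    return {"mean_s": statistics.mean(times), "median_s": statistics.median(times), "n": len(times)}


# Usage with a stand-in for the real model call:
stats = measure_latency(lambda frame: time.sleep(0.01), range(20), warmup=5)
print(f"mean {stats['mean_s']:.3f} s, median {stats['median_s']:.3f} s over {stats['n']} frames")
```

Using the median alongside the mean makes occasional slow frames (camera hiccups, GC pauses) easier to spot.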

So, the hailo8 single-context compilation never succeeds (it always times out, as in the screenshots in my previous reply), and with multi-context the model is no faster than the hailo8l model.

Community or support, please help!

Fixed^^

I removed the following line from the optimization step script:

performance_param(compiler_optimization_level=max)

So it now uses the default level. Optimization takes a little more time, but the compiler used the single-context flow and was very fast; the model compiled almost immediately.
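The fix amounts to dropping one command from the model script so the compiler falls back to its default optimization level. A minimal sketch of that as a string transformation; `remove_commands` is a hypothetical helper, the command text is the one quoted above, and the other line in the example is a placeholder.

```python
from typing import List


def remove_commands(model_script: str, prefixes: List[str]) -> str:
    """Drop every line whose stripped text starts with one of `prefixes`."""
    kept = [line for line in model_script.splitlines()
            if not any(line.strip().startswith(p) for p in prefixes)]
    return "\n".join(kept) + ("\n" if kept else "")


script = (
    "placeholder_command()\n"  # stands in for the other, elided commands
    "performance_param(compiler_optimization_level=max)\n"
)
print(remove_commands(script, ["performance_param("]), end="")  # placeholder_command()
```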

I got an inference time of 0.21-0.22 s, so hailo8 is now roughly twice as fast as hailo8l with multi-context.
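Taking the midpoints of the reported ranges (hailo8l multi-context 0.37-0.38 s, hailo8 after the fix 0.21-0.22 s), the speedup works out to about 1.74x, close to but a little below 2x. The arithmetic as a short sketch:

```python
from typing import Tuple


def midpoint(r: Tuple[float, float]) -> float:
    return (r[0] + r[1]) / 2


def speedup(slow: Tuple[float, float], fast: Tuple[float, float]) -> float:
    """How many times faster `fast` is than `slow`, using midpoint latencies."""
    return midpoint(slow) / midpoint(fast)


hailo8l_multi = (0.37, 0.38)  # seconds per inference, hailo8l (multi-context)
hailo8_single = (0.21, 0.22)  # seconds per inference, hailo8 after the fix
print(f"{speedup(hailo8l_multi, hailo8_single):.2f}x")  # 1.74x
```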

I think I need to try explicitly setting single-context for the hailo8l arch compilation.