Hi, I am trying to do the most basic things with the Hailo Dataflow Compiler, such as converting an ONNX model to HAR and running the quantization step. I probably don't understand some of the steps I need to perform to run a simple network on Hailo.

Does the Dataflow Compiler understand what `nn.Embedding` (from PyTorch) or the ONNX `Gather` operation is?
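For context, PyTorch exports `nn.Embedding` as an ONNX `Gather` node (it appears as `onnx::Gather` in the export log below). That node picks one row of the weight table for each input index. The plain-Python sketch below only illustrates that lookup. It is not Hailo or PyTorch code, and its function names are hypothetical. It also shows the mathematically equivalent form, a one-hot vector multiplied by the weight matrix, which expresses the same lookup as a matrix product.

```python
# Illustration only: what nn.Embedding / ONNX Gather (axis 0) computes.
# gather_rows, one_hot, matmul and one_hot_embedding are hypothetical helpers.

def gather_rows(weight, indices):
    """Gather along axis 0: return a copy of row weight[i] for every index i."""
    return [list(weight[i]) for i in indices]


def one_hot(index, depth):
    """One-hot vector of length `depth` with 1.0 at position `index`."""
    return [1.0 if j == index else 0.0 for j in range(depth)]


def matmul(a, b):
    """Naive product of a (n x k) and b (k x m), both as nested lists."""
    return [[sum(a[r][t] * b[t][c] for t in range(len(b))) for c in range(len(b[0]))]
            for r in range(len(a))]


def one_hot_embedding(weight, indices):
    """Same lookup expressed as one_hot(indices) @ weight."""
    vocab_len = len(weight)
    return matmul([one_hot(i, vocab_len) for i in indices], weight)


# Tiny table: vocab_len = 4, embedding_len = 3
weight = [[0.0, 0.1, 0.2],
          [1.0, 1.1, 1.2],
          [2.0, 2.1, 2.2],
          [3.0, 3.1, 3.2]]
indices = [3, 0, 3, 1]

print(gather_rows(weight, indices))
print(one_hot_embedding(weight, indices) == gather_rows(weight, indices))  # True
```

The one-hot form costs a full `vocab_len`-wide multiplication per token, so it is only a way of seeing what the lookup means, not a statement about how the compiler handles it.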

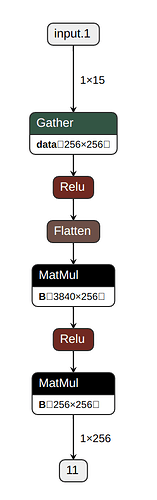

Here is the model visualized with Netron:

Here is a simple script I am trying to work with:

```python
import torch
import torch.nn as nn

import hailo_sdk_client
from hailo_sdk_client import ClientRunner

print(f'Hailo Dataflow Compiler v{hailo_sdk_client.__version__}')

batch_size = 1
input_len = 15
vocab_len = 256  # one token per byte value of UTF-8 text
embedding_len = 256

torch.manual_seed(0)

model = nn.Sequential(
    nn.Embedding(vocab_len, embedding_len),
    nn.ReLU(),
    nn.Flatten(),
    nn.Linear(input_len * embedding_len, 256, bias=False),
    nn.ReLU(),
    nn.Linear(256, vocab_len, bias=False),
)

output = model(torch.zeros(batch_size, input_len, dtype=torch.long))
print(f"{output.mean()=}, {output.std(unbiased=False)=}, {output.shape=}")

with torch.no_grad():
    dummy_input = torch.randint(vocab_len, (batch_size, input_len))
    # torch.onnx.export(model, dummy_input, "model.onnx", verbose=True, input_names=["input"], output_names=["output"])
    torch.onnx.export(model, dummy_input, "model.onnx", verbose=True)

# chosen_hw_arch = "hailo8"
# chosen_hw_arch = "hailo15h"  # For Hailo-15 devices
chosen_hw_arch = "hailo8r"  # For Mini PCIe modules or Hailo-8R devices

runner = ClientRunner(hw_arch=chosen_hw_arch)

hn, npz = runner.translate_onnx_model(
    "model.onnx",
    "network",
    start_node_names=["/0/Gather"],
    end_node_names=["/5/MatMul"],
    net_input_shapes={"/0/Gather": [batch_size, input_len]},
)

runner.save_har("model.har")

runner.optimize(None)

hef = runner.compile()

file_name = "model.hef"
with open(file_name, "wb") as f:
    f.write(hef)
```
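As a sanity check, the shape each node should produce can be traced by hand and compared with the exported graph in the output below (for example, `/2/Flatten` should give `(1, 3840)` and `/5/MatMul` should give `(1, 256)`). The plain-Python sketch below does only that arithmetic. `matmul_shape` and `trace_shapes` are hypothetical helpers, `hidden_len` is an introduced name for the hard-coded width 256 in the script, and the node names are copied from the export log.

```python
# Hand-trace of output shapes through the nn.Sequential model above.
# matmul_shape, trace_shapes and hidden_len are hypothetical, for illustration only.

def matmul_shape(a_shape, b_shape):
    """Shape of a 2-D matrix product; raises if the inner dimensions disagree."""
    (n, k), (k2, m) = a_shape, b_shape
    if k != k2:
        raise ValueError(f"inner dimensions differ: {k} vs {k2}")
    return (n, m)


def trace_shapes(batch_size, input_len, vocab_len, embedding_len, hidden_len):
    """Return (node name, output shape) pairs, in graph order."""
    trace = [("input", (batch_size, input_len))]        # int64 token indices
    x = (batch_size, input_len, embedding_len)          # Gather: one table row per index
    trace += [("/0/Gather", x), ("/1/Relu", x)]         # ReLU keeps the shape
    x = (batch_size, input_len * embedding_len)         # Flatten(axis=1)
    trace.append(("/2/Flatten", x))
    x = matmul_shape(x, (input_len * embedding_len, hidden_len))  # weight (3840, 256)
    trace += [("/3/MatMul", x), ("/4/Relu", x)]
    x = matmul_shape(x, (hidden_len, vocab_len))        # weight (256, 256)
    trace.append(("/5/MatMul", x))
    return trace


for name, shape in trace_shapes(batch_size=1, input_len=15, vocab_len=256,
                                embedding_len=256, hidden_len=256):
    print(f"{name:12s} {shape}")
```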

Output:

```
Hailo Dataflow Compiler v3.28.0
output.mean()=tensor(-0.0118, grad_fn=<MeanBackward0>), output.std(unbiased=False)=tensor(0.1509, grad_fn=<StdBackward0>), output.shape=torch.Size([1, 256])
Exported graph: graph(%input.1 : Long(1, 15, strides=[15, 1], requires_grad=0, device=cpu),
      %0.weight : Float(256, 256, strides=[256, 1], requires_grad=1, device=cpu),
      %onnx::MatMul_12 : Float(3840, 256, strides=[1, 3840], requires_grad=0, device=cpu),
      %onnx::MatMul_13 : Float(256, 256, strides=[1, 256], requires_grad=0, device=cpu)):
  %/0/Gather_output_0 : Float(1, 15, 256, strides=[3840, 256, 1], requires_grad=0, device=cpu) = onnx::Gather[onnx_name="/0/Gather"](%0.weight, %input.1), scope: torch.nn.modules.container.Sequential::/torch.nn.modules.sparse.Embedding::0 # /fedora/p/i/qubu/.venv/lib/python3.10/site-packages/torch/nn/functional.py:2267:0
  %/1/Relu_output_0 : Float(1, 15, 256, strides=[3840, 256, 1], requires_grad=0, device=cpu) = onnx::Relu[onnx_name="/1/Relu"](%/0/Gather_output_0), scope: torch.nn.modules.container.Sequential::/torch.nn.modules.activation.ReLU::1 # /fedora/p/i/qubu/.venv/lib/python3.10/site-packages/torch/nn/functional.py:1500:0
  %/2/Flatten_output_0 : Float(1, 3840, strides=[3840, 1], requires_grad=0, device=cpu) = onnx::Flatten[axis=1, onnx_name="/2/Flatten"](%/1/Relu_output_0), scope: torch.nn.modules.container.Sequential::/torch.nn.modules.flatten.Flatten::2 # /fedora/p/i/qubu/.venv/lib/python3.10/site-packages/torch/nn/modules/flatten.py:50:0
  %/3/MatMul_output_0 : Float(1, 256, strides=[256, 1], requires_grad=0, device=cpu) = onnx::MatMul[onnx_name="/3/MatMul"](%/2/Flatten_output_0, %onnx::MatMul_12), scope: torch.nn.modules.container.Sequential::/torch.nn.modules.linear.Linear::3 # /fedora/p/i/qubu/.venv/lib/python3.10/site-packages/torch/nn/modules/linear.py:117:0
  %/4/Relu_output_0 : Float(1, 256, strides=[256, 1], requires_grad=0, device=cpu) = onnx::Relu[onnx_name="/4/Relu"](%/3/MatMul_output_0), scope: torch.nn.modules.container.Sequential::/torch.nn.modules.activation.ReLU::4 # /fedora/p/i/qubu/.venv/lib/python3.10/site-packages/torch/nn/functional.py:1500:0
  %11 : Float(1, 256, strides=[256, 1], requires_grad=0, device=cpu) = onnx::MatMul[onnx_name="/5/MatMul"](%/4/Relu_output_0, %onnx::MatMul_13), scope: torch.nn.modules.container.Sequential::/torch.nn.modules.linear.Linear::5 # /fedora/p/i/qubu/.venv/lib/python3.10/site-packages/torch/nn/modules/linear.py:117:0
  return (%11)

[info] Translation started on ONNX model network
[info] Restored ONNX model network (completion time: 00:00:00.03)
[info] Extracted ONNXRuntime meta-data for Hailo model (completion time: 00:00:00.11)
Traceback (most recent call last):
  File "/fedora/p/i/qubu/main_converter.py", line 35, in <module>
    hn, npz = runner.translate_onnx_model(
  File "/fedora/p/i/qubu/.venv/lib/python3.10/site-packages/hailo_sdk_common/states/states.py", line 16, in wrapped_func
    return func(self, *args, **kwargs)
  File "/fedora/p/i/qubu/.venv/lib/python3.10/site-packages/hailo_sdk_client/runner/client_runner.py", line 1158, in translate_onnx_model
    parser.translate_onnx_model(
  File "/fedora/p/i/qubu/.venv/lib/python3.10/site-packages/hailo_sdk_client/sdk_backend/parser/parser.py", line 209, in translate_onnx_model
    set_model_net_input_shapes(onnx_model, net_input_shapes)
  File "/fedora/p/i/qubu/.venv/lib/python3.10/site-packages/hailo_sdk_common/onnx_tools/onnx_shape_inference.py", line 202, in set_model_net_input_shapes
    raise UnsupportedGraphInputError(
hailo_sdk_common.onnx_tools.onnx_shape_inference.UnsupportedGraphInputError: Couldn't find predecessors for node /0/Gather in the given model.
```

Thank you.