Hi guys

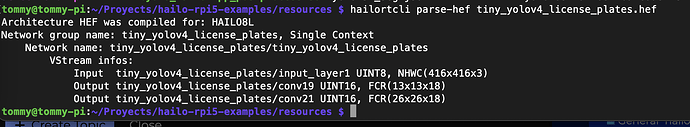

I retrained tiny_yolov4_license_plates on a custom dataset, because the precompiled model targets the Hailo-8 and I have a Hailo-8L. I was able to follow every step of this guide:

docker build --build-arg timezone=$(cat /etc/timezone) -t license_plate_detection:v0 .

docker run --name "lpd" -it --gpus all --ipc=host -v /home/tommy/halio_suite/shared_with_docker:/workspace/shared license_plate_detection:v0

./darknet detector train data/obj.data ./cfg/tiny_yolov4_license_plates.cfg tiny_yolov4_license_plates.weights -map -clear

Before training, I edited these files:

./cfg/tiny_yolov4_license_plates.cfg:

root@10b08d11ca89:/workspace/darknet# more ./cfg/tiny_yolov4_license_plates.cfg

[net]

# Testing

#batch=1

#subdivisions=1

# Training

batch=64

subdivisions=32

width=416

height=416

channels=3

momentum=0.9

decay=0.0005

angle=0

saturation = 1.5

exposure = 1.5

hue=.1

learning_rate=0.00261

burn_in=1000

max_batches = 2000

policy=steps

steps=3600,3800

scales=.1,.1

[convolutional]

batch_normalize=1

filters=32

size=3

stride=2

pad=1

activation=leaky

[convolutional]

batch_normalize=1

filters=64

size=3

stride=2

pad=1

activation=leaky

[convolutional]

batch_normalize=1

filters=64

size=3

stride=1

pad=1

activation=leaky

[route]

layers=-1

groups=2

group_id=1

[convolutional]

batch_normalize=1

filters=32

size=3

stride=1

pad=1

activation=leaky

[convolutional]

batch_normalize=1

filters=32

size=3

stride=1

pad=1

activation=leaky

[route]

layers = -1,-2

[convolutional]

batch_normalize=1

filters=64

size=1

stride=1

pad=1

activation=leaky

[route]

layers = -6,-1

[maxpool]

size=2

stride=2

[convolutional]

batch_normalize=1

filters=128

size=3

stride=1

pad=1

activation=leaky

[route]

layers=-1

groups=2

group_id=1

[convolutional]

batch_normalize=1

filters=64

size=3

stride=1

pad=1

activation=leaky

[convolutional]

batch_normalize=1

filters=64

size=3

stride=1

pad=1

activation=leaky

[route]

layers = -1,-2

[convolutional]

batch_normalize=1

filters=128

size=1

stride=1

pad=1

activation=leaky

[route]

layers = -6,-1

[maxpool]

size=2

stride=2

[convolutional]

batch_normalize=1

filters=256

size=3

stride=1

pad=1

activation=leaky

[route]

layers=-1

groups=2

group_id=1

[convolutional]

batch_normalize=1

filters=128

size=3

stride=1

pad=1

activation=leaky

[convolutional]

batch_normalize=1

filters=128

size=3

stride=1

pad=1

activation=leaky

[route]

layers = -1,-2

[convolutional]

batch_normalize=1

filters=256

size=1

stride=1

pad=1

activation=leaky

[route]

layers = -6,-1

[maxpool]

size=2

stride=2

[convolutional]

batch_normalize=1

filters=512

size=3

stride=1

pad=1

activation=leaky

##################################

[convolutional]

batch_normalize=1

filters=256

size=1

stride=1

pad=1

activation=leaky

[convolutional]

batch_normalize=1

filters=512

size=3

stride=1

pad=1

activation=leaky

[convolutional]

size=1

stride=1

pad=1

filters=18

activation=linear

[yolo]

mask = 3,4,5

anchors = 10,14, 23,27, 37,58, 81,82, 135,169, 344,319

classes=1

num=6

jitter=.3

scale_x_y = 1.05

cls_normalizer=1.0

iou_normalizer=0.07

iou_loss=ciou

ignore_thresh = .7

truth_thresh = 1

random=0

resize=1.5

nms_kind=greedynms

beta_nms=0.6

[route]

layers = -4

[convolutional]

batch_normalize=1

filters=128

size=1

stride=1

pad=1

activation=leaky

[upsample]

stride=2

[route]

layers = -1, 23

[convolutional]

batch_normalize=1

filters=256

size=3

stride=1

pad=1

activation=leaky

[convolutional]

size=1

stride=1

pad=1

filters=18

activation=linear

[yolo]

mask = 0,1,2

anchors = 10,14, 23,27, 37,58, 81,82, 135,169, 344,319

classes=1

num=6

jitter=.3

scale_x_y = 1.05

cls_normalizer=1.0

iou_normalizer=0.07

iou_loss=ciou

ignore_thresh = .7

truth_thresh = 1

random=0

resize=1.5

nms_kind=greedynms

beta_nms=0.6

where I mainly changed batch, subdivisions, max_batches and steps.
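For reference, here is a minimal sketch of the rules of thumb from the AlexeyAB darknet README for these values: max_batches = classes * 2000 (but not less than 6000 and not less than the number of training images), steps at 80% and 90% of max_batches, and filters = (classes + 5) * 3 in the [convolutional] layer before each [yolo] layer. The function names and the image count of 5000 are placeholders, not values from my setup. It also shows that with max_batches = 2000 and steps=3600,3800, as in the cfg above, the learning-rate steps are never reached before training stops.

```python
def darknet_cfg_hints(classes, num_train_images, masks_per_yolo=3):
    """Rule-of-thumb cfg values from the AlexeyAB darknet README (not Hailo-specific)."""
    max_batches = max(classes * 2000, num_train_images, 6000)
    # Integer arithmetic keeps the 80% / 90% marks exact.
    steps = (max_batches * 8 // 10, max_batches * 9 // 10)
    # Each anchor predicts 4 box coordinates + 1 objectness score + one score per class.
    filters = (classes + 5) * masks_per_yolo
    return {"max_batches": max_batches, "steps": steps, "filters": filters}


def steps_reachable(max_batches, steps):
    """True if every learning-rate step happens before training stops."""
    return all(step < max_batches for step in steps)


# num_train_images=5000 is a placeholder; use the line count of your train.txt.
hints = darknet_cfg_hints(classes=1, num_train_images=5000)
print(hints)

# The values from the cfg above: the steps lie beyond max_batches.
print(steps_reachable(2000, (3600, 3800)))
```

The filters value of 18 in the cfg matches this rule for one class, so only the max_batches/steps pair looks worth revisiting.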

data/obj.data:

root@10b08d11ca89:/workspace/darknet# more data/obj.data

classes = 1

train = /workspace/shared/licence-darknet/train.txt

valid = /workspace/shared/licence-darknet/valid.txt

names = data/obj.names

backup = backup/

train.txt and valid.txt list the image paths, one per line, something like this:

/workspace/shared/licence-darknet/train/00009e5b390986a0_jpg.rf.04d1cbabbde68b6be73ada81c47f3528.jpg

/workspace/shared/licence-darknet/train/00009e5b390986a0_jpg.rf.134d6373e0bde30fc8b9747bc1232667.jpg

/workspace/shared/licence-darknet/train/00009e5b390986a0_jpg.rf.e2f9df03aa702fad603db72632cae9cc.jpg
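A minimal sketch of how such a list file could be generated, assuming the images sit directly inside the train/ and valid/ folders. The function name write_image_list and the set of extensions are assumptions for illustration.

```python
from pathlib import Path

IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png"}  # assumption: adjust to your dataset


def write_image_list(image_dir, list_path):
    """Write the absolute path of every image in image_dir to list_path, one per line.

    Returns the number of paths written.
    """
    image_dir = Path(image_dir).resolve()
    images = sorted(
        p for p in image_dir.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS
    )
    Path(list_path).write_text("".join(f"{p}\n" for p in images))
    return len(images)


# Example call for the layout used in this post (run inside the training container):
#   root = Path("/workspace/shared/licence-darknet")
#   write_image_list(root / "train", root / "train.txt")
#   write_image_list(root / "valid", root / "valid.txt")
```

Non-image files in the same folder, such as label .txt files, are skipped by the extension filter.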

Then I exported the trained weights to ONNX:

python …/pytorch-YOLOv4/demo_darknet2onnx.py cfg/tiny_yolov4_license_plates.cfg backup/tiny_yolov4_license_plates_last.weights /workspace/shared/licence-darknet/valid/xemayBigPlate93_jpg.rf.27a32023ca82a9b3685fd6e3833284fe.jpg 1

Finally, I compiled the model:

hailomz compile --ckpt /local/shared_with_docker/tiny_yolov4_license_plates_1_416_416.onnx --calib-path /local/shared_with_docker/licence-darknet/valid/ --yaml hailo_model_zoo/cfg/networks/tiny_yolov4_license_plates.yaml --hw-arch hailo8l
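Because a long one-line command is easy to mistype, the same call can be assembled argument by argument. This is only a sketch: it assumes hailomz is on the PATH inside the Hailo container and uses only the flags from the command above. The helper name build_hailomz_compile is hypothetical, and nothing is executed unless you uncomment the last line.

```python
import shlex


def build_hailomz_compile(ckpt, calib_path, yaml_path, hw_arch="hailo8l"):
    """Return the hailomz compile call as an argument list (nothing is executed)."""
    return [
        "hailomz", "compile",
        "--ckpt", str(ckpt),
        "--calib-path", str(calib_path),
        "--yaml", str(yaml_path),
        "--hw-arch", hw_arch,
    ]


cmd = build_hailomz_compile(
    ckpt="/local/shared_with_docker/tiny_yolov4_license_plates_1_416_416.onnx",
    calib_path="/local/shared_with_docker/licence-darknet/valid/",
    yaml_path="hailo_model_zoo/cfg/networks/tiny_yolov4_license_plates.yaml",
)
print(shlex.join(cmd))  # shell-quoted form, handy for copy and paste

# To actually run it inside the container:
#   import subprocess
#   subprocess.run(cmd, check=True)
```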

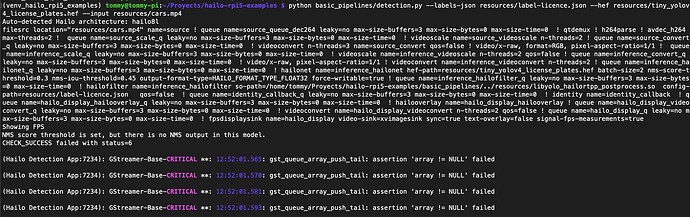

But when I try to run it in the basic_pipeline detection example, the same way the barcode example does, I get the errors shown at the end of this post. I'm not sure I'm doing it right, so any help would be appreciated.

Thanks, guys.

NMS score threshold is set, but there is no NMS output in this model.

(Hailo Detection App:7234): GStreamer-Base-CRITICAL **: 12:52:01.565: gst_queue_array_push_tail: assertion 'array != NULL' failed