I'm actually working on oriented bounding boxes (OBB) instead of axis-aligned bounding boxes. Is there any such model in the Hailo Model Zoo?

Hey @SAN

Our Model Zoo doesn’t currently include models specifically designed for OBB detection out of the box. Most of our object detection models, including our YOLO variants, use Axis-Aligned Bounding Boxes (AABB).

However, it’s entirely possible to adapt existing models like YOLOv5 or YOLOv8 to support OBB. Here’s a brief overview of how you could approach this:

- Model Adjustments: Modify the final layer of the detection model to output 5 parameters: (x_center, y_center, width, height, angle). The angle will define the rotation of the bounding box.

- Post-Processing: Implement Rotated Non-Maximum Suppression (Rotated NMS) for post-processing. This will handle the angle as part of the box definition.
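To make the Rotated NMS step concrete, here is a minimal sketch in plain Python. It assumes boxes are given as (x_center, y_center, width, height, angle) with the angle in radians, and it computes the overlap of two rotated rectangles by polygon clipping (Sutherland-Hodgman). The function names are illustrative and not part of any Hailo API; a production version would normally be vectorized.

```python
import math

def obb_to_corners(cx, cy, w, h, angle):
    """Four corners of a rotated box, in counter-clockwise order (angle in radians)."""
    c, s = math.cos(angle), math.sin(angle)
    half = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    return [(cx + x * c - y * s, cy + x * s + y * c) for x, y in half]

def polygon_area(poly):
    """Shoelace formula; positive for counter-clockwise polygons."""
    return 0.5 * sum(x1 * y2 - x2 * y1
                     for (x1, y1), (x2, y2) in zip(poly, poly[1:] + poly[:1]))

def _side(a, b, p):
    """> 0 if p lies to the left of the directed edge a -> b, < 0 if to the right."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def clip_polygon(subject, clipper):
    """Sutherland-Hodgman: intersect `subject` with the convex CCW polygon `clipper`."""
    output = subject
    for a, b in zip(clipper, clipper[1:] + clipper[:1]):
        if not output:
            break
        current, output = output, []
        for p, q in zip(current, current[1:] + current[:1]):
            sp, sq = _side(a, b, p), _side(a, b, q)
            if sp >= 0:  # p is inside (or on) this clip edge
                output.append(p)
            if (sp > 0 > sq) or (sp < 0 < sq):  # edge p -> q crosses the clip edge
                t = sp / (sp - sq)
                output.append((p[0] + t * (q[0] - p[0]), p[1] + t * (q[1] - p[1])))
    return output

def rotated_iou(box_a, box_b):
    """IoU of two (cx, cy, w, h, angle) boxes."""
    inter = clip_polygon(obb_to_corners(*box_a), obb_to_corners(*box_b))
    inter_area = polygon_area(inter) if len(inter) >= 3 else 0.0
    union = box_a[2] * box_a[3] + box_b[2] * box_b[3] - inter_area
    return inter_area / union if union > 0 else 0.0

def rotated_nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the best-scoring box, drop boxes that overlap it too much."""
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    for i in order:
        if all(rotated_iou(boxes[i], boxes[k]) <= iou_threshold for k in keep):
            keep.append(i)
    return keep

# Illustrative boxes: two near-duplicates, one rotated by 90 degrees, one far away.
boxes = [(50, 50, 40, 20, 0.0), (52, 50, 40, 20, 0.05),
         (50, 50, 40, 20, math.pi / 2), (200, 200, 30, 30, 0.3)]
scores = [0.9, 0.8, 0.7, 0.6]
print(rotated_nms(boxes, scores))
```

The only difference from axis-aligned NMS is the IoU function, so an existing NMS loop can usually be reused once the overlap computation takes the angle into account.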

You can still use our Hailo SDK and Dataflow Compiler for quantization and deployment on Hailo hardware. The custom OBB handling can be integrated using HailoRT-pp or through direct modifications to the neural network output.

If you need more detailed guidance on modifying a specific model from our Model Zoo to support OBB, please let me know. I’d be happy to provide more in-depth assistance.

Thanks for the information. I have already done the model adjustment part. For the ONNX translation, after some research, I took the head layers of my custom model as the end nodes and continued from there. Right now I need some guidance on HailoRT-pp and the quantization process. Could you share the YOLOv8n model build process or the post-processing code? Thanks in advance.

Here is a more detailed explanation of my custom model. I'm using a yolov8n-obb.pt model. I found that Hailo takes the 6 convolution layers directly from the head as outputs, so I have exposed those, as well as the additional head layers that produce the OBB output. The trouble is that I don't know how to post-process these outputs, so the YOLOv8n HailoRT-pp steps would be useful. Thanks.

Hey @SAN,

Great to hear that you’ve made progress with adjusting the YOLOv8n-OBB model!

For the post-processing (HailoRT-PP) and quantization steps:

- Post-Processing for OBB: Since you’re dealing with oriented bounding boxes (OBBs), you’ll need to implement Rotated Non-Maximum Suppression (Rotated NMS) in the post-processing step. The default HailoRT-PP for YOLO models works with Axis-Aligned Bounding Boxes (AABB), so this is where you’ll need customization. You can adapt the post-processing to handle the (x_center, y_center, width, height, angle) format you’re working with. You can reference the standard YOLOv8 post-processing configuration in the Hailo Model Zoo and modify it to include the additional angle parameter for OBB handling. Customizing the NMS to take the rotation into account is key here.

- Quantization: For quantization, make sure to provide calibration images that match your OBB format during the compilation process. You can use HailoRT’s quantization options, similar to how it’s done for YOLOv8 models in the Hailo Model Zoo. Focus on ensuring that the qp_scale and qp_zp parameters are set correctly in the model’s YAML configuration.

- Model Compilation: If you’re using the Hailo Model Zoo, you can follow the standard YOLOv8 compilation process and adjust the output head to accommodate the OBB-specific parameters. Make sure to modify both the post-processing step (to handle the angle) and the quantization configurations.
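As a rough illustration of what qp_scale and qp_zp do, the sketch below assumes the usual affine quantization scheme, where a raw integer output q maps back to a float as (q - qp_zp) * qp_scale. The numbers are made up for the example; the real values come from the compiled model's output metadata.

```python
def dequantize(raw_values, qp_scale, qp_zp):
    """Map quantized integers back to floats: real = (q - zero_point) * scale."""
    return [(q - qp_zp) * qp_scale for q in raw_values]

# Hypothetical quantization parameters and raw uint8 outputs, for illustration only.
raw = [0, 64, 128, 255]
print(dequantize(raw, qp_scale=0.05, qp_zp=128))
```

If raw integer outputs are compared directly against float ONNX outputs without this step, the two will look completely unrelated even when the model is behaving correctly.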

Next Steps:

- Adjust your post-processing logic to support Rotated NMS.

- Compile the model with the Dataflow Compiler and ensure proper quantization.

- Modify the YAML configuration to handle the new output format, including the angle for the OBBs.

Let me know if you need more detailed guidance on the configuration or if you’d like some example code to help with the post-processing changes!

Regards

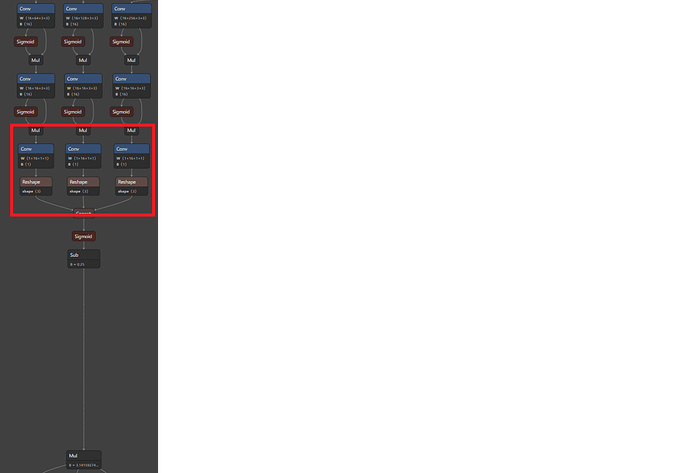

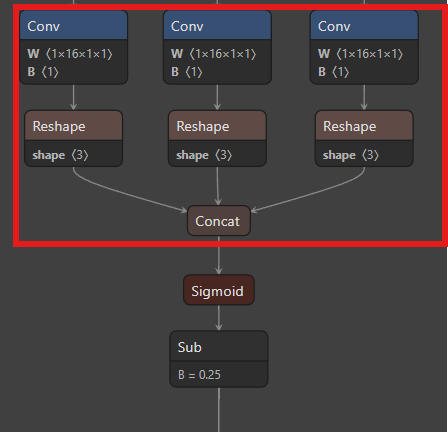

Hi, just for clarification: for YOLOv8n, Hailo uses 6 end nodes before the detect layer (model22) and then post-processes the output. For YOLOv8n-OBB there are 9 end nodes that can be selected and post-processed. To keep it simple, in my case I take only the 3 conv end nodes that produce the angle, as the image below shows: ONNX

The question is: when I run both the .onnx and the .hef just to get the angle, I should get the same output, right? After optimizing the .har file there could be some change in the output, but it should be slight, not dramatically different. I optimized the .har using only a resized dataset. This is my code:

    import os

    import numpy as np
    from PIL import Image

    images_path = "…/data/overlap"
    # Collect the .jpg calibration images
    images_list = [img_name for img_name in os.listdir(images_path) if os.path.splitext(img_name)[1] == ".jpg"]

    calib_dataset = np.zeros((len(images_list), 640, 640, 3))
    for idx, img_name in enumerate(sorted(images_list)):
        # convert("RGB") guards against grayscale or RGBA files
        img = Image.open(os.path.join(images_path, img_name)).convert("RGB")
        img = img.resize((640, 640))  # Resize the image to 640x640
        img = np.array(img)
        calib_dataset[idx, :, :, :] = img

    np.save("calib_set.npy", calib_dataset)

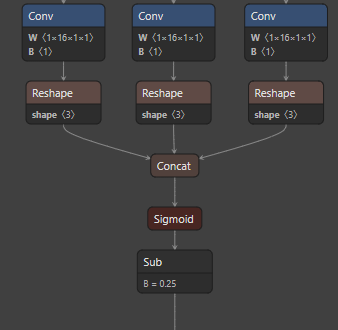

*For your information, I already post-process the output from the 3 nodes with a few simple steps: reshape → concat → sigmoid → subtract 0.25 → multiply by π → angle.
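The chain of steps above can be sketched in plain Python as follows. It assumes each of the 3 angle end nodes yields one H x W grid of raw logits (for a 640x640 input that would typically be 80x80, 40x40 and 20x20); the function names are illustrative, and in practice the same steps would be vectorized with NumPy.

```python
import math

def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def decode_angles(angle_maps):
    """angle_maps: list of per-scale 2D grids (H x W) of raw angle logits."""
    # reshape + concat: flatten each grid row by row and join the scales in order
    logits = [v for grid in angle_maps for row in grid for v in row]
    # sigmoid -> subtract 0.25 -> multiply by pi: angles fall in (-pi/4, 3*pi/4)
    return [(sigmoid(v) - 0.25) * math.pi for v in logits]

# Tiny made-up grids standing in for the three scales.
maps = [[[0.0, 1.0], [-1.0, 2.0]], [[0.5]], [[-3.0]]]
print(decode_angles(maps))
```

Running the same function on the ONNX outputs and on the dequantized HEF outputs gives two angle lists that can be compared element by element.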

I also want to test the YOLOv8n-OBB model, but there are no default cfg YAML, alls, or post-processing files for OBB in the Model Zoo. It would be great if you could share resources, or explain the steps and I will try.

Hi @saurabh, as far as I know there is a model called yolov8n_obb from Ultralytics. Its structure is similar to YOLOv8n; the only difference is that the last detection layer also predicts an angle. That is the basic structure of the model. Based on my understanding, this is what we have to do:

- Choose the end nodes based on how you will post-process them. In my case I followed the Hailo Model Zoo, where Hailo chooses 6 conv nodes as the end nodes and then post-processes them. For OBB I choose 3 additional conv nodes, which means I have 9 end nodes. These are the 3 conv end nodes for the angle:

- For the post-processing part we need to apply rotated NMS (RNMS), which you can add on the runner. For testing, though, I just ran the converted .hef on the Hailo device, took the raw output, and post-processed it on my CPU. This way you can check that the raw model output matches the original model (.pt, .onnx).

runner.load_model_script(alls)

For the optimization, I simply resized the images to 640 x 640 and optimized with this calibration dataset. This is the code:

    import os

    import numpy as np
    from PIL import Image
    from hailo_sdk_client import ClientRunner

    images_path = "../data/overlap"
    # Collect the .jpg calibration images
    images_list = [img_name for img_name in os.listdir(images_path) if os.path.splitext(img_name)[1] == ".jpg"]

    calib_dataset = np.zeros((len(images_list), 640, 640, 3))
    for idx, img_name in enumerate(sorted(images_list)):
        # convert("RGB") guards against grayscale or RGBA files
        img = Image.open(os.path.join(images_path, img_name)).convert("RGB")
        img = img.resize((640, 640))  # Resize the image to 640x640
        img = np.array(img)
        calib_dataset[idx, :, :, :] = img

    np.save("calib_set.npy", calib_dataset)

    model_name = "best"
    hailo_model_har_name = f"{model_name}_hailo_model9.har"
    assert os.path.isfile(hailo_model_har_name), "Please provide valid path for HAR file"

    # Load the parsed HAR and quantize it with the calibration set
    runner = ClientRunner(har=hailo_model_har_name)
    runner.optimize(calib_dataset)

    # Save the result state to a Quantized HAR file
    quantized_model_har_path = f"{model_name}_quantized_model.har"
    runner.save_har(quantized_model_har_path)

Problem:

I managed to optimize it and convert .har → .hef, but the output I get from the convolution nodes is not the same as the Ultralytics model's. Even if my quantization were poor, the output should differ only slightly, but in my case it is not even similar.
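One way to put a number on "slightly different" versus "not even similar" is to compare the two outputs with a maximum absolute difference and a cosine similarity. The sketch below is a generic check in plain Python, not a Hailo tool. It assumes both outputs have already been dequantized to floats and flattened in the same element order; a layout mismatch (for example NCHW from ONNX versus NHWC from the device) would by itself make the two look unrelated.

```python
import math

def compare_outputs(reference, candidate):
    """Return (max_abs_diff, cosine_similarity) for two equally long flat lists."""
    if not reference or len(reference) != len(candidate):
        raise ValueError("outputs must be non-empty and have the same length")
    max_abs = max(abs(r - c) for r, c in zip(reference, candidate))
    dot = sum(r * c for r, c in zip(reference, candidate))
    norm = math.sqrt(sum(r * r for r in reference)) * math.sqrt(sum(c * c for c in candidate))
    cosine = dot / norm if norm > 0 else 0.0
    return max_abs, cosine

# Made-up values: a reference output and a slightly perturbed copy of it.
onnx_out = [0.1, -0.5, 2.0, 1.2]
hef_out = [0.12, -0.48, 1.95, 1.25]
print(compare_outputs(onnx_out, hef_out))
```

A cosine similarity close to 1 with a small maximum difference points to ordinary quantization noise, while a value near 0 suggests a structural problem such as input scaling, channel order, or output layout rather than calibration quality.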

If you manage to get it working, please do share your ideas, @saurabh. Thanks in advance.

@SAN

Thank you so much for the detailed explanation, this is going to help a lot. I will try.

@SAN

I am able to create the HEF for OBB, but I am unable to test the result because of the post-processing.

Have you written the post-processing part? I am unable to build the post-processing for the outputs. I am unsure how to start and where to find relevant information.

It would be great if you can share.

Thank you.

Okay, sure. Could you tell me which end nodes you used and how you optimized the model? For the post-processing I'm still testing with some code from Ultralytics, which you can get from the head module. Without knowing the end nodes, there could be many ways to post-process the raw output.

Hi @saurabh, I have posted a new topic about a problem I'm facing with my pre-processing code; it might be helpful for you: .har vs .hef

@SAN

Yes, probably the same 9 nodes (3 for the angle). I used the pose alls script, since the end nodes are the same. And I am using the DOTA dataset for quantization calibration.

I also looked at the Ultralytics head file to understand what exactly is written there.

Probably this is what we need:

I am new to ML and there are still a lot of things I need to study, so it seems a little difficult for me to proceed. I will try, though.

So, in case you can share it would be great and very helpful.

Oh, that's nice. Okay, let me share how I test it. For the pre-processing, yes, you're right, Ultralytics has some in-depth code, but I do have the pre-processing code in the Google Colab I gave you. The ONNX code is in the last section of the Colab.

Let me give some idea. The angle = torch.cat … code that you see is this part:

As I saw, the angle code is quite easy to post-process, since it is sigmoid → subtract 0.25 → multiply by π, which gives you the angle. With this you can check the output angle data against the .pt/.onnx model.

But the problem I'm facing is this: with the .har file I get the correct output, as I tested with the 3 conv nodes, but when I convert to .hef I get 2 issues:

- A different input buffer size, even though the input is the same, which doesn't make sense.

- Even when I make the input buffer size the same as for the .har file, the outputs are still not the same at all.

Hope you can find a solution, @saurabh.

@SAN

Thank you so much. I will try.

Just for confirmation –

I can use this code from pose-process to extract boxes…

And I will have to calculate angle separately. And process the boxes and angle using

Not sure if it is correct. Maybe not, because the inputs are different. But I think the approach should be very similar?
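For what it's worth, combining the box outputs with a separately decoded angle could look roughly like the sketch below. It is written from memory of how the Ultralytics OBB head decodes one grid cell (DFL expectation over 16 bins per side, then a rotation of the centre offset, as in its dist2rbox helper), so treat the details as assumptions to verify against the head file. The function names, the left/top/right/bottom channel order and the +0.5 anchor offset are part of those assumptions.

```python
import math

def dfl_expectation(logits):
    """Softmax over the bins, then the expected bin index (a distance in grid units)."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    return sum(i * e for i, e in enumerate(exps)) / sum(exps)

def decode_obb(box_logits, angle, anchor_xy, stride, reg_max=16):
    """Decode one cell: box_logits holds 4 * reg_max values (left, top, right, bottom)."""
    left, top, right, bottom = (
        dfl_expectation(box_logits[k * reg_max:(k + 1) * reg_max]) for k in range(4)
    )
    cos_a, sin_a = math.cos(angle), math.sin(angle)
    # Offset of the box centre from the anchor point, rotated by the predicted angle.
    xf, yf = (right - left) / 2.0, (bottom - top) / 2.0
    cx = anchor_xy[0] + xf * cos_a - yf * sin_a
    cy = anchor_xy[1] + xf * sin_a + yf * cos_a
    # Multiply by the stride to go from grid units to input-image pixels.
    return (cx * stride, cy * stride,
            (left + right) * stride, (top + bottom) * stride, angle)

# Made-up logits that put almost all probability on bins 2, 1, 4 and 3.
logits = [50.0 if i == k else 0.0 for k in (2, 1, 4, 3) for i in range(16)]
print(decode_obb(logits, angle=0.0, anchor_xy=(10.5, 10.5), stride=8))
```

The resulting (x_center, y_center, width, height, angle) tuples, together with the class scores, are what a rotated NMS step would then consume.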

@SAN

I have one more question…

How can we test using the HAR file? I am not sure about it.

I have the compiler set up on Ubuntu and the Hailo connected to a Pi.

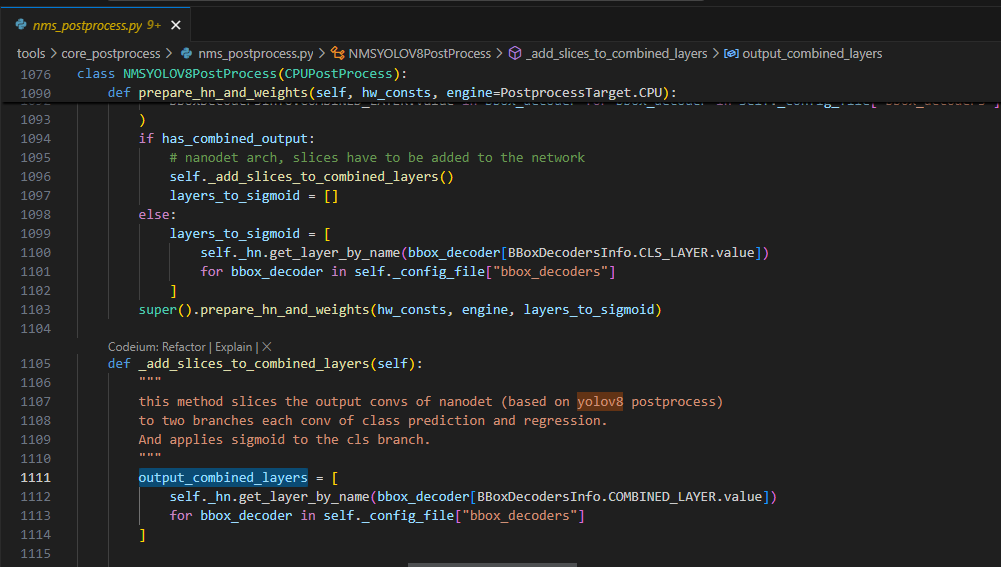

As far as I know, some of the pre-processing/post-processing lives in the Hailo SDK client. You can see it when you start the Hailo AI Software Suite container environment; the code is in there. For example, let me show you the part where they do the post-processing for YOLOv8n:

This is the script I got from hailo_sdk_client. You can get it from inside the Docker container and get some ideas from it.