Hi all,

I have a Raspberry Pi 5 with the AI HAT+ and I’m running Raspberry Pi OS Lite for my project. I’m pretty new to all this and need a bit of help.

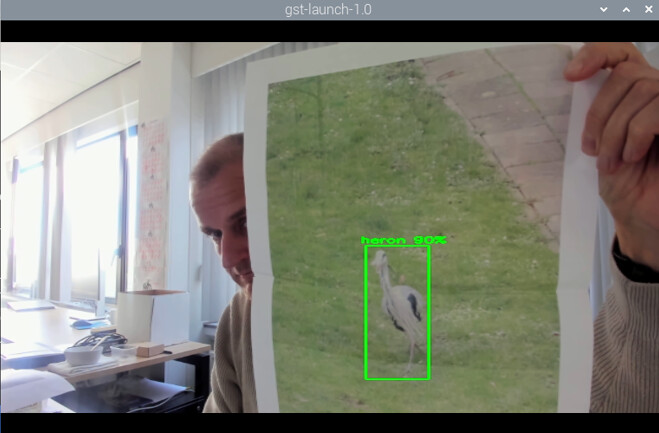

The goal of my project is to detect herons that visit my pond and, eventually, to scare them away. I’m using a simple Reolink camera and Frigate with a custom-trained model to detect them.

Everything seems to work fine at first: Frigate starts, I see my camera feed, and the Frigate logs show no errors or warnings.

After a while, when motion is sent to the Hailo-8L chip for processing (green box around the object in Frigate’s debug view), Frigate suddenly stops and the watchdog restarts it. Without motion, the system remains stable. As soon as a detection is processed again, the restart happens again, and again, …

Below is the last part of my Frigate log, from the point where things start to go wrong:

    2025-10-20 19:20:04.039774449 [2025-10-20 19:20:04] frigate.api.fastapi_app INFO : FastAPI started
    2025-10-20 19:20:11.052746746 Process detector:hailo:
    2025-10-20 19:20:11.052753394 Traceback (most recent call last):
    2025-10-20 19:20:11.052755097   File "/usr/lib/python3.11/multiprocessing/process.py", line 314, in _bootstrap
    2025-10-20 19:20:11.052756190     self.run()
    2025-10-20 19:20:11.052757449   File "/opt/frigate/frigate/util/process.py", line 41, in run_wrapper
    2025-10-20 19:20:11.052758338     return run(*args, **kwargs)
    2025-10-20 19:20:11.052759283            ^^^^^^^^^^^^^^^^^^^^
    2025-10-20 19:20:11.052760394   File "/usr/lib/python3.11/multiprocessing/process.py", line 108, in run
    2025-10-20 19:20:11.052761412     self._target(*self._args, **self._kwargs)
    2025-10-20 19:20:11.052762949   File "/opt/frigate/frigate/object_detection/base.py", line 136, in run_detector
    2025-10-20 19:20:11.052764005     detections = object_detector.detect_raw(input_frame)
    2025-10-20 19:20:11.052765079                  ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
    2025-10-20 19:20:11.052766283   File "/opt/frigate/frigate/object_detection/base.py", line 86, in detect_raw
    2025-10-20 19:20:11.052767375     return self.detect_api.detect_raw(tensor_input=tensor_input)
    2025-10-20 19:20:11.052771301            ^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^^
    2025-10-20 19:20:11.052772486   File "/opt/frigate/frigate/detectors/plugins/hailo8l.py", line 370, in detect_raw
    2025-10-20 19:20:11.052774968     if det.shape[0] < 5:
    2025-10-20 19:20:11.052775690        ~~~~~~~~~^^^
    2025-10-20 19:20:11.052776523 IndexError: tuple index out of range
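
If I read the traceback correctly, `det.shape` is an empty tuple when the check on line 370 runs, so `det.shape[0]` has nothing to index. I have not looked at how Frigate builds `det`, so the sketch below only reproduces the same error message in plain Python. The two helper names are made up for illustration and are not part of Frigate.

```python
def too_few_fields(shape: tuple) -> bool:
    # Same comparison as the failing line `det.shape[0] < 5`,
    # but applied to a bare shape tuple (hypothetical helper).
    return shape[0] < 5


def too_few_fields_guarded(shape: tuple) -> bool:
    # Hypothetical guard: treat an empty shape (a scalar value)
    # as "too few fields" instead of indexing into it.
    return len(shape) == 0 or shape[0] < 5


# A 1-D row with 6 values passes the original check without error.
print(too_few_fields((6,)))

# An empty shape is handled by the guarded variant.
print(too_few_fields_guarded(()))

# The unguarded check fails on an empty shape with the message from my log.
try:
    too_few_fields(())
except IndexError as exc:
    print(f"IndexError: {exc}")
```

So my guess is that the model output reaching this check has fewer dimensions than the detector plugin expects, but that is an assumption on my side.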

I’m using docker-compose to run Frigate, and the Frigate section of my docker-compose.yml looks like this:

    frigate:
      container_name: frigate
      image: ghcr.io/blakeblackshear/frigate:stable
      restart: unless-stopped
      privileged: true
      shm_size: "512mb"
      devices:
        - /dev/hailo0:/dev/hailo0
      volumes:
        - ./frigate/config:/config
        - ./frigate/media:/media
        - /etc/localtime:/etc/localtime:ro
      ports:
        - "5000:5000"
        - "8554:8554"
        - "8555:8555/tcp"
        - "8555:8555/udp"
      environment:
        - TZ=Europe/Brussels

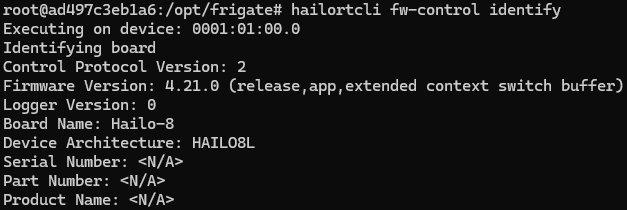

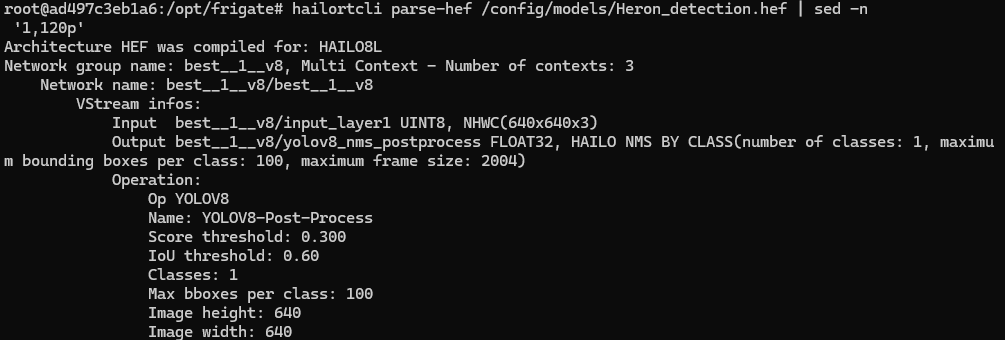

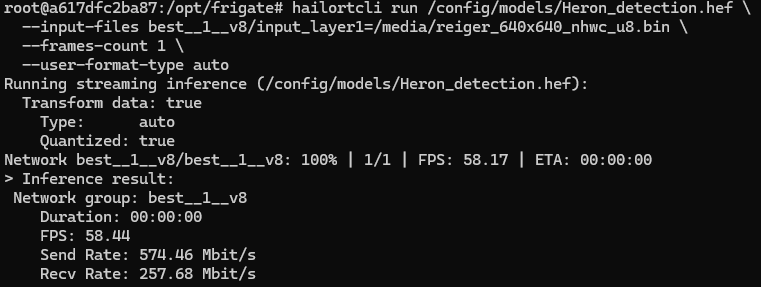

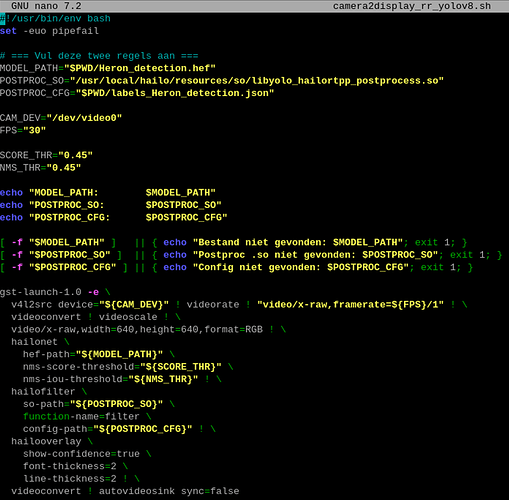

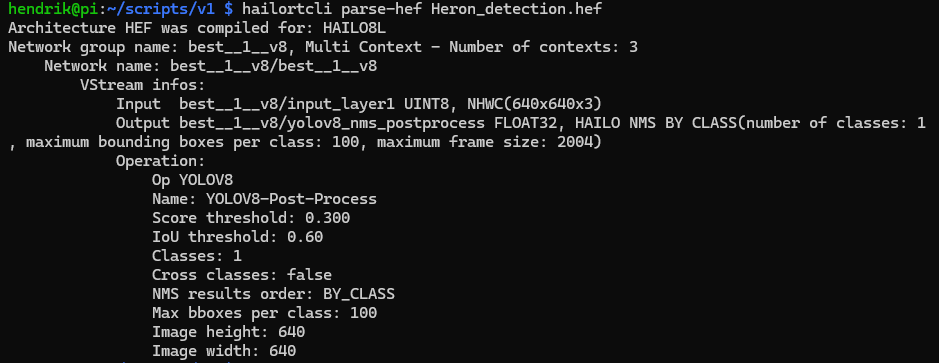

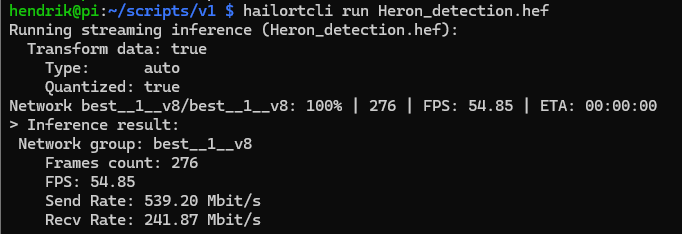

Below is some info about my custom model

(initially trained in Colab: !yolo task=detect mode=train model=yolov8s.pt data=/content/datasets/maker-nano-2/data.yaml epochs=100 batch=16 imgsz=640 plots=True --> afterwards compiled and quantized)

    {
      "ConfigVersion": 11,
      "Checksum": "638865bab42d64cfb46af36e36ceb761ea4cb0437384badeb60c77efea4b3cd8",
      "DEVICE": [
        {
          "DeviceType": "HAILO8L",
          "RuntimeAgent": "HAILORT",
          "SupportedDeviceTypes": "HAILORT/HAILO8L, HAILORT/HAILO8",
          "EagerBatchSize": 1
        }
      ],
      "PRE_PROCESS": [
        {
          "InputN": 1,
          "InputH": 640,
          "InputW": 640,
          "InputC": 3,
          "InputQuantEn": true
        }
      ],
      "MODEL_PARAMETERS": [
        {
          "ModelPath": "Heron_detection--640x640_quant_hailort_multidevice_1.hef"
        }
      ],
      "POST_PROCESS": [
        {
          "OutputPostprocessType": "DetectionYoloHailo",
          "OutputNumClasses": 1,
          "LabelsPath": "labels_Heron_detection.json"
        }
      ]
    }
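
To compare what the model expects with the `model` section of my Frigate config, the JSON above can be read with a few lines of Python. This is only a sketch: the JSON is a trimmed copy embedded as a string, and `summarize_model` is a name I made up.

```python
import json

# Trimmed copy of the model JSON above (only the fields used here).
MODEL_JSON = """
{
  "PRE_PROCESS": [
    {"InputN": 1, "InputH": 640, "InputW": 640, "InputC": 3, "InputQuantEn": true}
  ],
  "POST_PROCESS": [
    {"OutputPostprocessType": "DetectionYoloHailo", "OutputNumClasses": 1}
  ]
}
"""


def summarize_model(raw: str) -> dict:
    """Extract the input dimensions and class count from the model JSON."""
    cfg = json.loads(raw)
    pre = cfg["PRE_PROCESS"][0]
    post = cfg["POST_PROCESS"][0]
    return {
        # Order follows the field names in the JSON: N, H, W, C.
        "input_shape": (pre["InputN"], pre["InputH"], pre["InputW"], pre["InputC"]),
        "quantized_input": pre["InputQuantEn"],
        "num_classes": post["OutputNumClasses"],
    }


summary = summarize_model(MODEL_JSON)
print(summary)
```

The height and width reported here (640 x 640) match the `width` and `height` I set under `model` in frigate.yml, and the single class matches the one label I track.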

My frigate.yml:

    mqtt:
      host: 192.168.0.100
      port: 1883
      topic_prefix: frigate
      user: hendrik
      password: xxxxxxxxx

    detectors:
      hailo:
        type: hailo8l
        device: PCIe

    model:
      path: /config/models/Heron_detection.hef
      labelmap_path: /config/labelmap/heron.txt
      input_pixel_format: rgb
      width: 640
      height: 640
      input_tensor: nhw
      input_dtype: int
      model_type: yolo-generic

    database:
      path: /config/frigate.db

    logger:
      default: info
      logs:
        frigate.detectors.hailo: debug
        frigate.object_detection: debug

    birdseye:
      enabled: false

    objects:
      track:
        - heron

    ffmpeg:
      hwaccel_args: preset-rpi-64-h264

    go2rtc:
      streams:
        reolink_main:
          - rtsp://admin:piwsij-9zetsU-peqnap@192.168.0.154/h265Preview_01_main
        reolink_sub:
          - rtsp://admin:piwsij-9zetsU-peqnap@192.168.0.154/h264Preview_01_sub

    cameras:
      reiger_cam:
        detect:
          enabled: true
          width: 640
          height: 640
          fps: 3
        ffmpeg:
          inputs:
            - path: rtsp://localhost:8554/reolink_sub
              roles:
                - detect
            - path: rtsp://localhost:8554/reolink_main
              roles:
                - record
        snapshots:
          enabled: true
          bounding_box: true
          crop: false
          retain:
            default: 10
        objects:
          track:
            - heron
          filters:
            heron:
              min_score: 0.15
              threshold: 0.25
              min_area: 50
              max_area: 999999
        motion:
          threshold: 30
          contour_area: 10
          improve_contrast: true

    version: 0.16-0

Any help would be welcome as I’m stuck at this point.

Many thanks in advance.