I have the same setup and the same issue as @_Mab.

I use the models listed at: hailo_model_zoo/docs/public_models/HAILO8/HAILO8_object_detection.rst at master · hailo-ai/hailo_model_zoo · GitHub

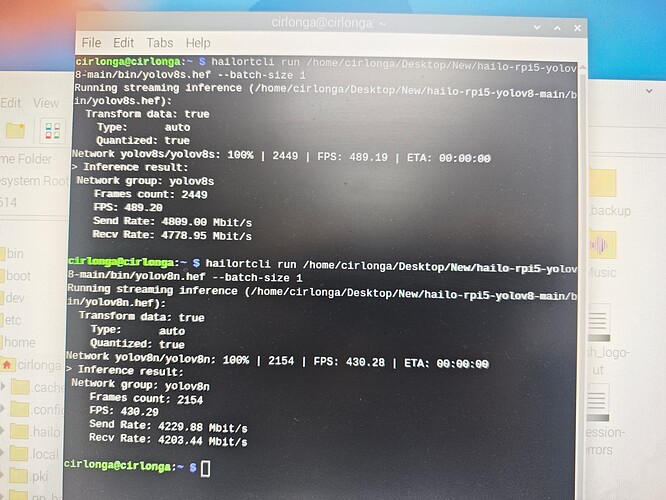

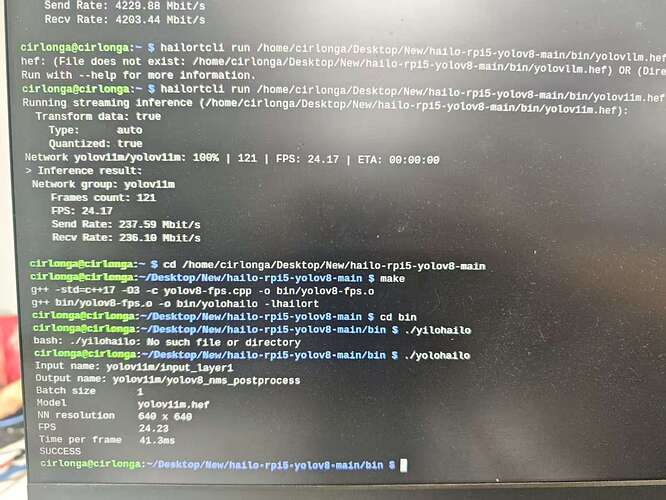

hailortcli run resources/yolov11m.hef --batch-size 1

Running streaming inference (resources/yolov11m.hef):

Transform data: true

Type: auto

Quantized: true

Network yolov11m/yolov11m: 100% | 121 | FPS: 24.17 | ETA: 00:00:00

> Inference result:

Network group: yolov11m

Frames count: 121

FPS: 24.17

Send Rate: 237.59 Mbit/s

Recv Rate: 236.11 Mbit/s

So for yolov11m I would expect about 50 FPS, but I get only about half of that (24.17 FPS).

I enabled PCIe Gen 3, which had no effect on the FPS.
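As a rough sanity check on the numbers above, the sketch below relates the reported send rate to the frame rate and estimates the bandwidth 50 FPS would need. The 640x640 RGB uint8 input size is an assumption (it is not printed in the output), and the link figure is the theoretical per-lane rate of PCIe Gen 3 before protocol overhead, so treat this as an estimate rather than a measurement.

```python
# Values copied from the hailortcli output above.
MEASURED_FPS = 24.17
SEND_RATE_MBIT = 237.59

# Assumption: the network takes one 640x640 RGB frame, 1 byte per channel.
FRAME_BITS = 640 * 640 * 3 * 8


def rate_mbit(fps: float, frame_bits: int = FRAME_BITS) -> float:
    """Host-to-device data rate in Mbit/s needed to send `fps` frames per second."""
    return fps * frame_bits / 1e6


def gen3_lane_mbit(lanes: int = 1) -> float:
    """Theoretical PCIe Gen 3 payload rate: 8 GT/s per lane with 128b/130b encoding."""
    return lanes * 8e9 * 128 / 130 / 1e6


if __name__ == "__main__":
    implied = rate_mbit(MEASURED_FPS)
    needed = rate_mbit(50)
    print(f"send rate implied by {MEASURED_FPS} FPS: {implied:.2f} Mbit/s "
          f"(reported: {SEND_RATE_MBIT} Mbit/s)")
    print(f"send rate needed for 50 FPS: {needed:.2f} Mbit/s")
    print(f"share of one Gen 3 lane at 50 FPS: {needed / gen3_lane_mbit(1):.1%}")
```

If the assumed input size is right, the implied rate lines up with the reported send rate, and 50 FPS would need only a small share of the raw bandwidth of a single Gen 3 lane. That does not rule out other link-related limits such as transfer latency or DMA overhead; it only suggests raw bandwidth alone may not explain the gap.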

lspci -vv:

0001:01:00.0 Co-processor: Hailo Technologies Ltd. Hailo-8 AI Processor (rev 01)

Subsystem: Hailo Technologies Ltd. Hailo-8 AI Processor

Control: I/O- Mem+ BusMaster+ SpecCycle- MemWINV- VGASnoop- ParErr- Stepping- SERR- FastB2B- DisINTx-

Status: Cap+ 66MHz- UDF- FastB2B- ParErr- DEVSEL=fast >TAbort- <TAbort- <MAbort- >SERR- <PERR- INTx-

Latency: 0

Interrupt: pin A routed to IRQ 38

Region 0: Memory at 1800000000 (64-bit, prefetchable) [size=16K]

Region 2: Memory at 1800008000 (64-bit, prefetchable) [size=4K]

Region 4: Memory at 1800004000 (64-bit, prefetchable) [size=16K]

Capabilities: [80] Express (v2) Endpoint, MSI 00

DevCap: MaxPayload 256 bytes, PhantFunc 0, Latency L0s <64ns, L1 unlimited

ExtTag+ AttnBtn- AttnInd- PwrInd- RBE+ FLReset+ SlotPowerLimit 0W

DevCtl: CorrErr+ NonFatalErr+ FatalErr+ UnsupReq+

RlxdOrd+ ExtTag+ PhantFunc- AuxPwr- NoSnoop+ FLReset-

MaxPayload 256 bytes, MaxReadReq 512 bytes

DevSta: CorrErr- NonFatalErr- FatalErr- UnsupReq- AuxPwr- TransPend-

LnkCap: Port #0, Speed 8GT/s, Width x4, ASPM L0s L1, Exit Latency L0s <1us, L1 <2us

ClockPM- Surprise- LLActRep- BwNot- ASPMOptComp+

LnkCtl: ASPM L1 Enabled; RCB 64 bytes, Disabled- CommClk+

ExtSynch- ClockPM- AutWidDis- BWInt- AutBWInt-

LnkSta: Speed 8GT/s, Width x1 (downgraded)

TrErr- Train- SlotClk+ DLActive- BWMgmt- ABWMgmt-

DevCap2: Completion Timeout: Not Supported, TimeoutDis+ NROPrPrP- LTR+

10BitTagComp- 10BitTagReq- OBFF Not Supported, ExtFmt+ EETLPPrefix-

EmergencyPowerReduction Not Supported, EmergencyPowerReductionInit-

FRS- TPHComp- ExtTPHComp-

AtomicOpsCap: 32bit- 64bit- 128bitCAS-

DevCtl2: Completion Timeout: 50us to 50ms, TimeoutDis- LTR+ 10BitTagReq- OBFF Disabled,

AtomicOpsCtl: ReqEn-

LnkCap2: Supported Link Speeds: 2.5-8GT/s, Crosslink- Retimer- 2Retimers- DRS-

LnkCtl2: Target Link Speed: 8GT/s, EnterCompliance- SpeedDis-

Transmit Margin: Normal Operating Range, EnterModifiedCompliance- ComplianceSOS-

Compliance Preset/De-emphasis: -6dB de-emphasis, 0dB preshoot

LnkSta2: Current De-emphasis Level: -6dB, EqualizationComplete+ EqualizationPhase1+

EqualizationPhase2+ EqualizationPhase3+ LinkEqualizationRequest-

Retimer- 2Retimers- CrosslinkRes: unsupported

Capabilities: [e0] MSI: Enable- Count=1/1 Maskable- 64bit+

Address: 0000000000000000 Data: 0000

Capabilities: [f8] Power Management version 3

Flags: PMEClk- DSI- D1- D2- AuxCurrent=0mA PME(D0-,D1-,D2-,D3hot+,D3cold-)

Status: D3 NoSoftRst+ PME-Enable- DSel=0 DScale=1 PME-

Capabilities: [100 v1] Vendor Specific Information: ID=1556 Rev=1 Len=008 <?>

Capabilities: [108 v1] Latency Tolerance Reporting

Max snoop latency: 0ns

Max no snoop latency: 0ns

Capabilities: [110 v1] L1 PM Substates

L1SubCap: PCI-PM_L1.2+ PCI-PM_L1.1+ ASPM_L1.2+ ASPM_L1.1+ L1_PM_Substates+

PortCommonModeRestoreTime=10us PortTPowerOnTime=10us

L1SubCtl1: PCI-PM_L1.2- PCI-PM_L1.1- ASPM_L1.2- ASPM_L1.1-

T_CommonMode=0us LTR1.2_Threshold=0ns

L1SubCtl2: T_PwrOn=10us

Capabilities: [128 v1] Alternative Routing-ID Interpretation (ARI)

ARICap: MFVC- ACS-, Next Function: 0

ARICtl: MFVC- ACS-, Function Group: 0

Capabilities: [200 v2] Advanced Error Reporting

UESta: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UEMsk: DLP- SDES- TLP- FCP- CmpltTO- CmpltAbrt- UnxCmplt- RxOF- MalfTLP- ECRC- UnsupReq- ACSViol-

UESvrt: DLP+ SDES- TLP- FCP+ CmpltTO- CmpltAbrt- UnxCmplt- RxOF+ MalfTLP+ ECRC- UnsupReq- ACSViol-

CESta: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr-

CEMsk: RxErr- BadTLP- BadDLLP- Rollover- Timeout- AdvNonFatalErr+

AERCap: First Error Pointer: 00, ECRCGenCap+ ECRCGenEn- ECRCChkCap+ ECRCChkEn-

MultHdrRecCap- MultHdrRecEn- TLPPfxPres- HdrLogCap-

HeaderLog: 00000000 00000000 00000000 00000000

Capabilities: [300 v1] Secondary PCI Express

LnkCtl3: LnkEquIntrruptEn- PerformEqu-

LaneErrStat: 0

Kernel driver in use: hailo

Kernel modules: hailo_pci
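The two lines in this dump that describe the link are LnkCap (what the device supports) and LnkSta (what was actually negotiated). A minimal sketch for comparing them is shown here; the helper names are hypothetical, and the sample text is just the two lines copied from the output above. On a real system the text would come from `lspci -vv`.

```python
import re

# The two link lines copied from the lspci -vv output above.
LSPCI_SNIPPET = """\
LnkCap: Port #0, Speed 8GT/s, Width x4, ASPM L0s L1, Exit Latency L0s <1us, L1 <2us
LnkSta: Speed 8GT/s, Width x1 (downgraded)
"""


def parse_link(text: str, field: str) -> tuple[float, int]:
    """Return (speed in GT/s, lane count) from a 'LnkCap' or 'LnkSta' line."""
    # The trailing colon keeps 'LnkCap' from matching 'LnkCap2' and so on.
    match = re.search(rf"{field}:.*?Speed ([\d.]+)GT/s.*?Width x(\d+)", text)
    if match is None:
        raise ValueError(f"no {field} line found")
    return float(match.group(1)), int(match.group(2))


def describe_link(text: str) -> str:
    """Summarize whether the negotiated link is below the device capability."""
    cap_speed, cap_width = parse_link(text, "LnkCap")
    sta_speed, sta_width = parse_link(text, "LnkSta")
    notes = []
    if sta_speed < cap_speed:
        notes.append(f"speed {sta_speed}GT/s below capable {cap_speed}GT/s")
    if sta_width < cap_width:
        notes.append(f"width x{sta_width} below capable x{cap_width}")
    return "; ".join(notes) if notes else "link runs at full capability"


if __name__ == "__main__":
    print(describe_link(LSPCI_SNIPPET))
```

For the output above this reports the speed as matching (8GT/s, so Gen 3 is active) and the width as x1 against a capability of x4. If the host slot only provides a single lane, x1 is the most the link can negotiate, regardless of what the Hailo-8 supports.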

hailortcli fw-control identify:

Executing on device: 0001:01:00.0

Identifying board

Control Protocol Version: 2

Firmware Version: 4.20.0 (release,app,extended context switch buffer)

Logger Version: 0

Board Name: Hailo-8

Device Architecture: HAILO8

Serial Number: <N/A>

Part Number: <N/A>

Product Name: <N/A>