Hello everyone,

I am experiencing performance issues with GStreamer object detection pipelines running on the Raspberry Pi AI HAT+ (Hailo-8) using HailoRT v4.23.0.

When I run this simple pipeline, which acquires frames from a USB camera and runs inference with a YOLOv8s HEF, I notice a consistent performance drop over time.

HAILO_MONITOR=1 gst-launch-1.0 \
v4l2src device=/dev/video0 ! "image/jpeg,width=640,height=480" ! jpegdec ! \
videoscale ! \
videoconvert ! \
queue leaky=no max-size-buffers=3 max-size-bytes=0 max-size-time=0 ! \
hailonet hef-path=/usr/local/hailo/resources/models/hailo8/yolov8s.hef batch-size=2 nms-score-threshold=0.6 nms-iou-threshold=0.45 output-format-type=HAILO_FORMAT_TYPE_FLOAT32 ! \
fakesink -e

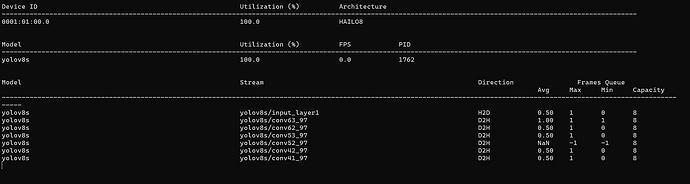

hailortcli monitor shows that utilization starts at 19% and the frame rate at 32 FPS. Utilization then slowly increases, reaching 100% within a minute.

After utilization reaches its maximum, the frame rate starts decreasing, reaching:

- 12 FPS after 2 minutes

- 7 FPS after 5 minutes

- 0 FPS after 10 minutes

However, when I run the model benchmark with the command

hailortcli run -t 600 --batch-size=2 /usr/local/hailo/resources/models/hailo8/yolov8s.hef

the frame rate is stable at 368 FPS.

I tried other HEF models under /usr/local/hailo/resources/models/hailo8 and I get the same behaviour.

Any suggestions?

Hi @tognirob,

1. Queue Configuration with leaky=no

The queue is configured with leaky=no, which means that when it fills up, it blocks upstream elements. This creates backpressure that propagates back to the camera source, causing frames to accumulate in system memory.

2. Batch Size Mismatch

You are using batch-size=2, but your pipeline is a single stream. The hailonet element will wait to accumulate 2 frames before running inference, adding latency and potentially causing buffer buildup.

3. Missing Output Queue

There’s no queue after hailonet to decouple inference from the sink, which can cause blocking.

4. Float32 Output Format

Using HAILO_FORMAT_TYPE_FLOAT32 increases memory bandwidth significantly compared to native quantized output.

Can you please try:

HAILO_MONITOR=1 gst-launch-1.0 \
v4l2src device=/dev/video0 io-mode=mmap ! \
"image/jpeg,width=640,height=480,framerate=30/1" ! \
jpegdec ! \
videoscale ! \
videoconvert ! \
video/x-raw,format=RGB ! \
queue leaky=downstream max-size-buffers=3 max-size-bytes=0 max-size-time=0 ! \
hailonet hef-path=/usr/local/hailo/resources/models/hailo8/yolov8s.hef batch-size=1 nms-score-threshold=0.6 nms-iou-threshold=0.45 ! \
queue leaky=downstream max-size-buffers=3 max-size-bytes=0 max-size-time=0 ! \
fakesink sync=false -e

Thanks,

Thank you for your reply.

I tried your pipeline and it’s giving me the same issue.

I found out that the problem was caused by exporting the variable HAILO_TRACE=scheduler.

Without that it works perfectly.
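For anyone hitting the same thing, here is a minimal sketch of how to check for the variable and clear it before re-running the pipeline. It assumes a POSIX-style shell. The startup files it searches (~/.bashrc, ~/.profile) are only a guess at where the export might live; on your system it may have been set somewhere else.

```shell
# List any Hailo-related variables exported in the current shell.
env | grep '^HAILO_' || echo "no HAILO_* variables exported"

# Remove the tracing variable for this shell session only.
unset HAILO_TRACE

# Confirm it is gone before launching the pipeline again.
if [ -z "${HAILO_TRACE+x}" ]; then
    echo "HAILO_TRACE is not set"
fi

# Assumption: the export may live in a startup file; look for it so it
# does not come back in the next session.
grep -n 'HAILO_TRACE' "$HOME/.bashrc" "$HOME/.profile" 2>/dev/null || true
```

Note that unset only affects the current shell. If the export is in a startup file, it has to be removed there as well.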


Thanks @tognirob for sharing the solution with us!