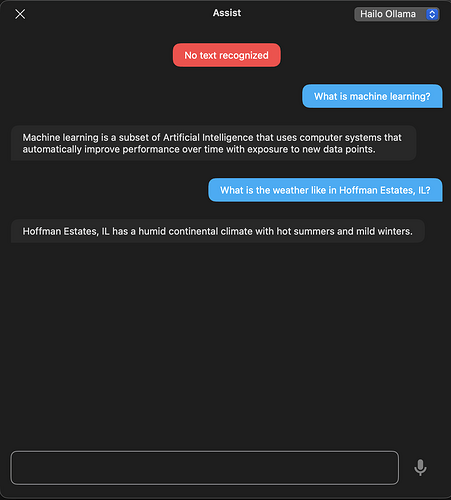

hailo-ollama works for plain chat, but returns HTTP 500 when the request includes the tools field or a JSON-schema object in the format field. This prevents using hailo-ollama with Home Assistant Assist, which sends these fields.

Plain chat OK:

curl -sS -X POST "http://<host>:8000/api/chat" -H "Content-Type: application/json" -d '{
"model":"llama3.2:1b",
"messages":[{"role":"user","content":"hello"}],
"stream":false
}'

format schema → 500:

curl -sS -X POST "http://<host>:8000/api/chat" -H "Content-Type: application/json" -d '{
"model":"llama3.2:1b",
"messages":[{"role":"user","content":"Return {\"greeting\":\"...\"} and nothing else."}],
"stream":false,
"format":{"type":"object","properties":{"greeting":{"type":"string"}},"required":["greeting"]}
}'

tools → 500:

curl -sS -X POST "http://<host>:8000/api/chat" -H "Content-Type: application/json" -d '{
"model":"llama3.2:1b",
"messages":[{"role":"user","content":"Call the tool."}],
"stream":false,
"tools":[{"type":"function","function":{"name":"demo_tool","description":"Returns a fixed string","parameters":{"type":"object","properties":{},"required":[]}}}]
}'

In both failing cases the error includes: TreeToObjectMapper::mapString(): Node is NOT a STRING.
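
For anyone who wants to re-run the three cases in one go (for example after a firmware or package update), here is a minimal sketch in Python using only the standard library. The payloads are copied from the curl commands in this report; the function names (build_payloads, post_chat, run) are made up for illustration and are not part of hailo-ollama or Ollama. It assumes the server listens on port 8000 as in this report.

```python
import json
import urllib.error
import urllib.request

MODEL = "llama3.2:1b"  # model used in this report


def build_payloads(model=MODEL):
    """Return the three request bodies from this report, keyed by case name."""
    base = {"model": model, "stream": False}
    return {
        "plain": {
            **base,
            "messages": [{"role": "user", "content": "hello"}],
        },
        "format_schema": {
            **base,
            "messages": [{
                "role": "user",
                "content": 'Return {"greeting":"..."} and nothing else.',
            }],
            "format": {
                "type": "object",
                "properties": {"greeting": {"type": "string"}},
                "required": ["greeting"],
            },
        },
        "tools": {
            **base,
            "messages": [{"role": "user", "content": "Call the tool."}],
            "tools": [{
                "type": "function",
                "function": {
                    "name": "demo_tool",
                    "description": "Returns a fixed string",
                    "parameters": {"type": "object", "properties": {}, "required": []},
                },
            }],
        },
    }


def post_chat(base_url, payload, timeout=60):
    """POST one payload to <base_url>/api/chat and return (status, body text)."""
    req = urllib.request.Request(
        base_url.rstrip("/") + "/api/chat",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    try:
        with urllib.request.urlopen(req, timeout=timeout) as resp:
            return resp.status, resp.read().decode("utf-8", "replace")
    except urllib.error.HTTPError as err:
        # Non-2xx responses (such as the 500 described here) arrive as HTTPError.
        return err.code, err.read().decode("utf-8", "replace")


def run(base_url):
    """Send all three cases and print the status plus the start of each body."""
    for name, payload in build_payloads().items():
        status, body = post_chat(base_url, payload)
        print(f"{name}: HTTP {status} {body[:200]}")
```

Calling run("http://<host>:8000") with the real host filled in should print one line per case; on the setup described here, the plain case would be expected to succeed and the other two to report 500.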

Feature request: implement Ollama-compatible tool calling and JSON-schema structured outputs (an object in format) so that Assist integrations work.

Thanks.