Hi,

I am writing a Google Colab notebook that runs the Hailo DFC (hailo_dataflow_compiler-3.33.0-py3-none-linux_x86_64.whl) on Ubuntu 22 with Python 3.10. I get the following error when running hailomz compile.

command:

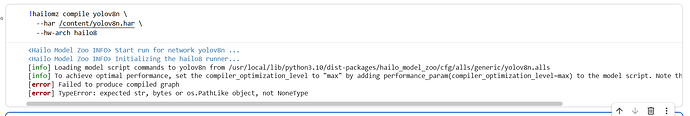

!hailomz compile --ckpt /content/best.onnx --calib-path /content/calib --yaml /usr/local/lib/python3.10/dist-packages/hailo_model_zoo/cfg/networks/yolov8n.yaml --classes 52 --hw-arch hailo8

output:

Start run for network yolov8n …

Initializing the hailo8 runner…

[info] Translation started on ONNX model yolov8n

[info] Restored ONNX model yolov8n (completion time: 00:00:00.07)

[info] Extracted ONNXRuntime meta-data for Hailo model (completion time: 00:00:00.27)

[info] Simplified ONNX model for a parsing retry attempt (completion time: 00:00:00.83)

[warning] ONNX shape inference failed: [ONNXRuntimeError] : 2 : INVALID_ARGUMENT : Failed to load model with error: /onnxruntime_src/onnxruntime/core/graph/model_load_utils.h:46 void onnxruntime::model_load_utils::ValidateOpsetForDomain(const std::unordered_map<std::__cxx11::basic_string, int>&, const onnxruntime::logging::Logger&, bool, const std::string&, int) ONNX Runtime only *guarantees* support for models stamped with official released onnx opset versions. Opset 22 is under development and support for this is limited. The operator schemas and or other functionality may change before next ONNX release and in this case ONNX Runtime will not guarantee backward compatibility. Current official support for domain ai.onnx is till opset 21.

[info] According to recommendations, retrying parsing with end node names: ['/model.22/Concat_3'].

[info] Translation started on ONNX model yolov8n

[info] Restored ONNX model yolov8n (completion time: 00:00:00.04)

[info] Extracted ONNXRuntime meta-data for Hailo model (completion time: 00:00:00.23)

[info] NMS structure of yolov8 (or equivalent architecture) was detected.

[info] In order to use HailoRT post-processing capabilities, these end node names should be used: /model.22/cv2.0/cv2.0.2/Conv /model.22/cv3.0/cv3.0.2/Conv /model.22/cv2.1/cv2.1.2/Conv /model.22/cv3.1/cv3.1.2/Conv /model.22/cv2.2/cv2.2.2/Conv /model.22/cv3.2/cv3.2.2/Conv.

[info] Start nodes mapped from original model: 'images': 'yolov8n/input_layer1'.

[info] End nodes mapped from original model: '/model.22/Concat_3'.

[info] Translation completed on ONNX model yolov8n (completion time: 00:00:01.21)

[info] Translation started on ONNX model yolov8n

[info] Restored ONNX model yolov8n (completion time: 00:00:00.04)

[info] Extracted ONNXRuntime meta-data for Hailo model (completion time: 00:00:00.27)

[info] NMS structure of yolov8 (or equivalent architecture) was detected.

[info] In order to use HailoRT post-processing capabilities, these end node names should be used: /model.22/cv2.0/cv2.0.2/Conv /model.22/cv3.0/cv3.0.2/Conv /model.22/cv2.1/cv2.1.2/Conv /model.22/cv3.1/cv3.1.2/Conv /model.22/cv2.2/cv2.2.2/Conv /model.22/cv3.2/cv3.2.2/Conv.

[info] Start nodes mapped from original model: 'images': 'yolov8n/input_layer1'.

[info] End nodes mapped from original model: '/model.22/cv2.0/cv2.0.2/Conv', '/model.22/cv3.0/cv3.0.2/Conv', '/model.22/cv2.1/cv2.1.2/Conv', '/model.22/cv3.1/cv3.1.2/Conv', '/model.22/cv2.2/cv2.2.2/Conv', '/model.22/cv3.2/cv3.2.2/Conv'.

[info] Translation completed on ONNX model yolov8n (completion time: 00:00:00.97)

[info] Appending model script commands to yolov8n from string

[info] Added nms postprocess command to model script.

[info] Saved HAR to: /content/yolov8n.har

Preparing calibration data…

[info] Loading model script commands to yolov8n from /usr/local/lib/python3.10/dist-packages/hailo_model_zoo/cfg/alls/generic/yolov8n.alls

[info] Loading model script commands to yolov8n from string

[info] Found model with 3 input channels, using real RGB images for calibration instead of sampling random data.

[info] Starting Model Optimization

[warning] Reducing optimization level to 0 (the accuracy won’t be optimized and compression won’t be used) because there’s no available GPU

[warning] Running model optimization with zero level of optimization is not recommended for production use and might lead to suboptimal accuracy results

[info] Model received quantization params from the hn

[info] MatmulDecompose skipped

[info] Starting Mixed Precision

[info] Model Optimization Algorithm Mixed Precision is done (completion time is 00:00:00.55)

[info] LayerNorm Decomposition skipped

[info] Starting Statistics Collector

[info] Using dataset with 64 entries for calibration

Calibration: 100% 64/64 [00:31<00:00, 2.01entries/s]

[info] Model Optimization Algorithm Statistics Collector is done (completion time is 00:00:33.27)

[info] Starting Fix zp_comp Encoding

[info] Model Optimization Algorithm Fix zp_comp Encoding is done (completion time is 00:00:00.00)

[info] Matmul Equalization skipped

[info] Starting MatmulDecomposeFix

[info] Model Optimization Algorithm MatmulDecomposeFix is done (completion time is 00:00:00.00)

[info] Finetune encoding skipped

[info] Bias Correction skipped

[info] Adaround skipped

[info] Quantization-Aware Fine-Tuning skipped

[info] Layer Noise Analysis skipped

[info] Model Optimization is done

[info] Saved HAR to: /content/yolov8n.har

[info] Loading model script commands to yolov8n from /usr/local/lib/python3.10/dist-packages/hailo_model_zoo/cfg/alls/generic/yolov8n.alls

[info] To achieve optimal performance, set the compiler_optimization_level to "max" by adding performance_param(compiler_optimization_level=max) to the model script. Note that this may increase compilation time.

[error] Failed to produce compiled graph

[error] TypeError: expected str, bytes or os.PathLike object, not NoneType

These errors appear, and I don't know what causes them.
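For reference, the ONNX Runtime warning in the Colab log says the model is stamped with opset 22, while official support for the ai.onnx domain goes only up to opset 21. A minimal sketch for confirming which opsets best.onnx actually declares, assuming the `onnx` Python package is installed in the notebook (the helper names `opset_versions`, `exceeds_supported_opset` and `check_onnx_opset` are hypothetical, not part of the Hailo tools):

```python
from typing import Dict

# Highest ai.onnx opset that the ONNX Runtime warning in the log reports as officially supported.
MAX_SUPPORTED_AI_ONNX_OPSET = 21


def opset_versions(model) -> Dict[str, int]:
    """Map each operator-set domain declared by an ONNX ModelProto to its version.

    ONNX stores the default domain as an empty string; it is reported here as "ai.onnx".
    """
    return {(entry.domain or "ai.onnx"): entry.version for entry in model.opset_import}


def exceeds_supported_opset(model, limit: int = MAX_SUPPORTED_AI_ONNX_OPSET) -> bool:
    """Return True if the model's default-domain (ai.onnx) opset is newer than `limit`."""
    return opset_versions(model).get("ai.onnx", 0) > limit


def check_onnx_opset(path: str) -> Dict[str, int]:
    """Load an ONNX file, print its declared opsets, and return them (assumes `pip install onnx`)."""
    import onnx  # imported lazily so the helpers above can be used without onnx installed

    model = onnx.load(path)
    versions = opset_versions(model)
    print(f"{path}: {versions}")
    if exceeds_supported_opset(model):
        print(f"ai.onnx opset {versions['ai.onnx']} is newer than {MAX_SUPPORTED_AI_ONNX_OPSET}")
    return versions
```

In a Colab cell this would be called as `check_onnx_opset("/content/best.onnx")`, i.e. with the same file passed to `--ckpt` above.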

When I run the same command locally on my computer (GTX 1050 Ti GPU), the output is as follows:

(convert_to_hailo) dharam@DESKTOP-4L30DS0:~$ hailomz compile --ckpt data/hailo_docker/shared_with_docker/doc/best.onnx --calib-path data/hailo_docker/shared_with_docker/doc/calib/ --yaml anaconda3/envs/convert_to_hailo/lib/python3.10/site-packages/hailo_model_zoo/cfg/networks/yolov8n.yaml --classes 52 --hw-arch hailo8

[info] No GPU chosen, Selected GPU 0

Start run for network yolov8n …

Initializing the hailo8 runner…

[info] Translation started on ONNX model yolov8n

[info] Restored ONNX model yolov8n (completion time: 00:00:00.11)

[info] Extracted ONNXRuntime meta-data for Hailo model (completion time: 00:00:00.34)

[info] Simplified ONNX model for a parsing retry attempt (completion time: 00:00:01.01)

Traceback (most recent call last):
  File "/home/dharam/anaconda3/envs/convert_to_hailo/bin/hailomz", line 7, in <module>
    sys.exit(main())
  File "/home/dharam/anaconda3/envs/convert_to_hailo/lib/python3.10/site-packages/hailo_model_zoo/main.py", line 122, in main
    run(args)
  File "/home/dharam/anaconda3/envs/convert_to_hailo/lib/python3.10/site-packages/hailo_model_zoo/main.py", line 111, in run
    return handlers[args.command](args)
  File "/home/dharam/anaconda3/envs/convert_to_hailo/lib/python3.10/site-packages/hailo_model_zoo/main_driver.py", line 248, in compile
    _ensure_optimized(runner, logger, args, network_info)
  File "/home/dharam/anaconda3/envs/convert_to_hailo/lib/python3.10/site-packages/hailo_model_zoo/main_driver.py", line 73, in _ensure_optimized
    _ensure_parsed(runner, logger, network_info, args)
  File "/home/dharam/anaconda3/envs/convert_to_hailo/lib/python3.10/site-packages/hailo_model_zoo/main_driver.py", line 108, in _ensure_parsed
    parse_model(runner, network_info, ckpt_path=args.ckpt_path, results_dir=args.results_dir, logger=logger)
  File "/home/dharam/anaconda3/envs/convert_to_hailo/lib/python3.10/site-packages/hailo_model_zoo/core/main_utils.py", line 126, in parse_model
    raise Exception(f"Encountered error during parsing: {err}") from None
Exception: Encountered error during parsing: '/Cast_5_output_0_value'

Please help.